Clinical Validation for Investors: Proof, Pilots, and Adoption

Clinical validation is not an academic checkbox.

For investors, it is a risk filter.

When investors ask about clinical validation, they are not trying to turn your startup into a research paper. They are trying to answer one fundamental question:

Will clinicians actually adopt this in real care?

In fundraising, clinical validation is mostly about execution risk. Investors are not asking whether your product is perfect. They are asking whether it will be used consistently, paid for, and renewed once it enters a real clinical workflow.

What investors are really evaluating is belief: belief that clinicians will change behavior and that the product can survive workflow friction. In practical terms, this means understanding whether the product solves a painful problem (not a nice-to-have), fits naturally into existing workflows, gets used without founder involvement, and produces measurable improvement rather than just positive feedback.

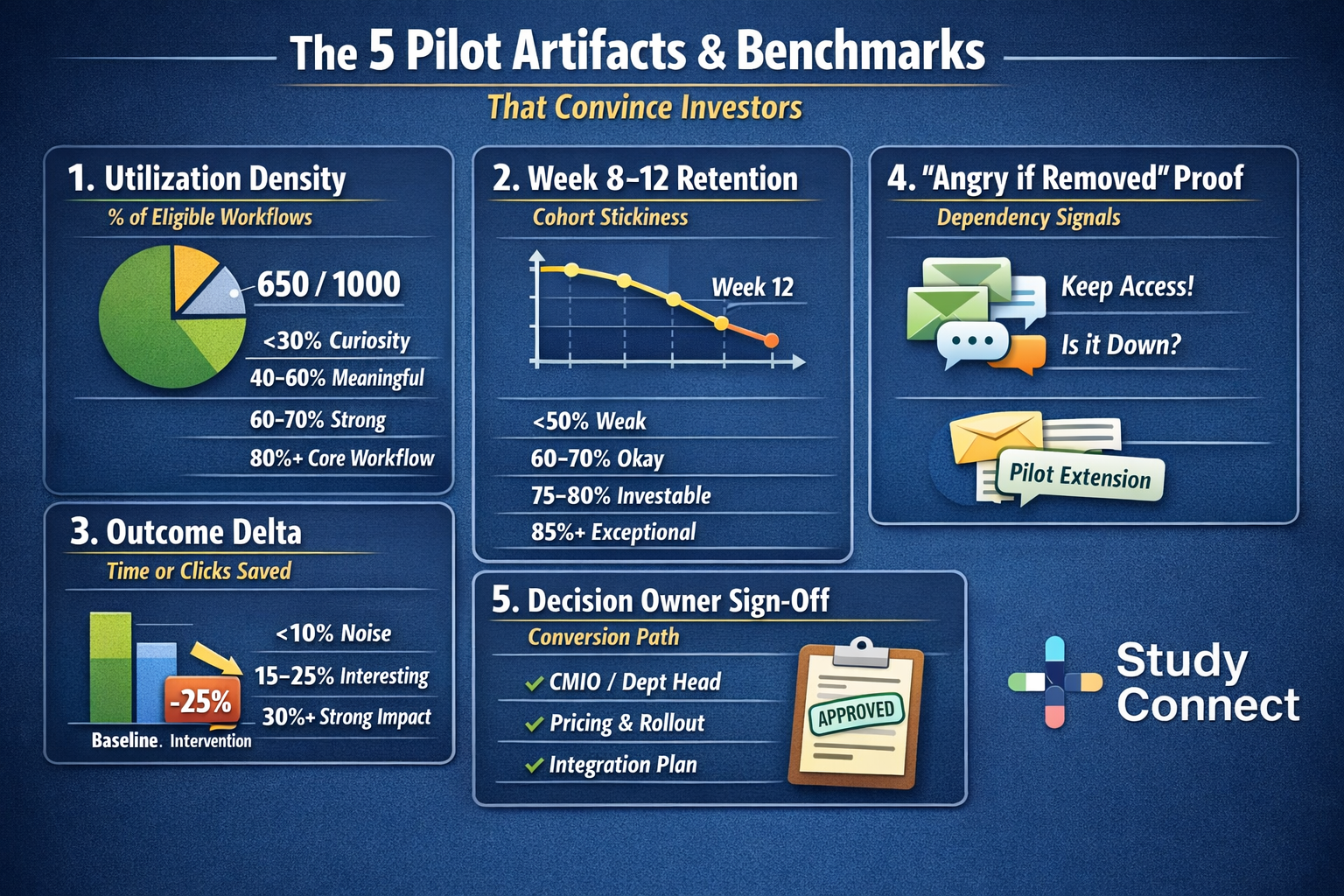

In diligence, opinions rarely move decisions. Behavior does. Usage patterns, retention over time, and clear before-and-after deltas matter far more than testimonials.

Why Clinical Validation Matters to Investors

Clinical validation reduces multiple risks at once. It lowers adoption risk (clinicians actually use it), regulatory risk (the pathway and timelines are understood), data risk (results hold up outside ideal conditions), and business model risk (buyers can justify spend through outcomes and ROI).

This is why many investors treat “no outcomes and no ROI” as a fast rejection. Strong validation shortens sales cycles, improves retention, strengthens reimbursement narratives, and removes ambiguity during fundraising.

The Evidence Investors Don’t Trust

Founders often fail not because they lack evidence, but because they bring evidence that does not reduce risk.

Positive feedback from clinicians is not validation. Polite enthusiasm does not equal behavior change. Investors know clinicians can like an idea without ever using it. What matters is whether the product is used repeatedly, on real patients, without hand-holding.

A regulatory pathway is not validation either. FDA clearance reduces uncertainty around approval, not adoption. Even after clearance, investors still ask the same question: will clinicians actually use this in live care?

Similarly, pilots alone do not prove validation. A pilot only matters if it converts to paid use, expands to additional departments or sites, sustains usage beyond the novelty phase, and produces buyer-signed ROI. Pilots that end quietly are treated as neutral evidence.

What Real Clinical Validation Looks Like

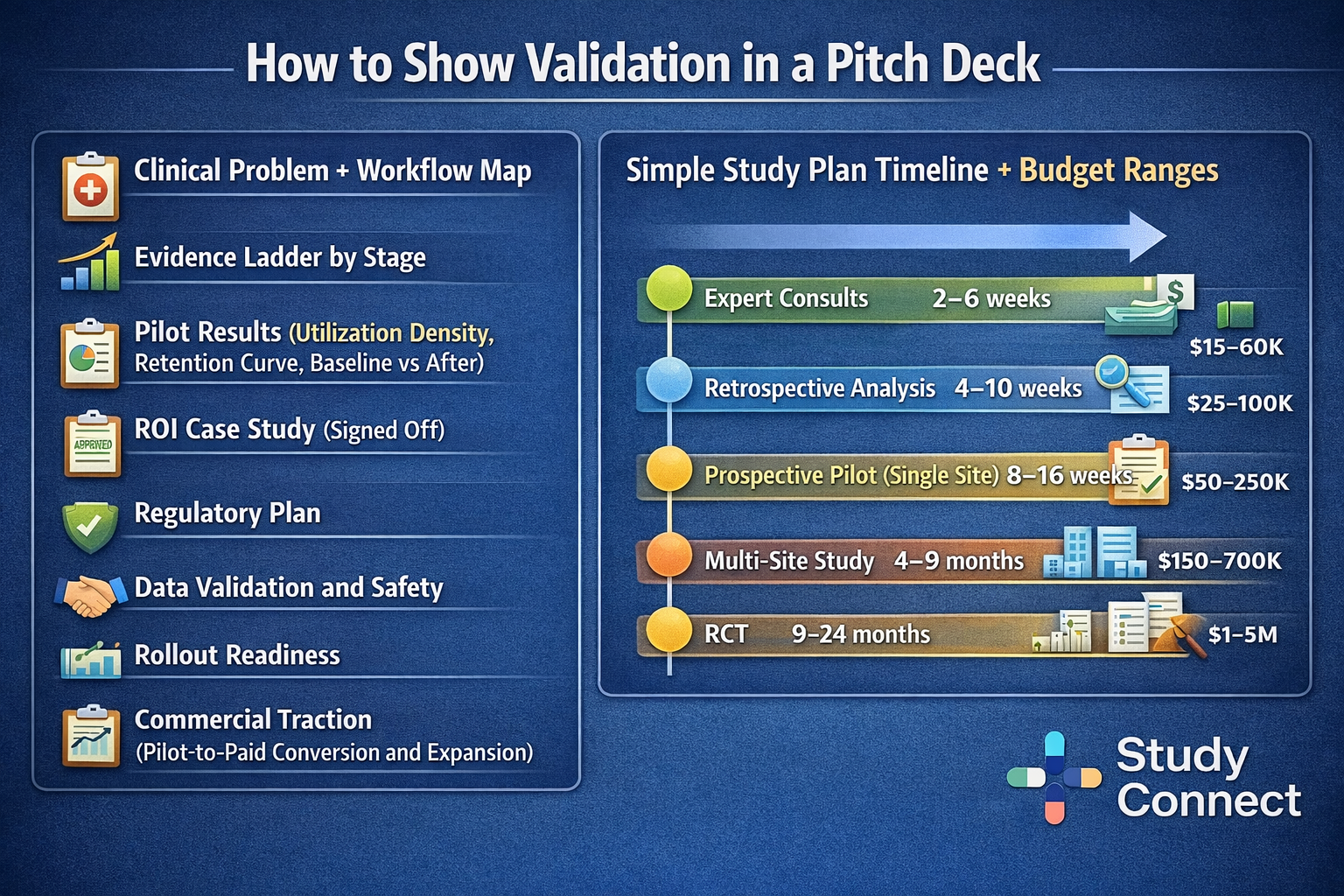

Investors apply different proof standards at different stages. The goal is not perfection; it is appropriate risk reduction.

At the earliest stages, investors look for clinician pull and workflow fit. Small numbers are acceptable if the signal is real: repeat use, in-workflow placement, and clear evidence that the product replaces something clinicians already do.

At seed, the focus shifts to stickiness and conversion. Usage metrics, cohort retention, pilot-to-paid conversion, and at least one credible ROI case study matter more than anecdotes.

By Series A, investors expect repeatable adoption. One strong pilot is no longer enough. They look for consistent utilization across sites or departments, renewals and expansions, standardized rollout and measurement processes, and operational readiness for enterprise deployment.

Case study: When the wrong validation gets dismissed

This happens even to strong teams. The fix is to identify which proof is missing and swap in the right type of proof.

Flatiron Health

Flatiron Health is a classic example of the wrong validation early on. The founders collected strong verbal support from oncologists, including statements of need and willingness to buy, but early investors kept asking a different question: “Who is live today, with real patient data, in daily workflow?” The issue was not the problem itself; it was proof of dependency. Doctors had not yet paid the behavioral cost of switching and using the product daily.

Later, Flatiron embedded inside real oncology clinics and ran the product alongside existing systems. It was messy and unscalable at first, but it created the proof investors needed: daily use, real data, and real reliance.

The investor takeaway: no pain, no proof (behavior beats praise)

What changed investor belief was not more praise. It was behavior: clinics live in production, clinicians entering real patient data daily, workflow reliance for reporting and outcomes work, complaints when access was limited or the system was slow, and early switching costs forming. This is the pattern investors trust: cost paid → behavior change → measurable delta → budget follows.

How to apply this to your startup

Work through these steps in order:

1. Replace letters of support with live workflow trials, even small ones

2. Instrument usage from day one (utilization density, retention cohorts, eligible workflow definitions)

3. Measure a before-and-after outcome (time saved, throughput, reduced rework, fewer clicks)

4. Get sign-off from a decision owner (CMIO, department head, ops leader)

5. Design the conversion path (decision date, pricing model, rollout steps, integration plan)
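The before-and-after step can be made concrete with a small calculation. The Python sketch below compares mean minutes per encounter before and after deployment and adds a percentile bootstrap interval, so the delta is not reported as a bare point estimate. The record format, the sample values, and the function names (`split_periods`, `before_after_delta`, `bootstrap_ci`) are hypothetical; substitute whatever your own instrumentation actually captures.

```python
import random
from statistics import mean


def split_periods(records):
    """Split hypothetical (period, minutes) records into before/after lists."""
    before = [m for period, m in records if period == "before"]
    after = [m for period, m in records if period == "after"]
    if not before or not after:
        raise ValueError("need encounters in both periods")
    return before, after


def before_after_delta(records):
    """Return (mean_before, mean_after, minutes_saved, pct_change)."""
    before, after = split_periods(records)
    mb, ma = mean(before), mean(after)
    saved = mb - ma  # positive means time was saved per encounter
    return mb, ma, saved, 100.0 * saved / mb


def bootstrap_ci(records, n_resamples=2000, alpha=0.05, seed=0):
    """Percentile bootstrap interval for minutes saved per encounter."""
    rng = random.Random(seed)  # seeded so the interval is reproducible
    before, after = split_periods(records)
    deltas = []
    for _ in range(n_resamples):
        # Resample each period independently, with replacement.
        b = [rng.choice(before) for _ in before]
        a = [rng.choice(after) for _ in after]
        deltas.append(mean(b) - mean(a))
    deltas.sort()
    lo = deltas[int((alpha / 2) * n_resamples)]
    hi = deltas[int((1 - alpha / 2) * n_resamples) - 1]
    return lo, hi


if __name__ == "__main__":
    # Hypothetical documentation minutes per encounter.
    records = [("before", 12.0), ("before", 14.0), ("before", 13.0),
               ("after", 9.0), ("after", 10.0), ("after", 11.0)]
    mb, ma, saved, pct = before_after_delta(records)
    print(f"{mb:.1f} -> {ma:.1f} min, saved {saved:.1f} ({pct:.1f}%)")
    print("95% CI:", bootstrap_ci(records))
```

A before-and-after comparison is not randomized, so a delta like this can still reflect seasonality, staffing changes, or other concurrent shifts. It supports a pilot narrative rather than a causal claim.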

If you need faster access to credible clinicians and study experts, this is the gap StudyConnect is built to close: finding the right people to validate workflow reality, endpoints, and study design early.

Contact the StudyConnect team at matthew@studyconnect.world to connect with the right medical advisor.

Designing studies that investors trust

Pick the right proof type: retrospective vs prospective vs RWE vs RCT

Each proof type answers a different investor fear. Retrospective analysis is fast and cheap (if data access exists) and works well for early signal and hypothesis testing, but it can suffer from selection bias and confounding. Prospective pilots are stronger because they reflect current workflow and allow adoption metrics and before-and-after outcomes, but they are still not randomized. Real-world evidence (RWE) draws on real practice deployments and supports generalizability, but it must handle bias carefully. Randomized controlled trials (RCTs) are the most credible evidence of efficacy when feasible and provide a clearer bridge to regulatory-grade and adoption-grade claims.

A practical mapping by stage looks like this: pre-seed often combines retrospective analysis with a small prospective workflow pilot; seed focuses on prospective pilots with strong instrumentation and may expand to multi-site observational work; Series A typically expects multi-site prospective evidence and may warrant RCTs when they unlock reimbursement or remove major adoption barriers.

Endpoints that matter: workflow, outcomes, and buyer ROI

Choose endpoints that map to a buyer’s budget logic. Keep endpoints measurable and specific, and avoid “improved satisfaction” as a primary endpoint unless it ties to a hard KPI.

High-trust endpoints investors understand include:

- utilization density

- week 8–12 cohort retention

- minutes saved per encounter

- reduced after-hours work

- throughput

- fewer errors or reduced rework

- improved coding and revenue capture (when relevant)

- fewer missed follow-ups or delayed actions (in inbox/care gaps tools)
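Utilization density and week 8–12 cohort retention are easy to compute inconsistently, which weakens them in diligence. The Python sketch below shows one possible definition, assumed here for illustration: utilization density as tool uses divided by eligible workflow instances, and a clinician counted as retained if active in at least one of weeks 8 through 12 after first use. Both definitions, the `(clinician_id, date)` event format, and the function names are hypothetical; the point is to fix a definition early and apply it the same way every time.

```python
from collections import defaultdict
from datetime import date

RETENTION_WINDOW_END_DAY = 91  # week 12 spans days 84-90 after first use


def utilization_density(tool_uses, eligible_instances):
    """Share of eligible workflow instances in which the tool was used."""
    if eligible_instances <= 0:
        raise ValueError("eligible_instances must be positive")
    return tool_uses / eligible_instances


def week_8_12_retention(events, as_of):
    """Return {first-use month: retained fraction} for mature cohorts.

    events: iterable of (clinician_id, date_of_use).
    A clinician counts as retained if active in any week 8..12 after first use.
    """
    days_by_user = defaultdict(list)
    for user, day in events:
        days_by_user[user].append(day)

    counts = defaultdict(lambda: [0, 0])  # cohort -> [retained, total]
    for days in days_by_user.values():
        first = min(days)
        # Skip clinicians whose week 8-12 window has not fully elapsed yet.
        if (as_of - first).days < RETENTION_WINDOW_END_DAY:
            continue
        cohort = first.strftime("%Y-%m")
        active_weeks = {(d - first).days // 7 for d in days}
        counts[cohort][1] += 1
        if any(8 <= w <= 12 for w in active_weeks):
            counts[cohort][0] += 1
    return {c: r / t for c, (r, t) in sorted(counts.items())}


if __name__ == "__main__":
    events = [
        ("dr_a", date(2024, 1, 1)), ("dr_a", date(2024, 3, 4)),   # week 9: retained
        ("dr_b", date(2024, 1, 1)), ("dr_b", date(2024, 1, 15)),  # week 2 only
        ("dr_c", date(2024, 5, 1)),                               # too recent to judge
    ]
    print(utilization_density(45, 60))
    print(week_8_12_retention(events, as_of=date(2024, 6, 1)))
```

Excluding clinicians whose week 8–12 window has not fully elapsed keeps recent sign-ups from artificially dragging the retention figure down.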

Clinical data validation basics: avoid biased data and weak evaluation

Clinical data validation prevents diligence from collapsing late. Founders should be able to explain data sources and inclusion criteria, missingness patterns, labeling method (and consistency checks), train/test separation and time-split where appropriate, drift monitoring, subgroup performance, and leakage checks.
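Several of these checks are simple to run. The Python sketch below shows a time-based train/test split, a check for patients who appear on both sides of the split (a common leakage path in clinical data), and accuracy broken out by site as one example of subgroup performance. The record fields (`patient_id`, `date`, `site`, `label`, `pred`), the toy data, and the use of accuracy as the metric are hypothetical stand-ins for your own data and endpoint.

```python
from collections import defaultdict
from datetime import date


def time_split(records, cutoff):
    """Train on records dated before cutoff; test on records on or after it."""
    train = [r for r in records if r["date"] < cutoff]
    test = [r for r in records if r["date"] >= cutoff]
    return train, test


def leaked_patients(train, test):
    """Patients present in both splits, which can inflate test performance."""
    return {r["patient_id"] for r in train} & {r["patient_id"] for r in test}


def subgroup_accuracy(records, key):
    """Accuracy per subgroup (e.g. per site) to expose uneven performance."""
    tally = defaultdict(lambda: [0, 0])  # group -> [correct, total]
    for r in records:
        tally[r[key]][0] += int(r["pred"] == r["label"])
        tally[r[key]][1] += 1
    return {g: c / n for g, (c, n) in sorted(tally.items())}


if __name__ == "__main__":
    records = [
        {"patient_id": "p1", "date": date(2023, 1, 5), "site": "A", "label": 1, "pred": 1},
        {"patient_id": "p2", "date": date(2023, 2, 9), "site": "B", "label": 0, "pred": 0},
        {"patient_id": "p1", "date": date(2023, 7, 1), "site": "A", "label": 1, "pred": 1},
        {"patient_id": "p3", "date": date(2023, 8, 3), "site": "B", "label": 1, "pred": 0},
        {"patient_id": "p4", "date": date(2023, 9, 12), "site": "A", "label": 0, "pred": 0},
    ]
    train, test = time_split(records, cutoff=date(2023, 6, 1))
    leaks = leaked_patients(train, test)
    # One option: drop leaked patients from the test set before scoring.
    clean_test = [r for r in test if r["patient_id"] not in leaks]
    print("leaked:", leaks)
    print("accuracy by site:", subgroup_accuracy(clean_test, "site"))
```

Note that a time split alone does not prevent leakage: patient `p1` has encounters on both sides of the cutoff. In the toy data, scoring by site after removing that patient shows the model right at site A and wrong at site B, the kind of unevenness that a single aggregate number would hide.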

Regulators and standards (FDA/MHRA/CE): what to say in investor diligence

Investors do not need you to pretend you’re already approved. They want a credible plan in clean language: a plain-English intended use statement, your risk classification assumptions and rationale, a pathway hypothesis (FDA 510(k), De Novo, or PMA; UKCA/MHRA; or CE marking), the standards you are aligning to where relevant, the clinical evidence you will generate at each stage, and what post-market monitoring will look like, especially if you’re using real-world data.

Turning conversations into real clinical validation

Most medical startups don’t fail because the idea is bad; they fail because early validation is shallow.

Founders often talk to the first available clinicians, not the right mix of clinical, operational, research, and regulatory experts needed to test feasibility properly.

StudyConnect exists to fix that. It gives founders fast access to verified clinicians, trial investigators, biostatisticians, informatics leaders, and regulatory experts, so conversations aren’t opinion-driven; they’re structured around workflow reality, evidence requirements, and adoption constraints.

High-signal validation isn’t about pitching. It’s about walking through real workflows, quantifying pain, stress-testing willingness to switch, and uncovering blockers early: integration, liability, training, and policy friction.

When done right, these conversations produce consistent language, defensible assumptions, and reference-ready insights that hold up in front of investors.

This approach mirrors how regulated industries operate: define claims, document evidence, and prove reliability across settings. Even if you’re not building a drug or device, healthcare investors expect that discipline.

StudyConnect helps founders behave like regulated operators from day one, turning expert conversations into repeatable, credible validation rather than anecdotes.
