Common mistakes in Clinical Trials: Ultimate Guide (Avoid 3–6 Month Delays)

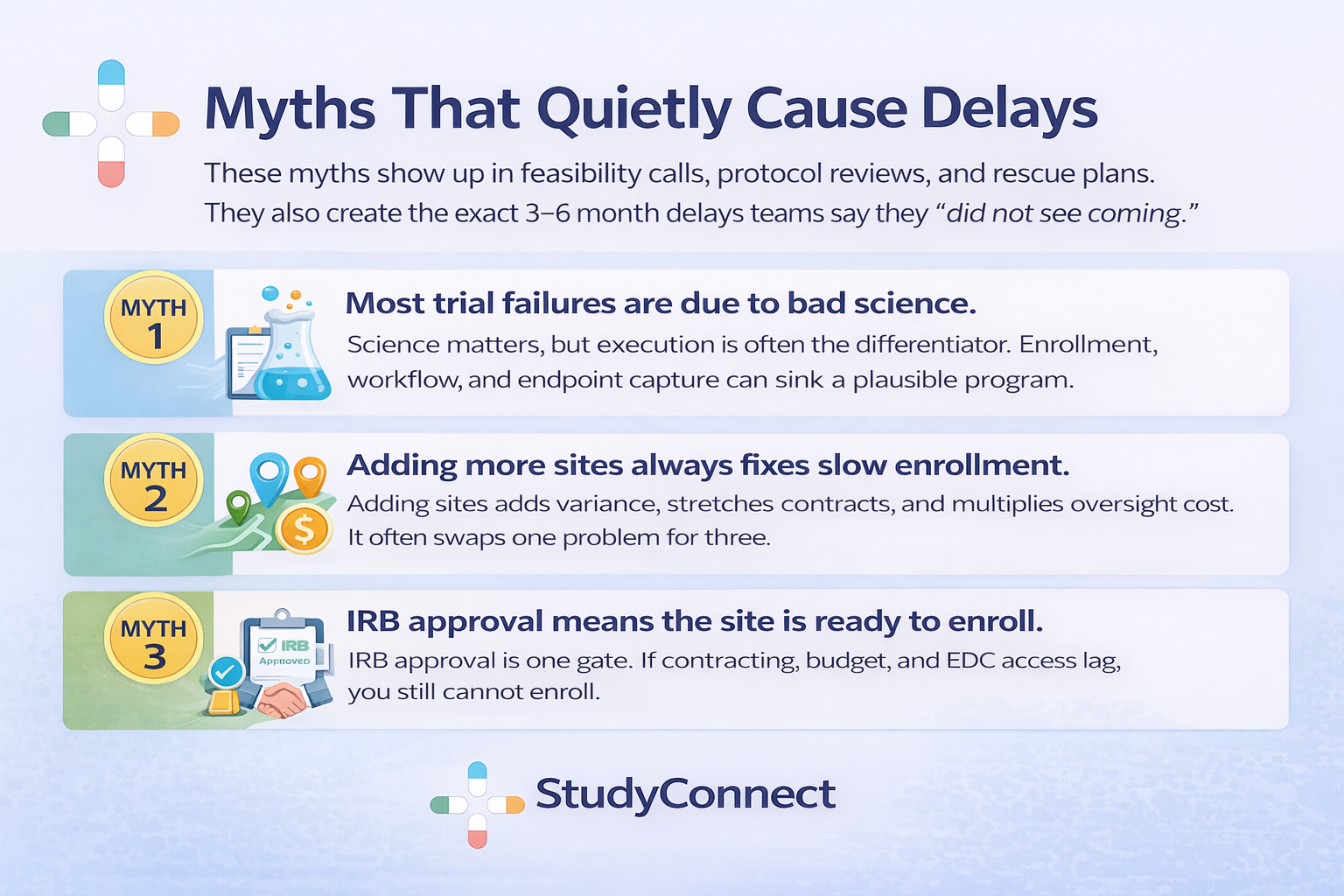

Clinical trials fail more often than most teams expect. And a painful number of failures are not “bad science” problems. They are process problems you can spot early and fix fast. This blog breaks the mistakes down by trial stage, with practical prevention steps.

Why do clinical trials fail?

Many programs enter the clinic with strong preclinical logic and still collapse.

Across therapeutic areas, only about 5–14% of drug candidates make it from Phase I to approval, meaning roughly 9 in 10 never reach the market (MedX DRG).

That attrition is not just biology. It is also execution.

Why this topic matters

Clinical trials are slow, expensive, and exposed to compounding friction. A “small” process miss can trigger amendments, re-consents, retraining, and rework.

Here is the uncomfortable part: later-stage failures increasingly include non-scientific drivers.

In an analysis of Phase II/III terminations, enrollment issues were cited in nearly 18% of terminations with reasons provided, while strategic/business decisions accounted for ~36% (Nature Reviews Drug Discovery).

That is why operational discipline is not “admin.” It is core strategy.

Process mistakes harm outcomes in four ways:

- Patient safety: unclear procedures, drifting eligibility, late safety reporting

- Data quality: inconsistent endpoint capture, missing data, avoidable queries

- Timelines: IRB cycles, contract stalls, slow recruitment, delayed database lock

- Regulatory risk: weak rationale, poor documentation, audit findings

I have repeatedly seen trials stumble because people thought they agreed on the objective, but they did not. In one study I was close to, the sponsor aimed for a strong clinical signal while operations optimized for speed. Endpoints were interpreted differently across sites, data became inconsistent, and we were forced into an amendment and partial rework after dozens of patients were enrolled. It was not a science failure. It was a failure to align on what “success” meant before first patient in.

How to use this blog

Each mistake below includes what it looks like, why it happens, impact, and a prevention step.

Use it like a checklist during study planning, and like a diagnostic tool once the trial is live.

We will cover mistakes by stage:

- Planning (objectives, feasibility, risk)

- Protocol design (endpoints, eligibility, bias, stats)

- Site start-up (IRB/ethics, contracts, training, documentation)

- Trial conduct (recruitment, retention, deviations, clinical reasoning)

- Data, monitoring, quality (EDC to database lock)

- Ethics and compliance (GCP-ready, audit-ready)

Common mistakes in the Planning stage

Planning mistakes carry a near-certain downstream cost. When assumptions are wrong at this stage, teams pay later through amendments, delays, and noisy or unusable data.

Mistake 1: Poorly defined trial objectives not aligned with regulators

Teams often write objectives that sound scientific but do not support a real regulatory or clinical decision. Decision-focused objectives are different from exploratory curiosity.

In practice, this shows up as objectives that do not clearly map to a regulatory or clinical decision, heavy use of vague “we will explore…” language, and endpoints or analyses that cannot actually support the claim the team wants to make. These issues usually arise because teams prioritize speed or publication goals, regulatory thinking enters too late, and operations is not asked whether objectives are feasible to execute consistently across sites.

The impact is significant: studies fail to hold up during regulatory interactions, protocols require amendments to “tighten” objectives, and sites collect endpoints inconsistently because they are unclear on what truly matters.

Prevention: Sanity-check objectives against ICH E6(R3) Good Clinical Practice principles and regional expectations (FDA/EU) early. Use the ICH Guideline for Good Clinical Practice E6(R3) as a shared baseline. Hold a 60-minute objective alignment review with clinical, ops, data, biostats, and regulatory.

Mistake 2: Optimistic feasibility assessments and enrollment math

Feasibility is where timelines live or die.

And most teams still do feasibility using the wrong inputs.

Recruitment delays are blamed for trial delays in about 80% of studies, and in many datasets ~80% of trials fail to hit targeted enrollment within the planned timeframe (Gitnux).

Even worse, ~48% of trial sites under-enroll, and ~85% of trials fail to retain enough participants without extensions (Gitnux).

This usually looks like sites quoting prevalence instead of validating eligible charts, ignoring competing trials at the same institution, underestimating visit burden, and having no backup plan if the first few sites miss targets. The root causes are leadership pressure to commit to aggressive timelines, feasibility calls conducted only with investigators (not coordinators), and teams avoiding hard questions to preserve momentum.

In real feasibility calls, sites often promise optimistic numbers without pushback. Once live, coordinators struggle, referrals drop, screening logs weaken, and dashboards stay “green” because averages hide site-level failure. By the time reality sets in, months are lost and the default rescue is “add more sites.”

The impact includes under-enrollment, extensions, higher cost per patient, lower team morale, and selection bias as sites push borderline patients.

Prevention: Require chart-based validation of inclusion/exclusion criteria, explicit documentation of competing trials, and coordinator-led scoring of visit burden (time, staffing, workflow, patient travel), and build a backup recruitment plan before first patient in.

Early warning signals during planning include how many sites actually validated criteria against charts, how often core assumptions change week to week, and vague or inconsistent answers about where patients will realistically come from.
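
The enrollment math behind a feasibility assessment can be sketched in a few lines. This is a minimal illustration, not a validated forecasting model: the `SiteFeasibility` fields, the site names, and every rate below are hypothetical, and the calculation assumes all sites enroll at a steady rate from day one.

```python
from dataclasses import dataclass
import math


@dataclass
class SiteFeasibility:
    """Hypothetical per-site inputs gathered during feasibility."""
    name: str
    eligible_per_month: float   # eligible patients per month at the site
    consent_rate: float         # fraction of eligible patients who consent
    screen_failure_rate: float  # fraction of consented patients who fail screening


def monthly_enrollment(site: SiteFeasibility) -> float:
    """Expected enrolled patients per month at one site."""
    return site.eligible_per_month * site.consent_rate * (1 - site.screen_failure_rate)


def months_to_target(sites: list[SiteFeasibility], target: int) -> float:
    """Months needed to reach the enrollment target across all sites."""
    total_rate = sum(monthly_enrollment(s) for s in sites)
    if total_rate <= 0:
        return math.inf
    return target / total_rate


# Illustrative numbers only: the same two sites, planned once from
# prevalence-style estimates and once from chart-validated counts.
optimistic = [SiteFeasibility("Site A", 20, 0.5, 0.2), SiteFeasibility("Site B", 10, 0.5, 0.2)]
validated = [SiteFeasibility("Site A", 8, 0.4, 0.35), SiteFeasibility("Site B", 4, 0.4, 0.35)]

print(round(months_to_target(optimistic, 120), 1))
print(round(months_to_target(validated, 120), 1))
```

With these made-up inputs, the optimistic plan reaches 120 patients in 10 months, while the chart-validated plan needs more than three years. The point is not the specific numbers but how quickly small changes in each input compound.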

Mistake 3: Ignoring the biggest barrier — site capacity and friction

The biggest barrier to clinical trials is often not patients but site capacity: contracting speed, staffing turnover, clinic workflow, and competing priorities.

This mistake appears when PI enthusiasm is treated as readiness, activation plans assume hospital legal and finance teams move quickly, and monitoring or data cleaning resources are under-budgeted. The result is start-up stalls, missed enrollment windows, slow query turnaround, declining retention due to poor patient experience, and growing risk at database lock.

Prevention: Build a most-likely plan rather than a best-case scenario, budget realistically for monitoring and proactive data cleaning, and add contingency sites early instead of waiting for panic.

Common mistakes in Protocol design (endpoints, eligibility, bias, stats)

Protocol design is where “simple on paper” becomes “hard in clinics.” A protocol that ignores real workflows will generate deviations and missing data.

Mistake 1: Vague primary or secondary endpoints

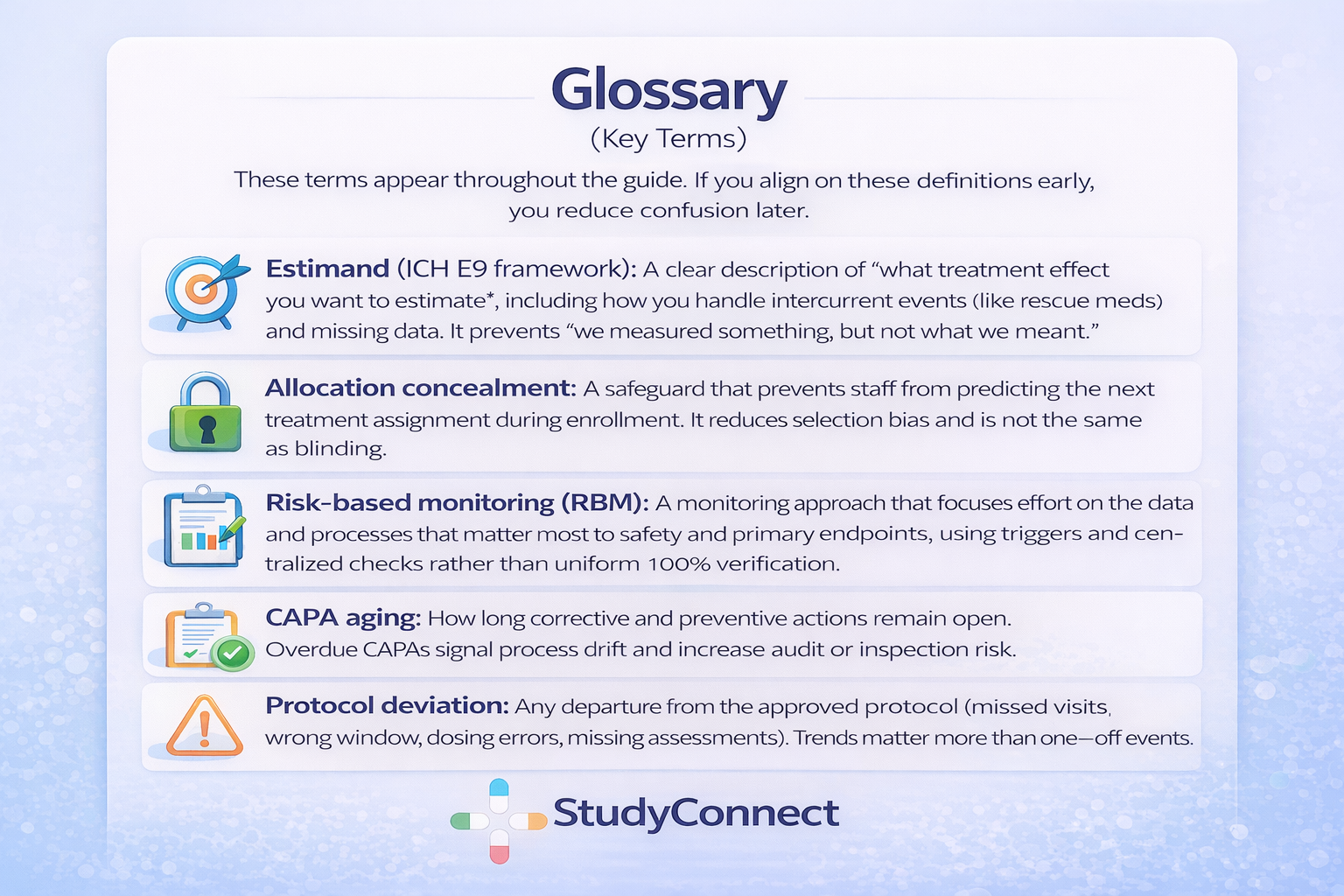

One common mistake is having vague primary or secondary endpoints and unclear estimands. Endpoints need to be measurable, consistent, and ready to support decision-making. When they are poorly defined, different sites end up interpreting them in their own way, which fragments the data and weakens the study.

This usually shows up as endpoints that sound clinically meaningful but lack clear operational rules, multiple methods being used to measure the same outcome across sites, and missing data that was never anticipated, often because patients skip the most burdensome or complex assessments.

These issues tend to arise because teams optimize protocols for publication language rather than operational clarity. Estimands and statistical assumptions are often not discussed early enough, and site coordinators and data managers, the people who understand what is actually feasible, are not consulted during protocol development.

The impact can be significant. Sponsors may need to issue protocol amendments just to clarify how measurements should be taken. Missing data increases, conclusions become weaker, and regulators may push back with fundamental questions such as, “What exactly does this estimate represent?”

Prevention: Endpoints should be fully operationalized by specifying who measures them, when they are measured, which instrument is used, and what qualifies as a valid assessment. Endpoints should align with both clinical meaning and standard-of-care workflows. Feasibility should be confirmed with coordinators and CRAs before the protocol is finalized, and overall design should remain aligned with ICH E9 principles (including the E9(R1) addendum on estimands), particularly around estimands and handling intercurrent events.

Mistake 2: Overcomplicated protocol (too many visits, procedures, exceptions)

Complex protocols often appear thorough on paper, but they frequently break down during execution. Excessive burden on sites and participants leads directly to protocol deviations, higher dropout rates, and slower enrollment.

This typically shows up as long visit schedules filled with numerous “optional” procedures or exception cases, case report forms that are overly complex and poorly aligned with actual clinic workflows, and a pattern of many small deviations appearing early in the enrollment phase.

These problems usually stem from scope creep driven by too many stakeholders, a mindset of “let’s collect it in case we need it,” and the absence of a pilot workflow review with site coordinators before finalizing the protocol.

The impact is tangible and costly. Enrollment slows, participant retention drops, and data quality becomes inconsistent across sites. At the same time, monitoring efforts and data cleaning workloads increase sharply.

Prevention: Remove low-value data fields and procedures, simplify visit schedules and window logic, and conduct a structured workflow review with site coordinators and CRAs prior to protocol finalization. Use eCRF best practices and cross-functional review, as described in eCRF Design: Best Practices.

Mistake 3: Unrealistic inclusion/exclusion criteria (screen failures climb)

Eligibility criteria ultimately define the reality of your recruitment. When those criteria are too narrow or unclear, screen failure rates rise quickly and recruitment becomes far more difficult than anticipated.

This often becomes evident within the first few weeks, with high screen failure rates, frequent site-by-site debates over whether borderline patients qualify, and eligibility requirements that depend on data not routinely collected during standard clinical care.

These issues usually arise because eligibility criteria are written without meaningful input from study coordinators, because the pursuit of a “clean” dataset takes priority over real-world feasibility, and because teams assume physicians will refer patients in a perfectly aligned and consistent way.

The consequences are serious. Timelines are extended, expensive rescue strategies become necessary, selection bias can emerge as sites begin cherry-picking easier-to-qualify patients, and additional protocol amendments and retraining efforts are required.

Tufts research noted that modifying eligibility criteria due to design changes or recruitment difficulty is a common driver of amendments, and nearly one-third to nearly half of amendments could have been avoided with better upfront planning (Springer / Tufts).

Common mistakes in Site start-up (IRB/ethics, contracts, training, documentation)

Start-up is where optimism meets institutional reality.

This is also where 3–6 month delays are most preventable.

Mistake: Underestimating IRB/ethics review complexity (3–6 month delays)

IRB delays are not rare. They are structural.

Protocols are often written for scientific peers, not ethics committees.

What it looks like

- Multiple cycles of IRB queries and revisions

- Amendments after initial approval that reset review clocks

- Consent forms that are vague or hard to read

- Missing operational details: recruitment, data handling, safety follow-up

Benchmark impact (seen in practice)

- IRB query cycles plus amendments can add 2–4 months to timelines

- Ongoing sponsor burn can run $50k–$150k per month during delays (expert benchmark from field experience)

Prevention

- Use an IRB-ready checklist before submission

- Improve consent readability and remove jargon

- Include an “operational detail pack” (recruitment flow, data flow, safety reporting)

- Pre-review submissions with experienced coordinators and regulatory staff

- If using decentralized elements, align with IRB concerns about people, data integrity, and oversight using the MRCT Center guidance on IRB/EC considerations for DCT review

Mistake: Starting contracts and budgets too late (activation stalls)

Contracting is not a side task. It is a critical path.

If it starts late, everything else waits.

What it looks like

- IRB approval achieved, but the site cannot activate

- Budget negotiations drag line-by-line

- Legal queues treat CTAs as low priority

Typical impact

- 2–5 months delay per site due to contracting and budget negotiation (expert benchmark from field experience)

Prevention

- Start CTA and budget work early, in parallel with protocol finalization

- Standardize templates and fallback language

- Track “time untouched” weekly (days since last action)

- Escalate when one region stalls behind others without clear reasons

Mistake: Incomplete IRB submissions and missing operational details

Incomplete submissions create rework and lost momentum.

They also increase the chance of re-consent later.

Common gaps

- Recruitment materials not ready or not aligned to consent

- Data handling and privacy language unclear or mismatched

- Safety reporting workflow not described

- Training plans not defined

Impact

- Delayed first patient in (FPI)

- Rework across sites and inconsistent implementation

- Higher risk of IRB-required changes midstream

Prevention

- Use a submission package checklist

- Align recruitment plan, data flow, safety reporting, and training before submission

- Reference global best practice principles such as the WHO Guidance for best practices for clinical trials

Mistake: Documentation chaos (sponsor-CRO-site silos, no version control)

Documentation errors feel small until an audit makes them huge.

And silos make “truth” impossible to find.

What it looks like

- Lost context in email threads

- Inconsistent versions of protocol, manuals, and training

- Documents written once and forgotten

- Manual updates that drift across teams

Impact

- Protocol deviations and inconsistent data capture

- Audit findings and inspection risk

- Retraining cycles and delayed cleaning

Prevention

- Create a single source of truth for essential documents

- Enforce versioning rules and owners for each document

- Maintain a decision log: what changed, why, and when

One practical resource for the human side of these mistakes is the discussion “Are mistakes inevitable in clinical research, especially with data entry?” It reflects a real theme: mistakes happen, but systems determine whether they get caught early.

Common mistakes during Trial conduct (enrollment, retention, deviations, reasoning)

Conduct is where trial plans face real patients and real clinics.

Small misses here can turn into systematic bias.

Mistake: No real recruitment workflow (anonymized cardiology case + fix)

Recruitment is a workflow problem, not a motivation problem.

If workflow is wrong, reminders and pressure will not fix it.

Anonymized Phase II cardiology story (real-world pattern)

A multicenter Phase II cardiology trial aimed to enroll patients in a narrow window after discharge. Feasibility looked strong on paper. But the protocol was finalized before coordinators and discharge planners were involved.

Patients were discharged within 24–36 hours, and research teams were notified too late to screen and consent. Enrollment stalled. After three months, the study was at <15% of projected enrollment.

Exact fix used

- Clarified the eligibility window to allow pre-consent during inpatient stay

- Embedded a screening checklist into the discharge workflow

- Gave coordinators standing permission to pre-screen EHRs daily

- Retrained sites using a step-by-step enrollment playbook

- Used expedited IRB review for the operational amendment

Result

- Enrollment tripled within 6 weeks

- The sponsor avoided adding sites, likely saving several hundred thousand dollars and preventing an added ~6-month extension (field benchmark)

Mistake: Enrollment pushed over enrollment quality (screen failures, borderline patients)

Fast enrollment that breaks eligibility is not success.

It is hidden bias and future rework.

What it looks like

- Rising screen failure rate

- “Creative interpretation” of criteria

- Differences in eligibility decisions across sites

Why it happens

- Pressure to hit numbers

- Unclear criteria and no decision tree

- Weak coordinator training

Impact

- Wasted screening cost and avoidable safety risk

- Selection bias and weaker endpoint credibility

- Increased amendments and monitoring findings

Prevention

- Weekly screen failure review with root cause notes

- Eligibility decision tree for edge cases

- Retrain early, before drift becomes culture
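
A weekly screen failure review can start from something as simple as the screening log itself. The sketch below is an illustration under stated assumptions: the log layout, site names, and failure reasons are hypothetical, and a real study would pull these rows from its screening log or EDC export.

```python
from collections import Counter, defaultdict

# Hypothetical screening log rows: (site, outcome, reason).
# Reason is None for patients who were enrolled.
screening_log = [
    ("Site A", "enrolled", None),
    ("Site A", "screen_fail", "lab value out of range"),
    ("Site A", "screen_fail", "lab value out of range"),
    ("Site B", "enrolled", None),
    ("Site B", "enrolled", None),
    ("Site B", "screen_fail", "prohibited medication"),
]


def screen_failure_summary(log):
    """Return the screen failure rate and most common failure reasons per site."""
    screened = Counter()
    failed = Counter()
    reasons = defaultdict(Counter)
    for site, outcome, reason in log:
        screened[site] += 1
        if outcome == "screen_fail":
            failed[site] += 1
            reasons[site][reason] += 1
    return {
        site: {
            "rate": failed[site] / screened[site],
            "top_reasons": reasons[site].most_common(2),
        }
        for site in screened
    }


for site, stats in screen_failure_summary(screening_log).items():
    print(site, f"{stats['rate']:.0%}", stats["top_reasons"])
```

Grouping reasons by site is the useful part: a single criterion driving most failures at one site usually points to a training or interpretation gap rather than a patient population problem.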

Errors in clinical reasoning (at sites and sponsor level)

Clinical reasoning errors can distort eligibility and safety reporting.

They also create inconsistent populations across sites.

Common reasoning errors:

- Anchoring: locking onto the first diagnosis and missing alternatives

- Confirmation bias: collecting evidence to support eligibility, not test it

- Ignoring competing causes: misattributing symptoms to the target condition

- Over-reliance on referral: assuming clinicians will refer consistently

Prevention

- Structured screening checklist tied to criteria definitions

- Second review for edge cases (PI + coordinator or medical monitor)

- Standard definitions in manuals and training

What can go wrong in clinical trials: deviations, retention drops, safety reporting delays

Most failures show early signals if you track them weekly.

You do not need to wait for a crisis.

Common issues:

- Missed visits and out-of-window assessments

- Dosing errors and protocol drift

- AE/SAE under-reporting or late reporting

- Retention drops after burdensome visits

Weekly early warning signals (conduct)

- Deviation trends by type and by site

- Data entry delay (days from visit to entry)

- Site responsiveness latency

- Retention rate by visit number and procedure burden

For additional lived experience from CRAs, the thread on “What’s the worst mistake you’ve made as a CRA?” highlights a consistent theme: small misses become big when documentation and escalation are weak.

Common mistakes in Data, monitoring, and quality (from entry to database lock)

Data quality does not start at database lock.

It starts when a coordinator reads the CRF and tries to map it to real care.

Mistake: Inconsistent data capture and CRFs that don’t match site reality

If the CRF does not match workflow, sites will improvise.

Improvisation becomes inconsistency, and inconsistency becomes queries.

What it looks like

- Same field interpreted differently across sites

- Repeated queries on “obvious” items

- Source data that does not map cleanly to EDC

Why it happens

- CRFs built without coordinator input

- Definitions missing or unclear

- Training focuses on theory, not examples

Impact

- Longer cleaning cycles and delayed database lock

- Increased missing data

- Weaker endpoint interpretability

Prevention

- CRF review with coordinators before final build

- Clear data definitions and completion examples in the manual

- Use interdepartmental review and QC/QA as recommended in eCRF Design: Best Practices

Mistake: Delayed data cleaning and lack of real-time monitoring

Late cleaning is expensive cleaning.

It also forces sites to reconstruct context months later.

What it looks like

- Query backlogs

- “End-of-study cleaning sprint” culture

- Missing data discovered too late to recover

Impact

- Delayed database lock and delayed decisions

- Higher cost due to extended trial operations

- Increased risk that endpoints become uninterpretable

Early warning signals (data)

- Weekly query volume trend and repetitive query types

- Missing data patterns across sites

- Time from visit to entry increasing over time

Prevention

- Weekly data review rhythm with clear owners

- RBM with centralized dashboards for timeliness and trends

- Trigger-based escalation when a site crosses thresholds
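
Trigger-based escalation can be as simple as comparing each site's weekly metrics against agreed limits. The sketch below is illustrative only: the `SiteMetrics` fields and every threshold value are hypothetical, and real limits belong in the study's monitoring plan.

```python
from dataclasses import dataclass


@dataclass
class SiteMetrics:
    """Hypothetical weekly data-quality snapshot for one site."""
    site: str
    open_queries: int
    median_entry_lag_days: float
    missing_primary_endpoint_pct: float


# Hypothetical thresholds, chosen only for illustration.
THRESHOLDS = {
    "open_queries": 50,
    "median_entry_lag_days": 7,
    "missing_primary_endpoint_pct": 5.0,
}


def breached(metrics: SiteMetrics) -> list[str]:
    """Return the names of all metrics that exceed their threshold."""
    return [name for name, limit in THRESHOLDS.items() if getattr(metrics, name) > limit]


weekly = [
    SiteMetrics("Site A", open_queries=72, median_entry_lag_days=3, missing_primary_endpoint_pct=6.5),
    SiteMetrics("Site B", open_queries=12, median_entry_lag_days=4, missing_primary_endpoint_pct=1.0),
]

for m in weekly:
    triggers = breached(m)
    if triggers:
        print(f"Escalate {m.site}: {', '.join(triggers)}")
```

Keeping the thresholds in one place makes the escalation rule easy to review and change, which matters more than the exact values chosen.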

Mistake: Stats plan and analysis choices misaligned with protocol reality

SAP-protocol misalignment creates regulatory pain.

It also creates internal debate when timelines are tight.

What it looks like

- Analysis assumptions broken by missing data or visit windows

- Post-hoc sensitivity analyses added under pressure

- “We cannot answer the question we asked” moments

Impact

- Uninterpretable results or reduced confidence

- Regulatory pushback and additional analyses

- Delays in reporting and submission readiness

Prevention

- Tight alignment between protocol and SAP

- Plan for missing data early and define sensitivity analyses

- Keep analysis consistent with GCP and ICH principles (without over-complicating)

Common ethical and compliance mistakes (GCP-ready, audit-ready)

Ethics and compliance are not “paperwork.”

They are how you protect participants and make results credible.

Clinical trial ethical issues: consent, risk mitigation, privacy, vulnerable groups

Ethics issues cause delays because IRBs ask the right questions.

If you do not answer them upfront, you will answer them later under time pressure.

Core issues to address clearly:

- Informed consent clarity and readability

- Minimizing burden and making procedures reasonable

- Fair participant selection and inclusion rationale

- Privacy and data handling, especially with remote tools

- Safety monitoring and who pays for injury or extra care (as applicable)

Missing detail here often triggers IRB queries and can force re-consent later. If your study includes decentralized elements, use the MRCT Center DCT IRB/EC considerations to pre-empt predictable concerns: people, data integrity/security, and oversight.

Are pregnant women usually included in clinical trials for new drugs?

Pregnant women are often excluded from early drug trials.

The main reason is fetal risk and unknown safety, especially when early human safety data is limited.

Later in development, inclusion may happen with strict safeguards. These can include enhanced monitoring, specialized consent language, and pregnancy exposure registries. The key is to plan the ethics and safety monitoring approach early, because it influences eligibility, consent, and AE reporting.

Mistake: Late compliance checks (CAPAs, TMF gaps, training drift)

Reactive compliance creates avoidable findings.

If you only fix issues after audits or monitoring visits, you are already behind.

What it looks like

- Workarounds becoming “normal”

- Essential documents missing or expired in the eTMF

- Training drift over time, especially after staff turnover

- CAPAs opened but not closed

An experienced research professional summarized common documentation pitfalls clearly:

“Clinical trials rely on accurate, complete, and compliant documentation, but even small errors can lead to delays, compliance issues, or even study failures…

- Incomplete or inconsistent source documentation…

- Poor management of essential documents (eTMF issues)…

- Incorrect or missing delegation of authority logs (DOA)…

- Fix: checklists, training, automated reminders, regular QC audits, real-time updates.” (Inusa O, expert field insight)

Prevention

- Routine QC and TMF completeness checks

- Weekly CAPA aging review and escalation rules

- Training refreshers tied to deviation/query trends

- Version control and document ownership enforced

What is CAPA aging (and what does “overdue CAPAs” signal to auditors)?

CAPA aging is the time a corrective or preventive action stays open.

It is a simple metric, but auditors care because it reflects whether you truly control your process.

Overdue CAPAs usually signal one of three issues:

- The root cause was not understood, so the fix is unclear

- Ownership is weak, so actions do not get completed

- The organization tolerates drift and relies on heroics

A weekly CAPA aging review is one of the fastest ways to stay inspection-ready. It turns compliance from a reaction into a system.
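
CAPA aging, as defined above, is straightforward to compute from a list of open items. This is a minimal sketch, assuming a hypothetical record layout of (id, opened, due, closed); the IDs and dates are invented for illustration.

```python
from datetime import date
from statistics import median

# Hypothetical CAPA records: (id, opened, due, closed or None if still open).
capas = [
    ("CAPA-001", date(2024, 1, 10), date(2024, 2, 10), date(2024, 2, 1)),
    ("CAPA-002", date(2024, 2, 1), date(2024, 3, 1), None),
    ("CAPA-003", date(2024, 3, 5), date(2024, 4, 5), None),
]


def capa_aging(records, today):
    """Summarize open CAPAs: count, median days open, and overdue IDs."""
    open_records = [r for r in records if r[3] is None]
    ages = [(today - opened).days for _, opened, _, _ in open_records]
    overdue = [capa_id for capa_id, _, due, _ in open_records if due < today]
    return {
        "open": len(open_records),
        "median_days_open": median(ages) if ages else 0,
        "overdue": overdue,
    }


print(capa_aging(capas, today=date(2024, 3, 20)))
```

Reporting the median alongside the overdue list keeps one very old item from hiding behind an otherwise healthy average.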

Device trials callout: what changes under ISO 14155 and EU MDR

Device trials add specific expectations that drug teams sometimes underestimate.

If you are running device or combination studies, plan for these early.

Practical differences to account for:

- Stronger linkage to risk management and benefit-risk documentation

- Device accountability and traceability (including training on use)

- Usability and human factors considerations tied to outcomes

- Vigilance reporting expectations and timelines

- EU MDR clinical evaluation context and evidence planning

- Alignment with ISO 14155 principles for device clinical investigation

This is not about adding paperwork. It is about preventing predictable regulatory friction later.

How to improve clinical trials: a stage-by-stage prevention system

A prevention system beats a rescue plan.

The easiest time to save 3–6 months is before the first patient is enrolled.

Weekly early warning dashboard (signals by stage)

Most teams track milestones. Few track friction.

A weekly dashboard should show latency, churn, and quality signals.

Copy-ready template (simple version):

Planning

- Sites validated I/E against charts: # validated / # targeted

- Competing trials documented: yes/no by site

- Assumption changes this week: count + description

Protocol

- Revision churn: # major changes this week

- Ops feedback share: % of comments from coordinators/CRAs/data

- “TBD” items open: count

Start-up

- Contract “time untouched” (median days)

- Budget “time untouched” (median days)

- IRB query cycles per site (count)

- Speed variance: fastest vs slowest site (days)

Conduct

- Screen failure rate trend (weekly)

- Enrollment vs plan (by site)

- Deviations per subject and per site

- Data entry lag (days from visit to entry)

Data

- Query volume trend (weekly)

- Repetitive queries (top 5 fields)

- Missing data patterns (top variables)

Compliance

- CAPA aging (median days open; overdue count)

- TMF completeness % (critical documents)

- Training completion and refresh status

This comes straight from a practical idea: track “latency” everywhere. When response times stretch week over week, delays are already forming.
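
One way to make "stretching week over week" concrete is to count how many consecutive weeks a latency metric has risen. The sketch below assumes hypothetical weekly medians of contract “time untouched” per site; the two-week cutoff is an arbitrary illustration, not a recommended standard.

```python
def consecutive_increases(values: list[float]) -> int:
    """Count how many of the most recent week-over-week changes were increases."""
    streak = 0
    # Walk backwards through adjacent (previous, current) pairs.
    for previous, current in zip(reversed(values[:-1]), reversed(values[1:])):
        if current > previous:
            streak += 1
        else:
            break
    return streak


# Hypothetical weekly medians of contract "time untouched" (days), oldest first.
weekly_latency = {
    "Site A": [5, 6, 6, 8, 11],
    "Site B": [9, 7, 7, 6, 6],
}

flagged = [
    site
    for site, series in weekly_latency.items()
    if consecutive_increases(series) >= 2
]
print(flagged)
```

Here only Site A is flagged, because its latency rose in each of the last two weeks. A trend rule like this catches a site that is still under any absolute threshold but is clearly heading the wrong way.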

Why do 90% of clinical trials fail? The practical view

The “90% fail” statistic is real in the drug development funnel.

Industry-wide, roughly 90% of drug candidates entering clinical testing never reach approval, with phase-specific failure rates often cited as ~30–40% in Phase I, ~70–80% in Phase II, and ~40–50% in Phase III before regulatory review (MedX DRG).

But the practical reasons are often preventable:

- Recruitment shortfalls and retention problems (common and persistent)

- Operational burden and workflow mismatch

- Endpoints that are not measurable consistently

- Data quality issues and late cleaning

- Funding/time overruns

- Ethics and regulatory friction due to missing details

- Strategic/business reprioritization (often tied to weak execution signals)

Remember the termination data: enrollment issues cited in ~18% of Phase II/III terminations with reasons provided, and strategic/business decisions at ~36% (Nature Reviews Drug Discovery). Process and planning matter because they shape those decisions.
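
The phase-specific failure rates cited in this section can be multiplied through to see how they produce the overall figure. This is back-of-the-envelope arithmetic only: it treats the phases as independent and uses the quoted ranges as-is, which is a simplifying assumption rather than how attrition is formally estimated.

```python
from math import prod

# Phase-specific failure rates as cited in the text (low, high).
failure_ranges = {
    "Phase I": (0.30, 0.40),
    "Phase II": (0.70, 0.80),
    "Phase III": (0.40, 0.50),
}

# Best case uses the lowest failure rate in every phase; worst case the highest.
best_case = prod(1 - low for low, _ in failure_ranges.values())
worst_case = prod(1 - high for _, high in failure_ranges.values())

print(f"Survive all three phases: {worst_case:.1%} to {best_case:.1%}")
```

Under these assumptions, roughly 6% to 13% of candidates make it through all three phases, which is in line with the "about 90% fail" figure once regulatory review is added on top.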

What are the steps in conducting clinical trials for new drugs (and where mistakes happen)

Clinical trials follow a familiar path. The same mistakes show up at predictable points.

- Planning / feasibility - Mistakes: optimistic enrollment math, no backup plan, unclear objectives

- Protocol + SAP design - Mistakes: vague endpoints, unclear estimands, overly complex schedules, narrow I/E

- Site selection + IRB/ethics + contracts - Mistakes: incomplete submissions, late contracting, IRB cycles not planned

- Site activation (training, EDC access, materials) - Mistakes: IRB approved but not activation-ready, training drift begins

- Enrollment + conduct - Mistakes: no recruitment workflow, screen failures rising, protocol drift

- Monitoring + quality management - Mistakes: slow issue escalation, weak RBM triggers, repeated deviations

- Data cleaning + database lock - Mistakes: delayed cleaning, query backlogs, systemic missingness

- Analysis + reporting - Mistakes: SAP misaligned to protocol reality, weak sensitivity planning

If you want a deeper list of design pitfalls, the article on Top Five Mistakes in Clinical Protocol Design covers common failure patterns like too many objectives and missing interdisciplinary input. Use it as a cross-check, not as a template.

How StudyConnect can reduce preventable mistakes (expert access early)

Many avoidable trial mistakes come from building in a silo.

Teams make assumptions about clinics, coordinators, endpoints, and recruitment flow without fast access to the right people.

StudyConnect helps teams talk to verified clinicians, researchers, and trial-experienced experts early, so feasibility and workflow are tested before they become protocol text.

The practical value is reducing guesswork in:

- Feasibility assumptions (chart reality, patient flow, competing trials)

- Endpoint measurability (what can be captured consistently)

- Real workflow design (screening, consent, discharge timing, retention)

- Early compliance readiness (documentation expectations and common gaps)

This is not about replacing CROs or IRBs. It is about reducing preventable surprises before they cost months.

Copy-paste checklists (downloadable-style) before you launch

Checklists prevent “we will fix it later” thinking.

Use these before final protocol sign-off and before first site activation.

Checklist 1: Planning + Protocol/Design (feasibility, endpoints, bias, SAP)

- Objectives are decision-focused and regulator-aware (no vague “explore” goals)

- Primary endpoint is operationalized (who/when/how measured)

- Estimand is discussed and aligned between protocol and SAP

- Inclusion/exclusion validated against recent charts at shortlisted sites

- Competing trials assessed and documented per site

- Visit burden reviewed with coordinators (time, travel, staffing)

- Recruitment plan is written as a workflow, not a hope

- Backup recruitment plan exists (extra sites/referral/DCT elements)

- Bias controls defined (randomization, allocation concealment, blinding/assessment)

- SAP assumptions match protocol realities (windows, missingness, intercurrent events)

- No “TBD” items remain in critical sections (“no TBDs” rule)

- Protocol reviewed by ops, data management, and QA (not only scientists)

Checklist 2: Start-up + Conduct + Data (IRB, contracts, training, monitoring)

- IRB submission pack complete: protocol, consent, recruitment, privacy, data flow

- Consent readability checked and operational details included

- Safety reporting workflow clear (AE/SAE timelines, responsibilities)

- CTA and budget started early; templates standardized

- Weekly “time untouched” tracked for contracts, budgets, and IRB

- Training completed and documented; refresh triggers defined

- Delegation of Authority logs verified and kept current

- Version control rules enforced; single source of truth is live

- Enrollment workflow documented (screening, referral, consent timing)

- Screen log plan in place; weekly screen failure review scheduled

- Deviation review is weekly with root cause and retraining actions

- Data entry timeliness targets set; dashboards monitored weekly

- Query management cadence defined; repetitive queries tracked

- RBM plan defined with triggers, escalation, and centralized checks

- TMF completeness checks scheduled; CAPA aging reviewed weekly

FAQ: quick answers to common clinical trial problems

Clear answers reduce debate and speed up decisions.

These are common questions teams ask when timelines slip.

What are the types of errors in clinical trials?

- Planning errors: unclear objectives, unrealistic feasibility, no risk plan

- Design errors: vague endpoints, narrow I/E, overcomplex schedule, weak bias controls

- Operational/start-up errors: incomplete IRB submission, late contracting, training gaps

- Conduct errors: weak recruitment workflow, protocol drift, retention failures

- Data/analysis errors: inconsistent CRFs, late cleaning, SAP misalignment

- Ethical/compliance errors: consent gaps, privacy issues, TMF/CAPA drift

Every category affects patient safety and data credibility. That is why these errors are not “small.”

How to avoid bias in clinical trials (simple rules that work)

- Randomize properly and protect allocation concealment

- Blind where possible; if not, blind outcome assessment

- Standardize assessments and train with examples

- Build a real retention plan to reduce attrition bias

- Pre-specify outcomes and analysis; document deviations clearly

- Report transparently and keep version control tight

What can go wrong in clinical trials and how can clinical trials be improved?

Most problems are predictable: recruitment shortfalls, IRB cycles, contract stalls, deviations, missing data, and late compliance fixes.

Improvement levers are also predictable: realistic feasibility, IRB-ready protocols, early contracting, workflow-first recruitment, real-time data oversight, and proactive compliance.

If you want a broad research-oriented list of common research mistakes, see Fifteen common mistakes encountered in clinical research.

For a compliance-oriented perspective, the video 5 Clinical Trial Mistakes That Can Ruin Your Reputation is a useful reminder that noncompliance findings are not rare, and reputational damage is real.