Will AI Replace Doctors? The Real Impact of AI on Doctors

AI will change medicine fast. But it will not “fire” doctors in bulk.

What will happen first is quieter: less typing, more screening, more alerts, and more checking of AI outputs. And the final responsibility still stays with a human clinician.

People ask this because AI now sounds certain, and headlines sound louder than hospitals. The main worry is not only jobs. It is safety and trust.

Many patients already feel uneasy about AI in clinical decisions. In a Pew Research Center survey, 60% of U.S. adults said they would feel uncomfortable if their provider relied on AI to diagnose disease and recommend treatments (39% comfortable) (Pew Research Center, Feb 22, 2023).

That tracks with other surveys too. A press-release summary of a ModMed patient survey reported 55% were uncomfortable with AI for diagnosis (Business Wire). And another dataset summarized by athenahealth says only 37% of patients are comfortable with AI diagnosing conditions (athenahealth).

Trust is the real bottleneck. A nationally representative survey press release from the Society for Risk Analysis reports only about 1 in 6 (17%) trust AI as much as a human expert to diagnose health problems (Society for Risk Analysis).

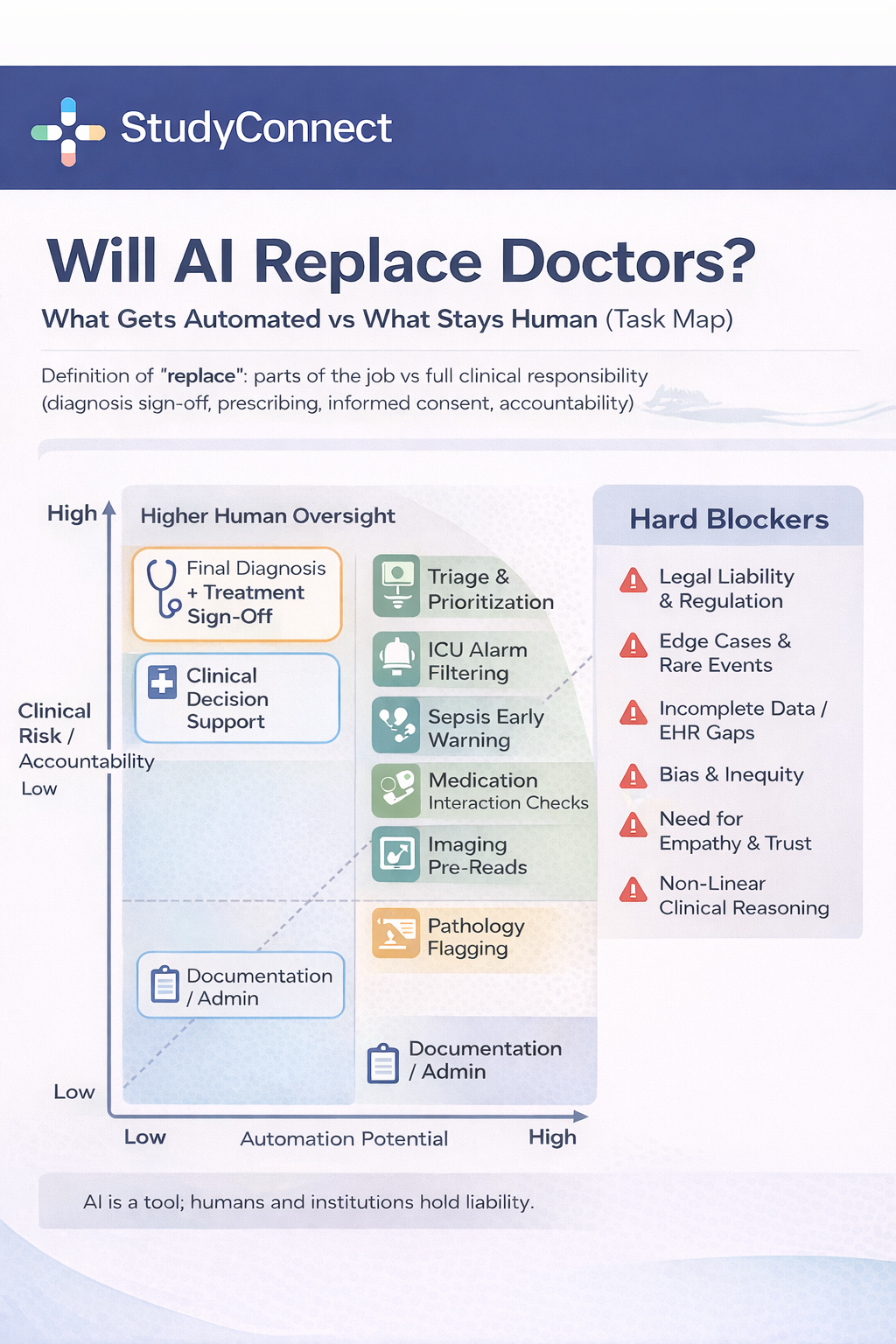

“Replace” is not one thing. It can mean:

- Replace a single task (write notes, flag abnormal results)

- Replace part of a workflow (triage, pre-reading images)

- Replace a whole role (rare and mostly administrative roles)

- Replace a whole specialty (very unlikely)

The setting matters too:

- ER: time pressure, messy symptoms, high liability

- Clinic: broad complaints, long history, shared decisions

- ICU: monitoring, alarms, rapid deterioration

- Home care: remote monitoring, adherence, escalation rules

Doctors will use AI the way they use labs, imaging, and guidelines: as input, not as the legal decision-maker. The most likely future is doctors + AI, because someone must collect context, examine the patient, weigh trade-offs, get consent, and take responsibility when things go wrong.

This matches what clinician-innovators keep saying. Shez Partovi (healthcare innovator and ex-neuroradiologist) put it plainly: “Do I think doctors are going to be out of a job? Not at all.” Harvard Health’s public stance is similar: “Will artificial intelligence replace my doctor? Not in my lifetime, fortunately!”

The next decade looks like this:

Likely in 5–10 years:

- Documentation support and coding support

- Inbox sorting and draft replies

- Imaging “second reader” and triage flags

- ICU monitoring support and alarm reduction

- Operational forecasting (beds, staffing, flow)

Unlikely in 5–10 years:

- AI independently diagnosing complex cases end-to-end

- AI choosing treatment without clinician sign-off

- AI handling end-of-life decisions

- AI being the accountable party for harm

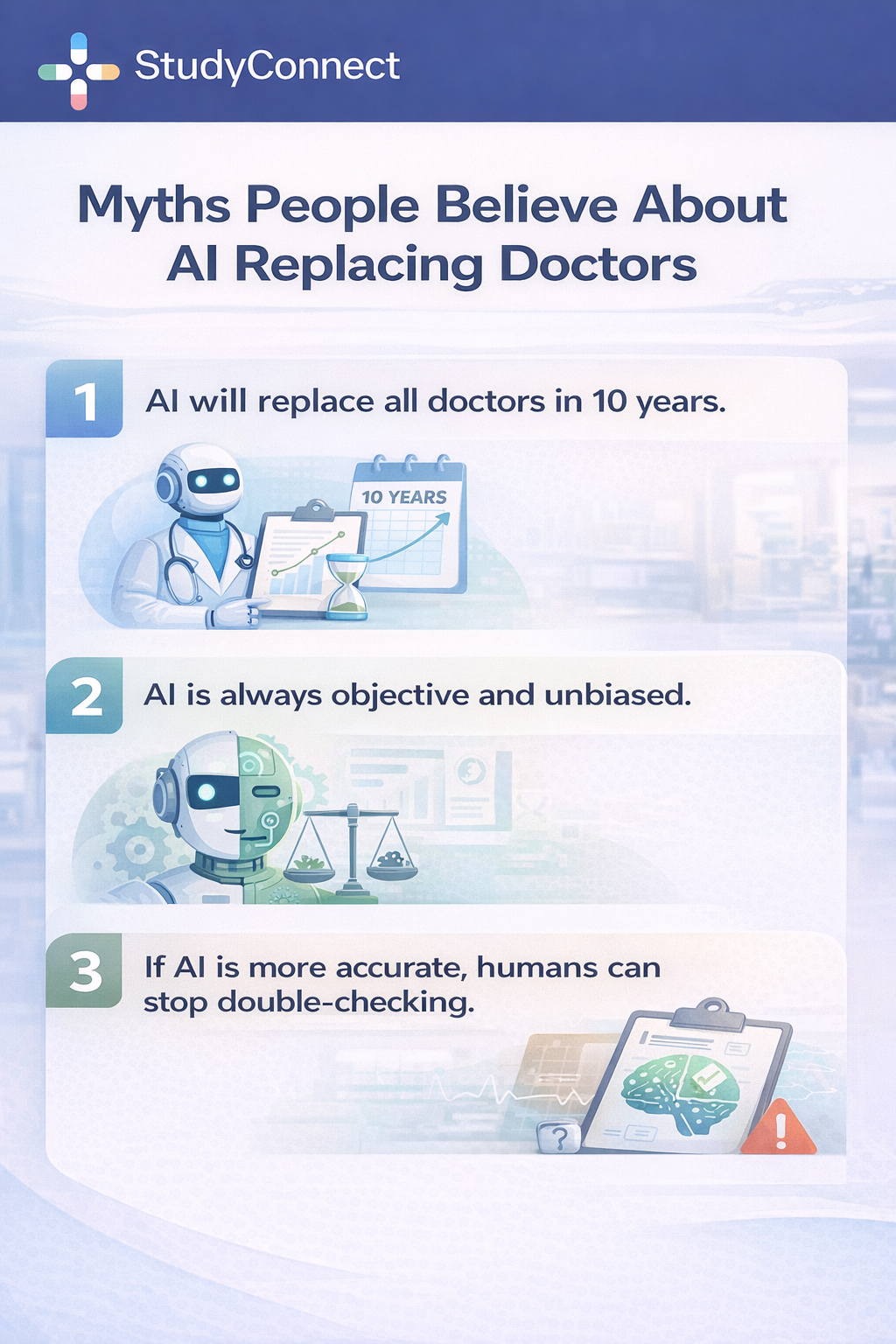

If you are searching “will AI replace doctors in 10 years,” the best honest answer is: AI will change daily practice, but not remove the need for physicians as accountable decision-makers.

Public predictions are often framed as certainty. Bill Gates has said AI will replace many doctors and teachers within 10 years and that humans may not be needed “for most things.” This draws attention, but it skips hospital reality: accountability, regulation, messy data, and patient trust.

Other well-known claims show the same pattern. Kai-Fu Lee has spoken about robots replacing large portions of jobs. Vinod Khosla predicted machines will substitute a large share of doctors. Geoffrey Hinton famously suggested we should stop training radiologists.

These views often underestimate:

- Liability: someone must be responsible

- Regulation: clearance, monitoring, audits

- Trust: patients are not ready for AI-only care

- Workflow reality: integration failures can erase model benefits

You can also see public confusion in everyday discussions. For example, this Reddit thread asks whether “ChatGPT Health” could reduce GP visits and replace basic care (Reddit discussion). The instinct is understandable, but it is not how safe clinical deployment works.

Where AI already helps in healthcare

AI works best where data is repetitive and outcomes can be checked.

1. Imaging support: Radiology and Pathology

AI is already used as a “pre-read” in many workflows.

One common example is lung nodule detection on CT. Studies and real deployments report around 94% sensitivity for pulmonary nodule detection, and some workflows show ~30% faster interpretation because the model pre-flags suspicious findings for the radiologist (often as FDA-cleared tools in narrow tasks).

Pathology is similar. Digital pathology AI can flag suspicious regions and improve consistency across large slide volumes. In my experience watching clinical teams trial these tools, the model is most useful when it reduces “search time,” not when it tries to be the final judge.

The key rule remains: AI highlights; the physician signs off.

2. Autonomous screening in narrow cases

This is one of the clearest examples of “narrow autonomy.” Autonomous diabetic retinopathy screening tools in the IDx-DR category have shown approximately 87% sensitivity and around 90% specificity for referable diabetic retinopathy in FDA-clearance–style studies and real-world deployments. The practical advantage lies in speed and access: screening can happen directly in a primary care clinic, results can be available the same day, and patients who need a specialist can be referred more quickly. Still, the scope remains narrow. These systems operate within an established care pathway with follow-up care, rather than functioning as standalone medicine.
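To see why the referral pathway matters, it helps to turn sensitivity and specificity into predictive values. The sketch below is only illustrative arithmetic: the 87% and 90% figures come from the paragraph above, while the 10% prevalence and the function name `predictive_values` are assumptions chosen for the example, not values from any study.

```python
def predictive_values(sensitivity: float, specificity: float, prevalence: float):
    """Return (PPV, NPV) for a screening test at a given disease prevalence."""
    # Expected fraction of the screened population in each outcome cell.
    true_pos = sensitivity * prevalence
    false_neg = (1 - sensitivity) * prevalence
    true_neg = specificity * (1 - prevalence)
    false_pos = (1 - specificity) * (1 - prevalence)

    ppv = true_pos / (true_pos + false_pos)  # P(disease | positive screen)
    npv = true_neg / (true_neg + false_neg)  # P(no disease | negative screen)
    return ppv, npv


# Sensitivity and specificity from the text; prevalence is an ASSUMED value.
ppv, npv = predictive_values(sensitivity=0.87, specificity=0.90, prevalence=0.10)
print(f"PPV: {ppv:.0%}, NPV: {npv:.0%}")
```

Under that assumed prevalence, roughly half of the positive screens would be true positives, while a negative screen would be right about 98% of the time. That is the profile of a test that sorts patients for follow-up, which is why these tools sit inside a referral pathway rather than replacing the specialist.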

What is autonomous screening (and how is it different from clinical decision support)?

Autonomous screening means the AI system can produce a screening result under a strict protocol for a specific condition, within a defined population, and it is closer to “test-like” behavior than “doctor-like” behavior. Clinical decision support (CDS) is different: CDS tools do not “decide.” Instead, they flag risk, suggest options, summarize data, and remind clinicians about guidelines. In both cases, the real safety question is scope. Autonomous screening can be safe when it is narrow, well validated, and paired with clear referral steps, while CDS can be helpful across many areas but is easier to misuse if people begin to treat suggestions like orders.

3. Early warning and risk prediction (sepsis, deterioration)

In hospitals, timing is everything.

Sepsis and deterioration models continuously monitor vitals, labs, and trends to flag patients at risk. Many systems report alerts 4–6 hours earlier than traditional rule-based triggers, which can create a meaningful window to assess the patient sooner.

Expert commentary also points to real deployments where a “sepsis watch” approach was associated with sizable mortality reductions (often cited as nearly 30% in some reports). The important detail is not the exact percentage. It is the mechanism: earlier attention and faster escalation.

The safety line is clear: AI prompts attention, not automatic treatment.

What is sepsis early-warning (predictive deterioration alerts) in hospitals?

A sepsis early-warning system is a predictive model that monitors incoming clinical data and produces a risk signal. It is designed to help care teams notice patient deterioration earlier than humans can, especially during busy shifts. These systems usually do not administer antibiotics or fluids; instead, they typically trigger an alert, suggest a checklist or evaluation, and help prioritize clinician attention. This is why they can improve care without “replacing” clinicians. The model functions like a radar, while the clinical team still interprets the signal, examines the patient, orders tests, and delivers treatment.
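The “radar, not pilot” idea can be made concrete with a small sketch. Everything here is hypothetical: the thresholds, the `Action` names, and the `triage_alert` function are illustrative assumptions, not the design of any deployed sepsis system. The point is only that the output space contains alerts and nothing that resembles a treatment order.

```python
from dataclasses import dataclass
from enum import Enum


class Action(Enum):
    # Note what is absent: no action orders antibiotics, fluids, or tests.
    NONE = "no alert"
    REVIEW = "flag for clinician review"
    URGENT = "page care team for bedside assessment"


@dataclass(frozen=True)
class Alert:
    patient_id: str
    risk: float
    action: Action


# Hypothetical cut-offs; real systems tune these against local data.
REVIEW_THRESHOLD = 0.3
URGENT_THRESHOLD = 0.6


def triage_alert(patient_id: str, risk: float) -> Alert:
    """Map a model risk score in [0, 1] to an alert level for humans to act on."""
    if not 0.0 <= risk <= 1.0:
        raise ValueError("risk must be between 0 and 1")
    if risk >= URGENT_THRESHOLD:
        action = Action.URGENT
    elif risk >= REVIEW_THRESHOLD:
        action = Action.REVIEW
    else:
        action = Action.NONE
    return Alert(patient_id, risk, action)


print(triage_alert("patient-001", 0.72).action.value)
```

Keeping treatment out of the enum is a design choice: whatever the model scores, the next step is a clinician looking at the patient.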

4. Documentation and admin automation (notes, coding, prior auth)

This is where AI can save the most time without touching clinical judgment.

Many pilots report:

- 30–40% less documentation time

- 1–2 hours saved per doctor per day

- Up to ~50% less after-hours charting (“pajama time”)

We also have a hard clinical publication with measured time change. In a JAMA Network Open evaluation of an ambient AI documentation platform (implemented April 2024; analyzed May–Sep 2024), mean time in notes per appointment decreased from 6.2 to 5.3 minutes (about 0.9 minutes saved per visit, P < .001) (JAMA Network Open). The study also reported significant improvements in cognitive load measures (NASA-TLX subscales) for note-writing mental demand, rushed pace, and effort (all P < .001).
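The per-visit figure is easier to interpret once it is scaled up. The sketch below uses the 6.2 and 5.3 minute values reported above; the 20 visits per day is an assumption for illustration only, and `time_saved` is a hypothetical helper name.

```python
def time_saved(before_min: float, after_min: float, visits_per_day: int):
    """Return (minutes saved per visit, relative reduction, minutes saved per day)."""
    saved_per_visit = before_min - after_min
    relative_reduction = saved_per_visit / before_min
    saved_per_day = saved_per_visit * visits_per_day
    return saved_per_visit, relative_reduction, saved_per_day


# 6.2 and 5.3 come from the study figures quoted above.
# visits_per_day=20 is an ASSUMED clinic load, not a number from the study.
per_visit, relative, per_day = time_saved(6.2, 5.3, visits_per_day=20)
print(f"{per_visit:.1f} min/visit, {relative:.1%} reduction, {per_day:.0f} min/day")
```

At the assumed clinic load this comes to about 18 minutes per day, a reduction of roughly 14.5% in note time per appointment. The metric covers only time in notes per appointment, so it is not directly comparable with the broader pilot figures listed earlier.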

This matches what many clinicians say privately: the first win is not “AI diagnosing.” It is “AI giving me my evening back.”

5. Medication safety checks (interactions, dosing flags)

Medication safety is a natural fit for context-aware checking. AI-supported medication systems can flag drug interactions, catch contraindications, and highlight dosing risks in elderly and ICU settings. Reported impacts vary, but some systems report 20–40% reductions in medication errors after implementing stronger checking and decision support. The main risk is alert fatigue, where too many low-quality warnings end up being ignored.

6. Nursing workflow support (alarm filtering and monitoring)

This section also answers the search query “will AI replace nurses.” In ICUs, traditional monitors create huge alarm volumes and many are false. AI-driven alarm filtering systems can reduce false alarms by up to ~70%, which reduces alarm fatigue and helps nurses focus on true emergencies.

But it does not replace bedside nursing. It reduces noise so humans can act faster.

7. Hospital operations

AI also helps behind the scenes. Common uses include predicting admissions and discharges, forecasting bed availability, making staffing recommendations for surge periods, and reducing emergency department boarding and wait times. Patients experience this as shorter waits and fewer “no bed available” delays. It is not glamorous, but it is real.

What AI cannot do?

AI is strong at patterns. Medicine is more than patterns.

Real diagnosis is iterative. The story changes as new facts appear.

A classic example from clinical storytelling is the “weird exposure” problem. Sometimes the key detail is not in the chart. It is in the patient’s life: travel, work, a new supplement, a chemical exposure, a family stressor, or something they did not think mattered.

This is why medicine is non-linear. You try, observe, revise, and sometimes reverse course. AI can help list possibilities. But it struggles with rare, context-heavy cases where the right question has not been asked yet.

1. Human trust and empathy (doctor-patient relationship)

Care includes consent, ethics, and difficult conversations such as breaking bad news, end-of-life planning, shared decisions under uncertainty, and understanding a patient’s values and risk tolerance. Even if AI were statistically accurate, many patients do not want AI-only care. Trust data shows this clearly: 60% of U.S. adults report being uncomfortable with AI-based diagnosis and treatment recommendations, according to Pew Research Center.

2. Physical care and hands-on skills (bedside and emergency actions)

Digital advice is not physical action. Doctors and nurses perform physical exams, procedures, emergency airway support, and rapid bedside reassessment when a patient crashes. Robotics can assist, but robots today are not autonomous clinicians; they are advanced instruments under human control. A blunt example makes this clear: an algorithm cannot perform the Heimlich maneuver, place a central line when anatomy is difficult, or manage a chaotic trauma room alone.

3. Legal accountability: Who is responsible when AI is wrong?

Today, AI is not a legal decision-maker. In most systems, primary liability rests with the clinician who signs the order or note. Shared liability can fall on the hospital or clinic if they deploy unsafe or unvalidated AI or fail to train staff properly. Vendor liability exists but is limited, though it is growing in cases where claims are false or safety limitations are hidden. A practical rule many clinicians follow is simple: if you cannot justify the decision without the AI, do not follow the AI. This legal structure is one of the biggest blockers to full replacement.

The World Health Organization has raised governance concerns about large multi-modal models in health, including limits of “general-purpose foundation models” and the need for oversight and responsible deployment. A useful reference is the WHO guidance: Ethics and governance of artificial intelligence for health: Guidance on large multi-modal models.

Will AI replace Radiologists, Surgeons, and Nurses?

Different specialties face different levels of automation pressure.

Radiology and Surgery: Why AI Is Augmenting, Not Replacing Clinicians

Radiology is often cited as the strongest case for AI replacing doctors, largely because image recognition is one of AI’s best skills. In practice, however, the shift is not toward “read by AI” but “read with AI.” AI tools can flag findings and speed up routine work, but radiologists provide essential context by comparing prior scans, integrating clinical history, and handling ambiguity, artifacts, and unusual cases. They also remain fully accountable for the final report. The real change is a redistribution of work: more focus on complex cases, quality control, protocol optimization, safety oversight, and, in some settings, more patient-facing communication.

Surgery raises similar fears, often driven by misunderstandings about surgical robots. These systems are tools, not automated surgeons. Near-term AI support in surgery includes video review and skill feedback, guidance prompts and checklist timing, and identifying missed details in surgical footage, as noted by clinicians such as Mitchell Goldenberg. Full automation is blocked by patient-specific anatomy, unexpected complications like bleeding, real-time judgment under uncertainty, and unresolved issues around liability and consent. Surgery is not just technical execution; it is decision-making under pressure.

Across both fields, AI is changing how clinicians work, not replacing them. Responsibility, judgment, and accountability remain human, while AI supports narrow, well-defined tasks where it performs best.

Nursing: bedside care, coordination, and human presence

Questions about whether AI will replace nurses miss the nature of nursing work. AI can support nursing by reducing false alarms (by as much as 70% in some ICU alarm-filtering systems), helping with documentation drafts, and monitoring trends and early warning signals. But AI cannot replace hands-on care, rapid bedside prioritization, patient advocacy, family communication, or real-time judgment during emergencies. Nursing is physical, relational, and continuous. AI is not.

Primary care and emergency care: context-heavy medicine

Generalist medicine is among the hardest areas to automate. Primary care and emergency medicine deal with broad, non-specific symptoms, incomplete histories, multi-morbidity and medication complexity, social and mental health factors, and shared decision-making under uncertainty. AI can help by summarizing information and supporting checklists, but full replacement is least realistic in these settings. For anyone asking “how will AI affect doctors” or “how will AI affect medicine,” the most accurate answer is that AI will standardize and speed up parts of care, while making human judgment, context, and communication even more valuable.

What Actually Changes for Doctors

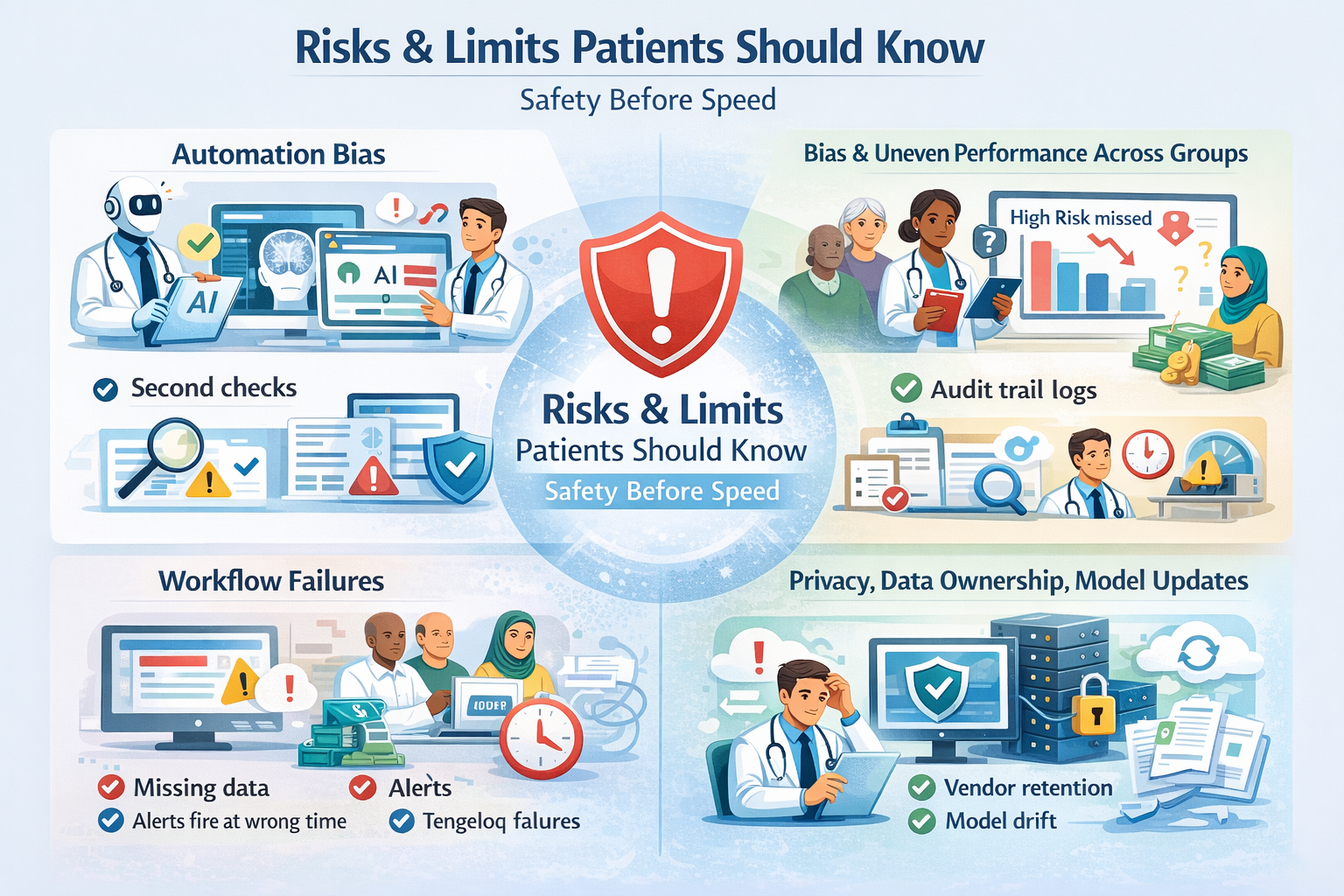

AI won’t replace doctors, but it will change how their time is spent. The first wave of impact is focused on administrative and support tasks rather than clinical authority. Tools are already being used to draft documentation, suggest billing and coding, prepare prior authorization packets, sort inbox messages, support triage decisions, flag findings in imaging, and surface guideline reminders or care gaps. In all of these cases, AI helps organize information and reduce friction, but the final decision remains with the clinician.

AI also creates new kinds of work. Automation doesn’t remove responsibility; it shifts it. Doctors increasingly need to review AI outputs, document when they override them, report tool failures and near misses, watch for performance drops or model drift, and explain AI-assisted decisions to patients in plain language. In real clinical settings, a significant amount of effort goes into safe use (training teams, defining policies, and handling exceptions), often more than teams initially expect.

Some aspects of medicine will never be fully automated because they are human by design. Complex judgment in ambiguous cases, ethical trade-offs, informed consent, trust-building, empathy-driven care, accountability when harm occurs, and hands-on emergencies all require human presence and responsibility. These are not edge cases; they are the core of clinical practice. The real change is not whether doctors are needed, but how they work. AI can assist, but clinicians decide, and accountability stays human.

How to use AI safely?

Safe AI is not about believing the model. It is about controlling the system.

Use this checklist before adoption:

- Validated outcomes: evidence shows it improves real outcomes, not only accuracy

- Right population: tested on patients like yours, not a different hospital or country

- Clear scope: what it does and does not do is written and enforced

- Uncertainty display: shows confidence and flags “I do not know”

- Easy override: clinicians can ignore it without friction

- Logging: every output is recorded for auditing

- Monitoring: ongoing performance tracking and bias checks

- Training: staff trained for automation bias and failure modes

- Escalation plan: what to do when it fails or conflicts with clinical judgment
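One way to make the checklist above enforceable rather than aspirational is to treat it as a gate that blocks go-live until every item is signed off. This is a minimal sketch, assuming a hypothetical `AdoptionChecklist` class whose field names simply mirror the bullets; it is not a standard or a regulatory artifact.

```python
from dataclasses import dataclass, fields


@dataclass
class AdoptionChecklist:
    """One boolean per checklist item; everything starts unverified."""
    validated_outcomes: bool = False
    right_population: bool = False
    clear_scope: bool = False
    uncertainty_display: bool = False
    easy_override: bool = False
    logging: bool = False
    monitoring: bool = False
    training: bool = False
    escalation_plan: bool = False

    def missing(self) -> list:
        """Names of the items that have not been signed off yet."""
        return [f.name for f in fields(self) if not getattr(self, f.name)]

    def ready(self) -> bool:
        """True only when every item has been signed off."""
        return not self.missing()


# Hypothetical tool that has evidence and scope, but no governance yet.
checklist = AdoptionChecklist(validated_outcomes=True, right_population=True, clear_scope=True)
print("Ready for go-live:", checklist.ready())
print("Still missing:", ", ".join(checklist.missing()))
```

Defaulting every field to `False` means a tool is blocked until someone actively confirms each item, which matches the idea of controlling the system instead of trusting the model.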

Regulation and oversight basics (FDA-style approvals, governance)

In plain terms: regulated clinical AI is treated like software as a medical device.

In the United States, many tools go through FDA pathways. A useful reference for what is already authorized is the FDA’s resource: Artificial Intelligence-Enabled Medical Devices.

In the UK and other settings, evidence frameworks are used to decide whether digital health tools have enough proof for adoption. One example is NICE’s Evidence standards framework for digital health technologies.

Strong oversight is one reason full replacement is unlikely soon. Approvals, audits, and post-market monitoring slow down unsafe autonomy.

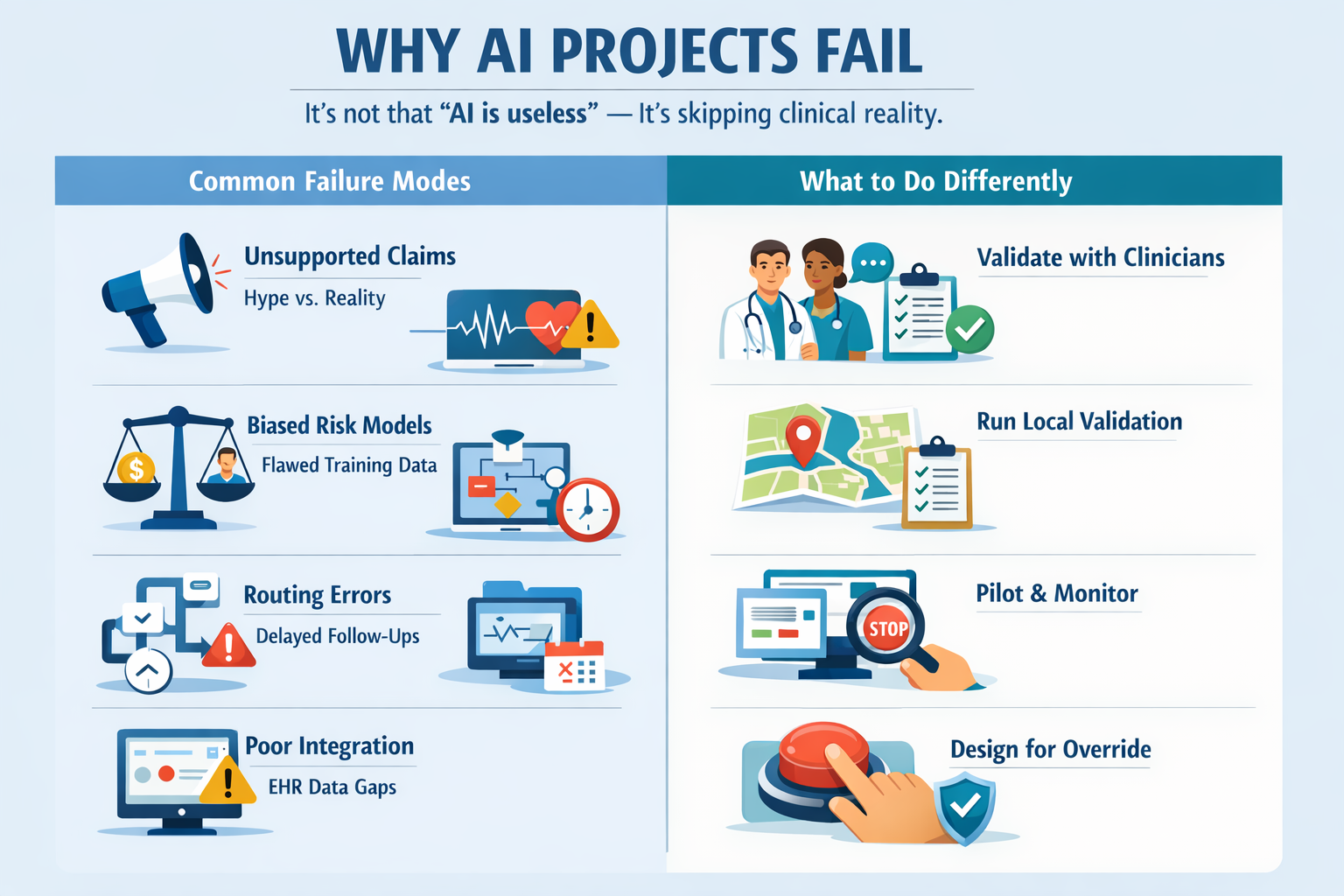

Prerequisites for Using Clinical AI (Building or Buying)

You don’t need a PhD to evaluate clinical AI, but you do need the basics. Whether you’re a clinician, health system, or startup, success starts with clarity, governance, and realistic expectations.

For clinicians and health systems, this means having a clearly defined clinical problem and a measurable outcome. Reliable data access and a real integration plan matter more than model sophistication. Governance cannot be optional: someone must own monitoring, updates, and incident response. Legal review is also essential, especially around liability and informed consent.

For founders and startups, focus wins. Strong clinical AI starts with a narrow use case that fits cleanly into an existing workflow. You need an evidence plan that goes beyond demos: retrospective analysis, prospective validation, and real-world monitoring. Regulatory pathways should be understood early, and clinician validation should happen before too much is built.

The teams that succeed treat AI as infrastructure, not magic.

Skills That Matter More for Doctors in an AI World

The most durable clinical skills remain human, but they are strengthened by AI literacy. Clinical reasoning, differential diagnosis, and communication don’t disappear — they become more important. So do ethics, informed consent under uncertainty, and systems thinking across workflows and handoffs.

Doctors also need practical AI fluency: understanding model limits, bias, automation bias, and drift — and knowing when not to use a tool. The rule that holds up in practice is simple: use AI to remove clerical load, not to outsource responsibility.

What Patients Should Expect from AI-Assisted Care

AI in healthcare is usually quiet, not dramatic. Patients may notice faster documentation, more standardized screening prompts, additional alerts or reminders, and more structured follow-up planning.

Patients should feel empowered to ask basic questions: Was AI used in this decision? What data did it rely on? Who reviewed the output? What are its limits? What happens if the clinician disagrees with it? If these questions can’t be answered clearly, the system isn’t mature.

Why Clinicians Still Decide & How Founders Should Build

Clinicians remain the decision-makers because they carry the duty, the risk, and the accountability. AI tools should be built to support that reality, not fight it.

The strongest products assist rather than replace. They show uncertainty, fit cleanly into EHR workflows, make overrides easy and logged, and include monitoring hooks for drift and bias. They also produce documentation that supports audits and liability review.

This is where many generic or freelance-driven solutions fall short. You can hire talent, but clinical correctness depends on domain expertise, validation design, and real-world workflow understanding.
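For founders, the “overrides easy and logged” requirement can be sketched as a small audit record. The field names, the `DecisionRecord` class, and the in-memory `audit_log` list are all hypothetical stand-ins; a real product would write to whatever audit store its EHR integration and compliance team require.

```python
import json
from dataclasses import asdict, dataclass
from datetime import datetime, timezone
from typing import Optional


@dataclass
class DecisionRecord:
    tool_name: str
    model_version: str
    suggestion: str
    confidence: Optional[float]  # None when the tool reports "I do not know"
    clinician_id: str
    accepted: bool
    override_reason: str = ""    # optional, so overriding stays low-friction


def log_decision(record: DecisionRecord, audit_log: list) -> str:
    """Append one JSON line per AI output, whether it was accepted or overridden."""
    entry = asdict(record)
    entry["logged_at"] = datetime.now(timezone.utc).isoformat()
    line = json.dumps(entry, sort_keys=True)
    audit_log.append(line)
    return line


def override_rate(audit_log: list) -> float:
    """Share of logged outputs that clinicians overrode: a crude monitoring hook."""
    if not audit_log:
        return 0.0
    overrides = sum(1 for line in audit_log if not json.loads(line)["accepted"])
    return overrides / len(audit_log)


audit_log = []
log_decision(DecisionRecord("nodule-flagger", "1.4.2", "flag: 6 mm nodule", 0.81, "dr-17", True), audit_log)
log_decision(DecisionRecord("nodule-flagger", "1.4.2", "flag: 4 mm nodule", 0.55, "dr-17", False, "artifact"), audit_log)
print(f"Override rate: {override_rate(audit_log):.0%}")
```

A rising override rate does not prove the model has drifted, but it is a cheap signal that someone should look, and the same log supports the audit and liability review mentioned above.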

How StudyConnect helps teams build safer AI

Many AI products fail because teams validate late.

StudyConnect is designed to help healthtech teams talk to the right experts early:

- Verified clinicians and medical researchers

- Feedback on clinical feasibility and workflow fit

- Bias risks and population coverage gaps

- Trial design and deployment constraints

- Practical regulatory expectations and safety monitoring

This reduces guesswork before you build, fundraise, or run a study. It also helps teams avoid preventable workflow failures that damage trust.

Have a question? Reach out at support@studyconnect.org.

Is AI going to replace doctors or make doctors redundant?

AI is going to replace some tasks that doctors do. It is not going to make doctors redundant.

The core blockers are accountability and trust. Patients still prefer human oversight, and clinicians remain legally responsible for decisions. That is why “AI-only medicine” is rare and narrow, while “AI-assisted medicine” is spreading.

If you want to explore how the public is thinking about this, these discussions are useful signals of confusion and expectations:

- Reddit: doctors replaced by AI in 10–20 years?

- Research: Investigating whether AI will replace human physicians

- YouTube: Doctoring in the age of automated diagnosis

The future is not AI versus doctors. It is doctors working with AI. AI will take more of the repetitive load: documentation, screening support, monitoring, and workflow optimization. The parts that remain human are the ones that define medicine: judgment under uncertainty, physical care, trust, and responsibility.