How Is AI Used in Healthcare: Facts & Emerging Trends

AI in healthcare is not a future idea anymore. It is already in hospitals, inside EHRs, and in daily clinical workflows. This guide shows where AI is used today, what works in real deployments, what fails in production, and how rules in the US and EU shape what you can safely build.

AI is spreading fast because healthcare is under pressure. Clinicians have more data than time, and health systems are looking for ways to reduce delays without reducing safety. In 2024, 71% of U.S. hospitals reported using predictive AI integrated into their EHRs, up from 66% in 2023. **Source**

This article breaks down where AI is used, what works, what fails, and what founders should do differently.

Why is healthcare using AI now?

Hospitals are dealing with rising patient demand, more chronic disease, and staffing gaps. Imaging volumes keep growing, and so do inbox work, documentation, and billing complexity. AI is often adopted for one reason: to reduce workflow friction where time matters.

Adoption is also uneven. Large urban hospitals are far ahead, while small and rural hospitals are often behind. One report notes that large urban hospitals can reach 80–90% AI usage, while small independent or rural hospitals often stay below 50%. It also notes that about 80% of hospitals use vendor-supplied AI modules bundled with leading EHRs. **Source**

Clinicians are also using AI tools directly. An AMA survey summary reported that 66% of U.S. physicians were using AI tools in their practice by 2024, described as a 78% increase from 2023. **Source**

At the organization level, another industry summary claims 86% of healthcare organizations report using AI extensively by 2025 and 94% view it as core to operations. **Source**

What does AI mean in healthcare?

AI in healthcare means software that learns patterns from data and helps people make decisions or complete tasks faster. It can read text, interpret images, and predict risk. But AI is not a clinician. It does not carry legal responsibility, and it does not understand patients the way humans do.

In real hospitals, AI is mostly used as support:

- It flags urgent cases.

- It suggests risk scores.

- It drafts documentation.

- It helps staff route work.

Even when the model looks “smart,” the safest deployments keep a human in charge.

Types of AI

ML, NLP, Computer Vision, Generative AI, Agentic AI

You will see the same few AI types repeated across most products:

Machine Learning (ML): Learns from data to predict outcomes (readmission risk, sepsis risk, no-show risk).

Natural Language Processing (NLP): Reads clinical text (notes, discharge summaries, pathology reports) to extract meaning.

Computer Vision: Reads images (X-ray, CT, MRI, ultrasound) to detect patterns and measure structures.

Generative AI: Produces text (draft notes, patient messages, summaries) and sometimes code or structured outputs.

Agentic AI: Plans and executes multi-step tasks (schedule → gather forms → submit prior auth → follow up) and needs strict safety limits.

Where AI is used today:

AI is used where work is repetitive, time-sensitive, or data-heavy. The strongest use cases fit into existing workflow steps. Below are the main workflow buckets, with concrete examples and limits.

Clinical Care: Imaging, Triage, Risk Prediction, and Surgery Support

Clinical AI works best when it targets a narrow decision point and produces an actionable output.

Medical imaging and diagnostics

What it does: Detects findings or measures anatomy on X-rays, CT, MRI, and ultrasound.

Example scenario: AI helps quantify cardiac function on MRI or flags suspicious nodules.

Value: Faster reads and more consistent measurements.

Limits: Site variation (scanner protocols) and dataset mismatch can reduce accuracy.

Urgent imaging triage (Aidoc)

What it does: Analyzes imaging and flags urgent findings for prioritization.

Example scenario: A radiology worklist is reordered so critical hemorrhage or pulmonary embolism is reviewed sooner.

Value: Reduces backlog risk and speeds urgent review in busy radiology services.

Limits: False positives can create alert fatigue; tight workflow integration is essential.

Adoption proof point: Used in more than 900 hospitals and imaging centers, as cited in expert guidance.

Learn more: Aidoc website (aidoc.com).
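The worklist reordering described for urgent imaging triage can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not how Aidoc or any other vendor implements it. The `Study` record and its fields (`accession`, `arrival_minute`, `urgent_flag`) are hypothetical. The sketch moves AI-flagged studies to the front and keeps arrival order within each group. It never removes a study, so the radiologist still reads everything.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Study:
    # Hypothetical fields, for illustration only.
    accession: str
    arrival_minute: int  # minutes since the start of the shift
    urgent_flag: bool    # AI suspects a critical finding (e.g. hemorrhage)


def reprioritize(worklist: list[Study]) -> list[Study]:
    """Return a new worklist with flagged studies first, then the rest.

    Within each group the original arrival order is kept. No study is
    dropped: the AI only changes the reading order, and a radiologist
    still reads and reports every study.
    """
    # False sorts before True, so "not urgent_flag" puts flagged studies first.
    return sorted(worklist, key=lambda s: (not s.urgent_flag, s.arrival_minute))


if __name__ == "__main__":
    worklist = [
        Study("A1", arrival_minute=0, urgent_flag=False),
        Study("A2", arrival_minute=5, urgent_flag=True),
        Study("A3", arrival_minute=7, urgent_flag=False),
        Study("A4", arrival_minute=9, urgent_flag=True),
    ]
    print([s.accession for s in reprioritize(worklist)])
    # → ['A2', 'A4', 'A1', 'A3']
```

Unflagged studies stay on the list and only move later in the order. This is what keeps a human in charge: a study the model misses is read later, not skipped.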

Stroke workflow alerts (Viz.ai)

What it does: Detects suspected large vessel occlusion on imaging and alerts stroke teams.

Example scenario: Faster escalation to a specialist and quicker transfer decisions.

Value: Time saved in stroke care can significantly reduce disability risk.

Limits: Requires strong clinical governance to avoid over-reliance on alerts.

Proof points: Treatment approximately 31 minutes faster on average (as cited in expert Q&A) and deployment across more than 1,600 hospitals.

Learn more: Viz.ai website (viz.ai).

Early disease detection from ECGs and scans

What it does: Identifies subtle signals that clinicians may miss in early stages.

Example scenario: Hidden heart disease patterns in ECGs or early cancer indicators in imaging.

Value: Earlier detection can shift care upstream and improve outcomes.

Limits: Requires careful validation and subgroup testing to avoid biased misses.

Robotic and AI-assisted surgery

What it does: Supports precision, planning, and consistency in surgical workflows.

Example scenario: Guidance overlays, motion scaling, or real-time detection of anatomy boundaries.

Value: Can reduce variability and support complex procedures.

Limits: Hardware constraints, training burden, and unclear reimbursement in some settings.

Operations: Documentation, AI Scribes, Coding, Scheduling, Billing, and EHR Support

Operational AI is often the fastest to adopt because the objective is straightforward: reduce administrative burden.

In 2023–2024, the fastest-growing predictive AI use cases in hospitals were operational rather than diagnostic.

According to HealthIT.gov, hospital predictive AI usage increased significantly from 2023 to 2024 in the following areas:

- Billing simplification and automation increased by 25 percentage points.

- Scheduling facilitation increased by 16 percentage points.

- Identifying high-risk outpatients for follow-up care increased by 9 percentage points.

Source: HealthIT.gov data brief on hospital trends in predictive AI use (2023–2024).

Key operational use cases include:

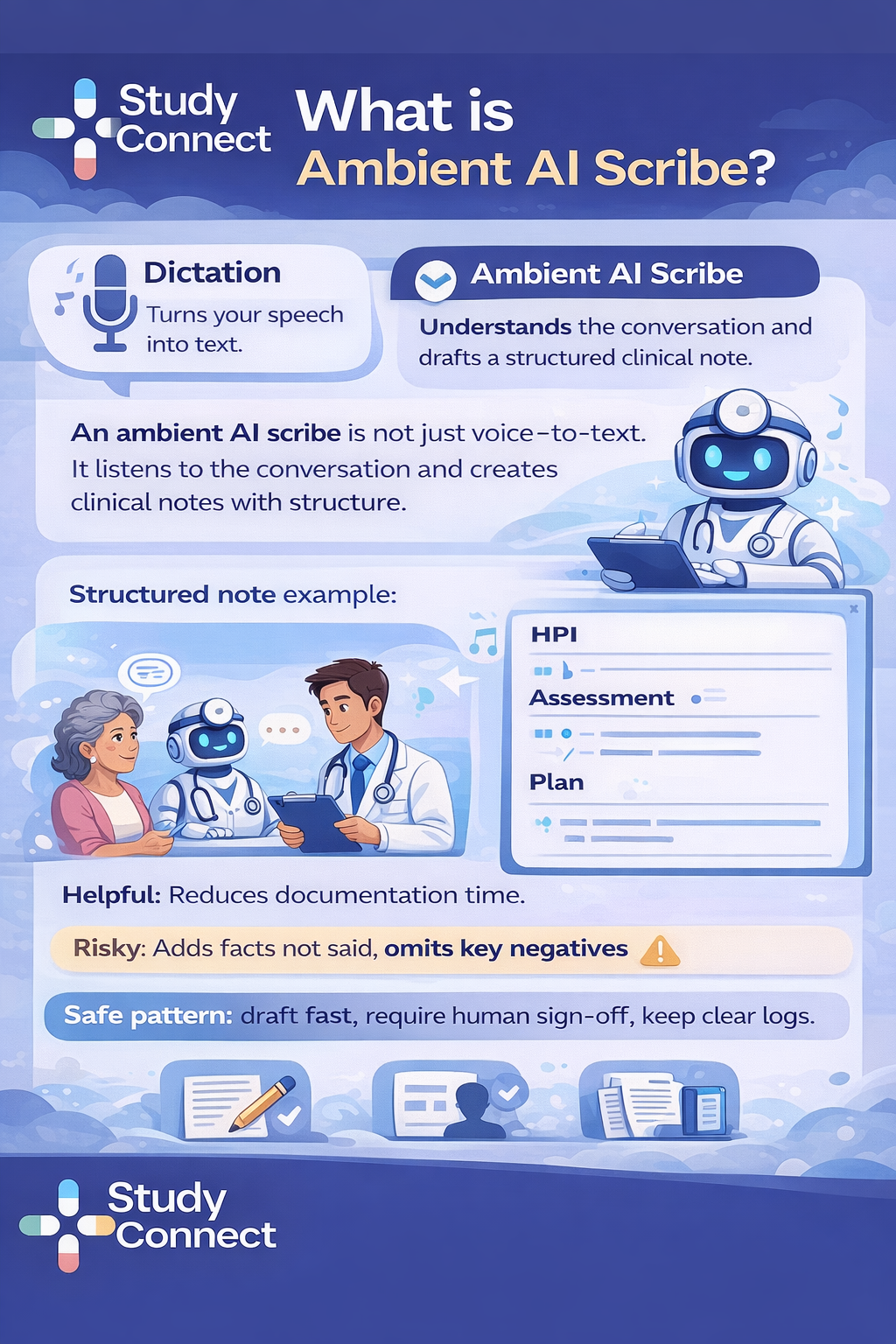

Ambient documentation and AI scribe tools

What it does: Listens to a clinical visit, drafts a note, and fills structured EHR fields.

Value: Reduces after-hours charting and speeds note completion.

Limits: Risk of hallucinated details, missing context, and privacy concerns if audio capture is poorly controlled.

Coding and billing support using NLP and rules

What it does: Suggests billing codes, flags missing documentation, and reduces claim errors.

Value: Fewer denials and less rework.

Limits: Incorrect suggestions can introduce compliance risk.

Scheduling optimization

What it does: Predicts no-shows, matches appointment slots, and balances clinic capacity.

Value: Improved utilization and shorter wait times.

Limits: Over-optimization can worsen access for complex or high-need patients.

Bed management and throughput

What it does: Predicts discharge likelihood and future bed demand.

Value: Better staffing decisions and smoother patient flow.

Limits: Prediction alone is not a plan; hospitals still require strong change management.

EHR assistance (search, summarization, routing)

What it does: Finds key labs, summarizes patient history, and drafts handoffs.

Value: Faster decision-making in data-heavy clinical charts.

Limits: Summaries can omit critical details; audit trails and clinician review are essential.

Research and Clinical Trials: Matching, Monitoring, Data Cleaning, and Document Automation

AI is already practical in trials, especially where work is repetitive and rule-based. The biggest wins are often in speed and data quality.

Common real uses today:

- Patient recruitment and matching from EHRs (eligibility screening at scale).

- Protocol optimization using historical and real-world data to reduce delays.

- Data management and cleaning (error detection, missingness checks).

- Real-time monitoring for safety signals and adverse event patterns.

- Predictive analytics for enrollment pace and trial success risk.

- Document automation for protocols, reports, and regulatory writing.

What is emerging but not scaled:

- Fully autonomous trial workflows (site setup → scheduling → monitoring)

- Digital twins for trial simulation

- Federated learning across institutions

- LLM-driven adaptive trial decisions

If you want a grounded view of what practitioners see, the thread “How are AI tools actually being used in healthcare?” is a useful reality check.

Patient-facing and Virtual Care: Chatbots, Remote Monitoring, and Virtual Wards

Patient-facing AI can expand access, but it needs strict safety boundaries. It should support navigation and escalation, not replace clinical judgment.

Chatbots and symptom checkers

Example scenario: Pre-visit intake, medication reminders, basic triage prompts.

Value: Faster access and less call-center load.

Limits: Must escalate red flags to humans and avoid “diagnosing.”
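Here is a minimal sketch of the “escalate red flags to humans” rule. It assumes a chatbot that receives free-text patient messages. The phrase list in `RED_FLAGS` is a hypothetical placeholder; a real list would come from clinical governance. Simple phrase matching is used here only to show the shape of the routing logic.

```python
# Hypothetical red-flag phrases for illustration only; a real list must be
# defined and maintained under clinical governance.
RED_FLAGS = (
    "chest pain",
    "can't breathe",
    "cannot breathe",
    "severe bleeding",
    "suicidal",
)


def route_message(text: str) -> str:
    """Return "human" if the message contains any red-flag phrase, else "bot".

    The bot never tries to answer a red-flag message; it only hands off.
    """
    # Lowercase and collapse runs of whitespace so "CHEST   pain" still matches.
    normalized = " ".join(text.lower().split())
    if any(flag in normalized for flag in RED_FLAGS):
        return "human"
    return "bot"


print(route_message("I have CHEST   pain since this morning"))  # → human
print(route_message("When is my appointment?"))                # → bot
```

Phrase matching also fires on negated text such as “no chest pain”. For this kind of router, that is the safer failure direction. An unnecessary handoff costs staff time, while a missed red flag can cost much more.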

Care navigation and appointment prep

Example scenario: “Here is how to prepare for a colonoscopy” or “Here are your next steps after discharge.”

Value: Better adherence and fewer avoidable errors.

Limits: Must be personalized carefully and protect PHI.

Remote monitoring and virtual wards

Example scenario: Wearables and home vitals devices trigger alerts when trends worsen.

Value: Earlier escalation and potential reduction in readmissions.

Limits: Alert fatigue, device accuracy problems, and privacy risk.

A broader global view is covered in **[7 ways AI is transforming healthcare](https://www.weforum.org/stories/2025/08/ai-transforming-global-health/)**, including access gaps and workforce constraints.

What works vs. what fails in real deployments?

Some AI tools scale to hundreds or thousands of hospitals. Many do not survive beyond pilots. The difference is rarely “model quality alone.” It is workflow fit, integration, and governance.

What works: patterns from proven tools (Viz.ai, Aidoc, imaging automation)

The best pattern is simple: a time-critical problem, a measurable outcome, human oversight, and tight integration.

What repeatedly works in production:

- **Clear time-sensitive workflow** (stroke, urgent imaging, critical findings)

- **Metric you can measure** (minutes saved, time-to-alert, turnaround time)

- **Human-in-the-loop** (AI flags, humans decide)

- **Strong integration** (PACS/EHR/worklists; no extra clicks)

- **Lifecycle monitoring** (drift checks and performance audits)

Proof points you can anchor on:

- Viz.ai reduced time to treatment by ~31 minutes on average.

- Viz.ai is deployed across 1,600+ hospitals.

- Aidoc is used in 900+ hospitals and imaging centers.

- Arterys Cardio AI is reported to automate cardiac MRI segmentation up to ~18× faster than manual processes.

You can see more context via the **[Arterys (now part of Tempus) ecosystem](https://www.arterys.com/)** and imaging AI landscape updates via the **[Butterfly Network site](https://www.butterflynetwork.com/)**.

If you want to verify what is authorized in the US, the FDA AI/ML-Enabled Medical Devices Database is one of the best starting points. It helps teams understand which claims have precedent and where regulators draw lines.

What fails: 5 common failure modes and why they happen

Most failures are predictable. You can often spot them before the first pilot ends.

1) Workflow misfit AI may be accurate but not usable. If it adds steps or arrives too late, clinicians ignore it. A qualitative study of hospital stakeholders found many AI initiatives focused on innovation or knowledge generation without a clear plan for real implementation after proof-of-concept, slowing adoption in practice (study text provided in your brief).

2) Poor data quality and EHR integration Healthcare data is fragmented and messy. Missing fields and inconsistent coding break models. The EHR research review highlights how missingness and heterogeneity can create bias and error if not handled carefully ([PMC article](https://pmc.ncbi.nlm.nih.gov/articles/PMC10938158/)).

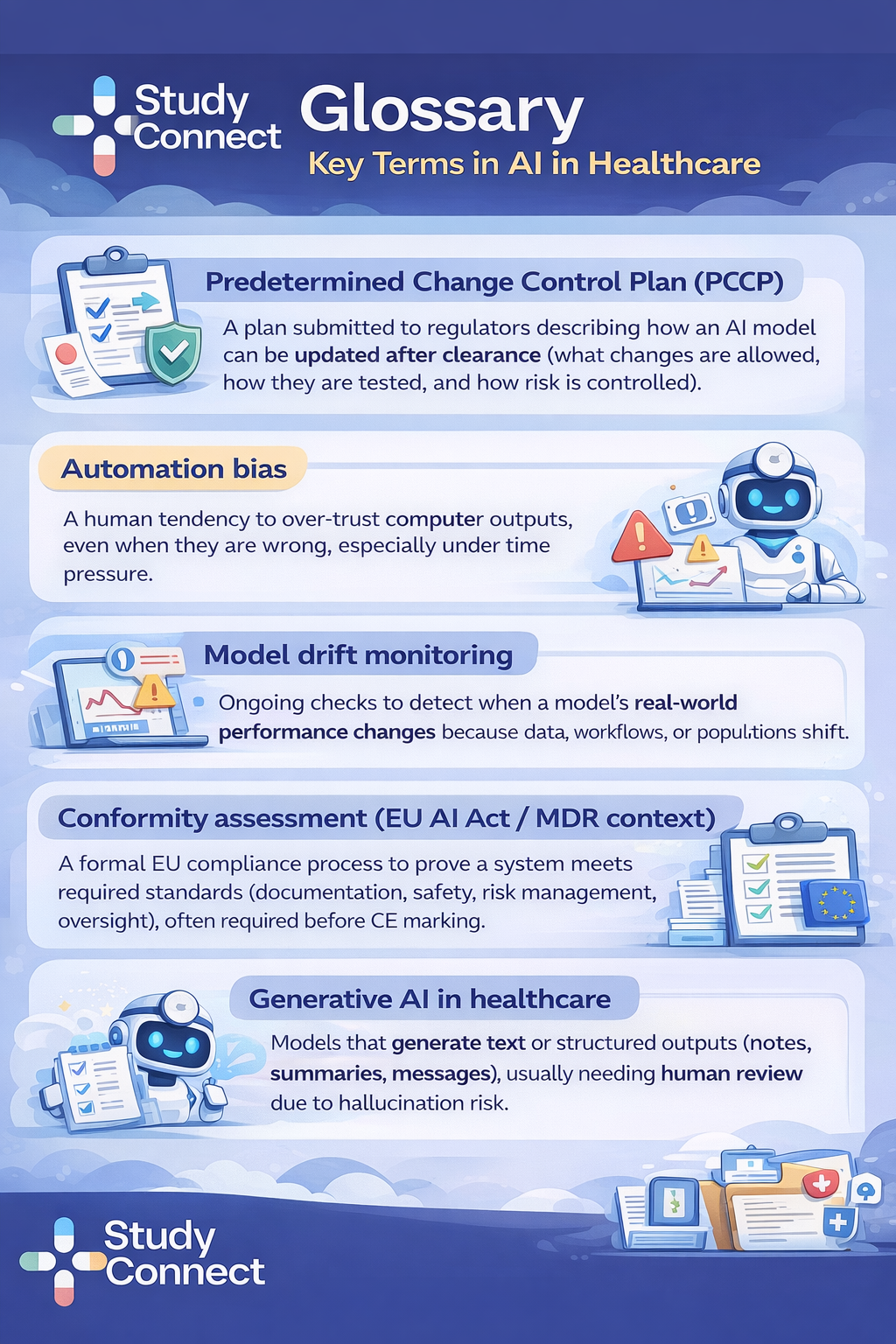

3) Automation bias / over-reliance People can over-trust confident outputs, especially under stress. This can cause unnecessary escalations or missed corrections if uncertainty is not shown.

4) Bias and poor generalization Models trained on limited populations can underperform in different sites and groups. That can worsen disparities and destroy clinician trust fast.

5) Insufficient clinical validation A model tested on curated datasets can fail in the real world. Prospective evaluation and site-specific calibration are often missing, especially in early-stage products.To keep your safety thinking sharp, it helps to follow real patient safety cases. The **[AHRQ Patient Safety Network (PSNet)](https://psnet.ahrq.gov/)** is a strong resource for how errors happen in real care environments.
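Bias and weak validation are easier to catch when performance is reported per subgroup instead of as one overall number. The sketch below computes sensitivity (the share of true cases the model catches) for each group. It then flags groups that trail the best group by more than a tolerance. The `(group, label, prediction)` record format and the 0.10 default for `max_gap` are assumptions for illustration, not a regulatory standard.

```python
from collections import defaultdict


def sensitivity_by_group(records):
    """Compute sensitivity (true-positive rate) per subgroup.

    Each record is a (group, label, prediction) tuple, where label and
    prediction are 1 for "condition present" and 0 otherwise. A group with
    no positive labels gets None, since sensitivity is undefined there.
    """
    tp = defaultdict(int)   # true positives per group
    pos = defaultdict(int)  # actual positives per group
    groups = set()
    for group, label, pred in records:
        groups.add(group)
        if label == 1:
            pos[group] += 1
            if pred == 1:
                tp[group] += 1
    return {g: (tp[g] / pos[g] if pos[g] else None) for g in sorted(groups)}


def flag_gaps(per_group, max_gap=0.10):
    """Return groups whose sensitivity trails the best group by more than max_gap."""
    valid = {g: s for g, s in per_group.items() if s is not None}
    if not valid:
        return []
    best = max(valid.values())
    return [g for g, s in valid.items() if best - s > max_gap]


records = [
    ("site_A", 1, 1), ("site_A", 1, 1), ("site_A", 1, 0), ("site_A", 0, 0),
    ("site_B", 1, 1), ("site_B", 1, 0), ("site_B", 1, 0), ("site_B", 0, 1),
]
per_group = sensitivity_by_group(records)
print(flag_gaps(per_group))  # → ['site_B']
```

The same pattern works for specificity, calibration, or alert rates. The key choice is to report every group separately so a strong average cannot hide a weak site or population.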

Quick red flags checklist (before you scale past a pilot)

If you see these gaps, slow down before rollout.

- Output is not actionable (“interesting score” but no action)

- No clear owner (who responds to the alert?)

- No baseline metric (cannot prove improvement)

- Integration requires extra steps outside the normal workflow

- No monitoring plan (no drift, no uptime, no audit logs)

- No subgroup performance testing (bias unknown)

- No escalation path (what happens when AI is unsure?)

- No downtime plan (what do users do when AI fails?)

- No training (users misinterpret outputs)

- No post-launch audit cadence (no learning loop)
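Several of these red flags (no escalation path, no downtime plan, outputs that look more certain than they are) can be addressed in how a score is turned into an action. This minimal sketch assumes a model that returns a risk score between 0 and 1, or `None` when the model is unavailable. The `low` and `high` thresholds and the action names are hypothetical.

```python
from typing import Optional


def route_score(score: Optional[float], low: float = 0.2, high: float = 0.8) -> str:
    """Map a model risk score to a workflow action.

    Thresholds are illustrative; in practice they would be set per site
    during validation and reviewed by the clinical owner.
    """
    if score is None:
        return "downtime_manual_workflow"  # model unavailable: use the pre-AI process
    if not 0.0 <= score <= 1.0:
        raise ValueError("score must be between 0 and 1")
    if score >= high:
        return "alert_owner"               # a named owner confirms or dismisses
    if score <= low:
        return "no_alert"
    return "uncertain_human_review"        # explicit "unsure" band, routed to a person


for s in (0.9, 0.5, 0.1, None):
    print(s, route_score(s))
```

The explicit middle band is the point. Instead of forcing every case into yes or no, the system tells users when it is unsure and who should look.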

Challenges of AI in healthcare:

Even when a model works, adoption can still fail. Hospitals move slowly for reasons that often make sense.

Common blockers:

- Fragmented data across systems and sites

- Weak interoperability and slow EHR integration cycles

- Procurement and security review timelines

- Clinical governance requirements (who approves, who monitors)

- Training and change management burden

- Unclear reimbursement pathways for some AI-enabled care

This is why “bundle AI inside the EHR” has been a big adoption driver.

As noted earlier, about 80% of hospitals report using vendor-supplied AI modules bundled with major EHR systems ([Intuition Labs PDF](https://intuitionlabs.ai/pdfs/ai-in-hospitals-2025-adoption-trends-statistics.pdf)).

Regulation and trust:

Regulation does not just slow teams down. It defines what you can claim, how you can update models, and what evidence you need. If you ignore this, your rollout plan will break later.

United States: FDA SaMD, 510(k)/PMA, PCCP updates, and lifecycle monitoring

If your AI is used for diagnosis, treatment decisions, or clinical decision support that impacts care, it may be regulated as Software as a Medical Device (SaMD).

Practical steps to think through:

1. Define the claim: What does the product do, and what action does it drive?

2. Decide if it is SaMD: Does it directly support diagnosis/treatment, or is it operational?

3. Pick the pathway: Many tools use 510(k) clearance (substantial equivalence). Higher-risk tools may need **PMA**.

4. Plan updates: If your model will change over time, you need a controlled update plan, such as an FDA Predetermined Change Control Plan (PCCP).

5. Monitor in production: FDA thinking emphasizes lifecycle oversight, not only pre-market testing.

A practical tool here is the FDA AI/ML-Enabled Medical Devices Database. It gives teams a real view of the device landscape and what kinds of AI claims have been authorized.

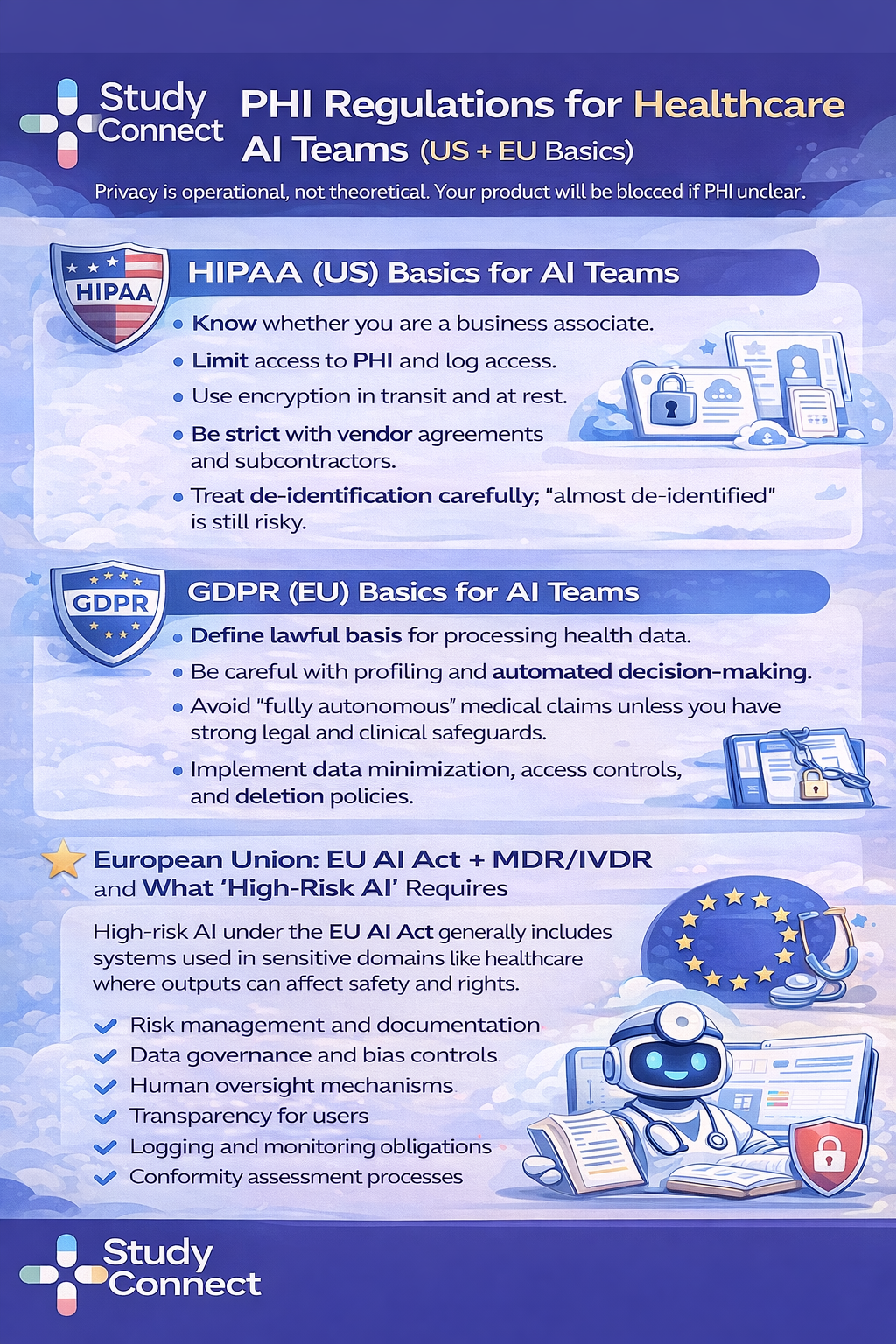

Privacy and data rules: HIPAA (US) and GDPR (EU) basics for AI teams

Future of AI in healthcare: What is next ?

The future will include more generative and agentic systems. But healthcare will still demand controls. The question is not “can it be built?” but “can it be trusted in practice?”

Generative AI in healthcare: documentation, patient messaging, and review support

Generative AI is useful when it drafts and summarizes. It is risky when it decides.

Helpful uses:

- Drafting visit notes and discharge summaries

- Summarizing long charts for review

- Drafting patient-friendly instructions

- Drafting trial documents (with expert review)

Risky uses:

- Making diagnoses without clinician oversight

- Writing medication changes automatically

- Sending patient messages without review in sensitive cases

Guardrails that are becoming standard:

- Retrieval with sources (RAG)

- Internal citations to record elements

- Red teaming and safety testing

- Human sign-off before clinical actions
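The “internal citations to record elements” guardrail can be enforced mechanically before a draft ever reaches a clinician. This sketch assumes a hypothetical citation format, `[ref:<record_id>]`, and a set of record IDs that the retrieval step actually returned. The naive sentence splitter is also a simplification.

```python
from __future__ import annotations

import re

# Hypothetical citation format: [ref:<record_id>], e.g. "[ref:lab-42]".
CITATION = re.compile(r"\[ref:([A-Za-z0-9_-]+)\]")


def check_draft(draft: str, record_ids: set[str]) -> list[str]:
    """Return a list of problems; an empty list means the draft may go to
    clinician review (it is never sent automatically).

    Rules in this sketch:
    - every sentence must cite at least one record element;
    - every cited ID must exist in the retrieved record set.
    """
    problems = []
    # Naive split: end of sentence is ".", "!" or "?" followed by whitespace.
    sentences = [s.strip() for s in re.split(r"(?<=[.!?])\s+", draft) if s.strip()]
    for sentence in sentences:
        cited = CITATION.findall(sentence)
        if not cited:
            problems.append(f"uncited: {sentence}")
        for ref in cited:
            if ref not in record_ids:
                problems.append(f"unknown ref {ref}: {sentence}")
    return problems


draft = ("Potassium was 5.9 mmol/L on admission [ref:lab-42]. "
         "Patient denies chest pain. "
         "Follow-up visit scheduled [ref:order-7].")
for problem in check_draft(draft, {"lab-42", "med-3"}):
    print(problem)
# uncited: Patient denies chest pain.
# unknown ref order-7: Follow-up visit scheduled [ref:order-7].
```

This check only proves that citations exist and point at retrieved records. It cannot prove that the cited element supports the sentence, which is why human sign-off stays in the loop.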

Agentic AI in healthcare: task automation across workflows (with safety limits)

Agentic AI systems plan and execute multi-step workflows. In healthcare, this can be powerful for operations, but it must operate with strict permissions and approvals.

Good candidate tasks:

- Scheduling sequences (book → remind → reschedule)

- Prior authorization preparation (collect docs → draft submission → route approval)

- Trial operations follow-ups (notify sites → track responses)

Non-negotiables:

- Permission boundaries (what the agent can and cannot do)

- Human approvals for clinical or financial decisions

- Audit trails for each step

- Safe fallbacks and downtime plans
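These non-negotiables can be implemented as a small gate between an agent and the systems it can touch. This is a minimal sketch with hypothetical action names:

- Allowed actions run.
- Approval-gated actions wait for a named human.
- Anything unknown is denied by default.
- Every request is written to an audit log.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Optional

# Hypothetical action names, for illustration only.
ALLOWED = {"send_reminder", "propose_slot", "draft_prior_auth"}
NEEDS_APPROVAL = {"submit_prior_auth", "cancel_appointment"}


@dataclass
class AgentGate:
    audit_log: list = field(default_factory=list)

    def request(self, action: str, approved_by: Optional[str] = None) -> str:
        """Decide whether one agent step may run, and log the decision."""
        if action in ALLOWED:
            decision = "run"
        elif action in NEEDS_APPROVAL:
            # Clinical or financial steps need a named human approver.
            decision = "run" if approved_by else "hold_for_approval"
        else:
            # Default deny: anything not explicitly permitted is refused.
            decision = "deny"
        # Audit trail: every request is recorded, including denials.
        self.audit_log.append({
            "time": datetime.now(timezone.utc).isoformat(),
            "action": action,
            "approved_by": approved_by,
            "decision": decision,
        })
        return decision


gate = AgentGate()
print(gate.request("send_reminder"))                                 # → run
print(gate.request("submit_prior_auth"))                             # → hold_for_approval
print(gate.request("submit_prior_auth", approved_by="scheduler_01"))  # → run
print(gate.request("change_medication"))                             # → deny
```

Default deny is the safe fallback. An action nobody anticipated, such as a medication change, is refused rather than attempted.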

Emerging but not scaled

These areas are promising but not standard in daily care yet.

Digital twins

- Promise: Simulate patient response to treatments.

- Why not scaled: Validation burden, cost, and data access issues.

Personalized treatment planning

- Promise: Tailor care using genetics, history, lifestyle.

- Why not scaled: Infrastructure gaps and unclear reimbursement.

Federated learning

- Promise: Learn across institutions without sharing raw data.

- Why not scaled: Operational complexity and governance.

Trial-specific emerging areas:

- Autonomous trial workflows

- Adaptive protocol decisions driven by LLMs

- Bias-aware subgroup outcome prediction

What founders should do differently

Founders often start with a model. Hospitals start with a workflow. The fastest path is to build around the workflow and prove measurable impact.

Start with a workflow map, not a model (demand-pull over tech-push)

A reliable build process is simple:

1. Pick one narrow decision point (one moment in the day).

2. Define the user (radiologist, nurse, scheduler, trial coordinator).

3. Define the action (what changes when the AI triggers?).

4. Define the metric (minutes saved, fewer denials, faster escalation).

5. Design the human-in-the-loop (who approves and who owns it?).

6. Plan integration early (EHR, PACS, worklists).

This directly addresses the failure pattern reported in hospital interviews: teams often create knowledge or proofs of concept without a clear path to practical implementation and adoption.

Operationalize trust before scaling

Trust needs operating discipline.

Pre-launch:

- Validation on realistic data (not only curated sets)

- Calibration at each site

- Subgroup performance checks

- Clinician training and usability testing

Post-launch:

- Drift monitoring dashboards

- Alert fatigue tracking

- Regular audits of false positives and false negatives

- Incident response playbooks (clinical + privacy/security)

- Scheduled reviews with clinical owners
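For drift monitoring, one widely used statistic is the Population Stability Index (PSI). It compares how model inputs or scores are distributed now versus at validation time.

PSI = sum over bins of (a - e) * ln(a / e), where e and a are the expected and actual share of cases in each bin.

The sketch below assumes both distributions have already been binned into counts over the same bins. The 0.1 and 0.25 cutoffs are informal rules of thumb borrowed from credit-risk modeling, not healthcare standards, and should be tuned per model.

```python
import math


def psi(expected, actual, eps=1e-6):
    """Population Stability Index between two binned distributions.

    `expected` and `actual` are counts per bin, using the same bins in the
    same order (e.g. risk scores at validation time vs. this month).
    `eps` keeps empty bins from causing log(0) or division by zero.
    """
    e_total, a_total = sum(expected), sum(actual)
    value = 0.0
    for e_count, a_count in zip(expected, actual):
        e = max(e_count / e_total, eps)
        a = max(a_count / a_total, eps)
        value += (a - e) * math.log(a / e)
    return value


def drift_status(value, warn=0.1, alert=0.25):
    """Map a PSI value to a status using rule-of-thumb cutoffs (tune per model)."""
    if value >= alert:
        return "alert"
    if value >= warn:
        return "warn"
    return "stable"


baseline = [25, 25, 25, 25]
this_month = [10, 20, 30, 40]
score = psi(baseline, this_month)
print(round(score, 3), drift_status(score))  # → 0.228 warn
```

In this example, scores spread evenly across four bins at validation shift toward the higher bins this month. That gives a PSI of about 0.23, which the sketch reports as `warn`. A dashboard would run this check on a schedule and route `warn` and `alert` results to the clinical owner.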

Use experts early to avoid expensive mistakes (clinical, trial, and regulatory)

I have repeatedly seen teams lose months because they validated the wrong problem, or built the right model for the wrong workflow. Early conversations with clinicians, trial investigators, and regulatory experts reduce that risk.

StudyConnect helps founders and healthtech teams find verified clinicians, researchers, and trial specialists early, so they can test assumptions before building or scaling. It is also a practical way to pressure-test evidence plans, workflow fit, and safety boundaries without relying on random outreach channels.

Contact the StudyConnect team at matthew@studyconnect.world to connect with the right medical advisor.

Final takeaway for founders:

When AI works best in healthcare

AI works best when it removes friction in a clear workflow and keeps humans accountable. If you treat it as a product feature instead of a clinical system, it will fail.

The 4-part rule:

- Reduce friction in a real workflow step

- Support experts instead of replacing them

- Respect regulation and privacy from day one

- Earn trust through validation, monitoring, and oversight

If you follow this, AI becomes a reliable tool. If you do not, it becomes an expensive pilot.

One-page adoption checklist (copy/paste)

Use case and workflow

- [ ] One narrow decision point identified

- [ ] User defined (role + setting)

- [ ] Output is actionable (clear action owner)

Value and metrics

- [ ] Baseline metric recorded (before AI)

- [ ] Success metric defined (time, errors, outcomes, cost)

- [ ] Pilot duration and rollout gates defined

Data and integration

- [ ] Data access approved (sources + permissions)

- [ ] EHR/PACS integration plan defined

- [ ] Downtime workflow documented

Validation and safety

- [ ] Realistic validation dataset chosen

- [ ] Prospective or in-workflow evaluation planned (when possible)

- [ ] Subgroup performance tested

- [ ] Automation bias mitigations designed (UI, training, uncertainty)

Privacy and regulation

- [ ] HIPAA/GDPR requirements mapped

- [ ] Vendor risk and contracts reviewed

- [ ] Regulatory path considered (SaMD / EU high-risk)

- [ ] Update plan defined (PCCP-style change control)

Monitoring and governance

- [ ] Drift monitoring plan live

- [ ] Audit logs enabled

- [ ] Incident response playbook ready

- [ ] Post-launch review cadence scheduled

Have a question? Reach us at support@studyconnect.org.