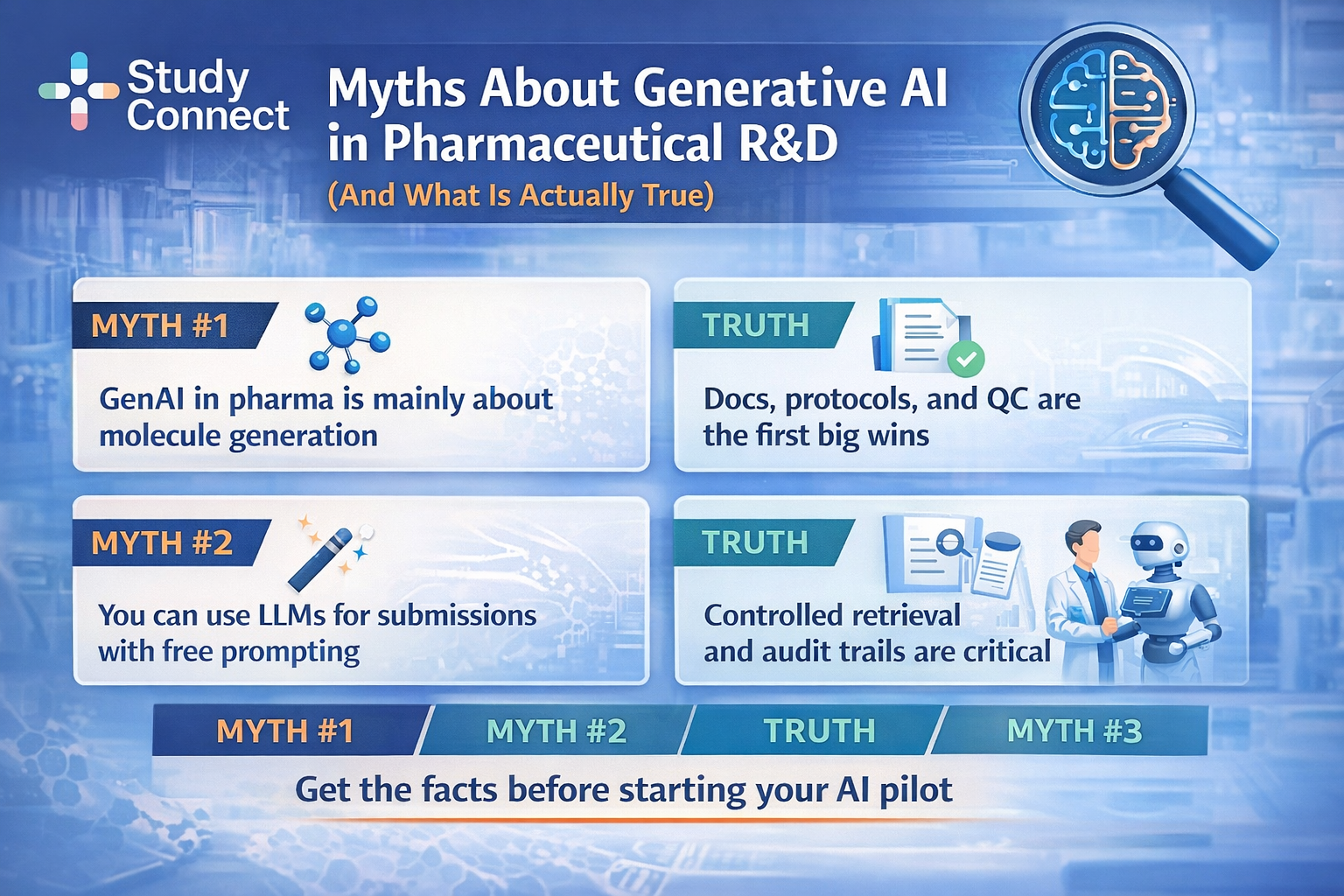

Generative AI use cases in pharmaceutical R&D

Generative AI is already reshaping pharmaceutical R&D, but not in the flashy, headline-driven way most people imagine. The real transformation isn’t about replacing scientists or automating discovery overnight. It’s showing up in quieter, more operational wins: faster protocol drafting, fewer review cycles, and fewer inconsistencies that surface too late in the process. When a single protocol iteration can be compressed from eight weeks to two, the downstream impact is enormous. Trial start timelines accelerate. Teams spend less time untangling avoidable errors. And R&D organizations gain something increasingly rare: speed without sacrificing rigor.

GenAI is no longer a lab experiment or a “pilot project.” It’s rapidly becoming workflow infrastructure, embedded into how modern clinical teams write, review, and operationalize complex documentation. Budgets are shifting, leadership attention is growing, and enterprise rollouts are already underway. The question is no longer whether pharma should explore GenAI. The real question is: How do we adopt it safely, responsibly, and compliantly in GxP environments? This blog explores exactly that.

The biggest bottleneck in clinical development is rarely the first draft. The real challenge is the repeated protocol revision cycles across clinical, operations, biostatistics, and regulatory teams. R&D timelines slip because of rework: protocol structure shifts after review, objective–endpoint misalignment is found late, key documents like the CSR, IB, and CTD drift out of sync, and copy-paste reuse introduces silent inconsistencies. What’s most exciting about GenAI is the near-term ROI in compressing these documentation-heavy cycles. When protocol iteration shrinks from eight weeks to two, teams can unblock downstream work earlier, from vendor setup and country feedback to site outreach and database planning, which meaningfully accelerates trial start timelines.

This is not theoretical. Leaders are investing because documentation and alignment are expensive, slow, and measurable.

A useful signal is budgeting intent. A Bain & Company survey reported that 40% of pharma executives said they were baking expected GenAI savings into their 2024 budgets, nearly 60% had moved beyond ideation into building use cases, and 55% expected multiple PoCs or MVP builds by the end of 2023. Source: Bain press release

Public messaging from large pharma is also consistent: GenAI is being used as drafting support plus QC support, not autonomous decision-making.

- Pfizer has confirmed GenAI use across R&D, including clinical design and documentation support themes.

- Novo Nordisk, through a Microsoft partnership, has discussed GenAI use in regulated documentation workflows with compliance guardrails.

- Merck, in collaboration with McKinsey, has described building GenAI tools to support authoring clinical-study documents.

Generative AI use cases across the pharma R&D lifecycle

GenAI works best when it drafts and checks, while humans decide. The reliable pattern is: structured inputs -> constrained generation -> automated QC -> human approval. Below are the highest-value use cases by R&D stage.

GenAI helps discovery teams explore hypotheses faster, but it must be paired with validation. This is where models can generate candidates and prioritize options. It is not where you should trust narrative reasoning without evidence.

Use case 1: Target hypothesis generation

In this use case, GenAI supports early-stage target discovery by combining evidence from omics datasets, pathway databases, phenotype data, curated scientific literature, and internal research reports. The model can generate structured target hypotheses with supporting evidence links, build “pro vs con” maps to assess target tractability, and draft experiment proposals for validation. The main impact is faster hypothesis triage and higher throughput of target rationale drafting. However, key risks include hallucinated biology and cherry-picking evidence, so strong controls are required—such as retrieval-only generation (RAG) over approved sources, mandatory citations for every mechanistic claim, and human review for all causal conclusions.

Use case 2: Hit triage

Here, GenAI helps teams interpret assay results, hit lists, compound annotations, and lab notebook context, including prior failures. It can summarize assay outcomes, suggest next experiments using structured templates, and draft decision memos explaining why certain hits are advanced or dropped. The impact is shorter “data to decision” cycles and more consistent documentation of experimental reasoning. To maintain traceability, outputs should be structured rather than free text, and every summary statement must link back to a specific data record. Tools like Benchling often serve as the system of record for experimental results and sample tracking, making structured lab context essential for reliable GenAI support.

Use case 3: De novo molecule/protein design

In de novo design workflows, GenAI can generate candidate molecules or protein sequences using inputs such as target structural information, binding site constraints, known actives and inactives, and ADMET priors. The goal is to accelerate design cycles and explore a larger candidate space with fewer wet-lab iterations. Public proof points include the GENTRL generative model study on DDR1 kinase, where novel inhibitors were designed in just 21 days, with multiple compounds validated in biochemical and cell-based assays and one showing favorable pharmacokinetics in mice.

AlphaFold’s downstream impact is also significant. It is not a GenAI system that drafts hypotheses or generates text, but it has fundamentally changed how drug discovery teams access structural priors by providing highly accurate protein structure predictions. In the CASP14 benchmark, AlphaFold2 achieved a median GDT_TS score of 92.4 across all targets compared to 72.8 for the next-best method, and in the especially challenging free-modelling category it scored 87.0 versus 61.0, demonstrating near-experimental accuracy. Source: AlphaFold CASP14 FAQ.

Even with these advances, validation pipelines remain mandatory; generation alone is not sufficient. AI-generated molecules or design proposals must be paired with physics-based evaluation, docking, and property prediction to ensure biological and chemical plausibility. Tools like Schrödinger are widely used as this validation layer: GenAI proposes candidates, and physics-based validation determines feasibility. AI-native companies such as Exscientia and Insilico have helped make these workflows more visible at the board level, even though most pharmaceutical organizations still adopt them cautiously and with strong controls.

Preclinical: study planning, ADMET summaries, and experimental reasoning support

Preclinical GenAI wins are most visible when teams use it to summarize evidence, reduce repetitive template work, and standardize study planning. In practice, the biggest value does not come from flashy molecule generation, but from improving traceability and accelerating documentation-heavy workflows. GenAI can help teams move faster by drafting structured content, but interpretation and final scientific judgment still require experienced toxicology and pharmacology review.

One of the strongest early applications is drafting study rationales from existing templates. Instead of starting from a blank page, teams can use GenAI to generate a structured first draft based on approved internal formats, while also pulling supporting statements from prior validated reports. Another high-impact area is ADMET and toxicology evidence summarization, where models can synthesize findings across multiple studies and produce structured risk tables that experts can review more efficiently. GenAI is also useful in generating study plan shells (objectives, design, endpoints, and sample handling procedures) while mapping them directly to internal SOP-aligned templates.

To make these workflows effective, organizations typically need the right inputs. This includes historical study reports and datasets, prior study plans and rationales, internal SOPs and templates, and compound or biologic profile summaries. Without these grounded materials, outputs become unreliable and difficult to defend in regulated settings.

The safest implementations rely on retrieval-grounded generation, where every output is traceable back to approved sources. Teams see the best results when they use RAG over validated internal documents, block unsupported claims entirely, and require citations for every scientific statement. Human review remains essential for interpretation and conclusion-level decisions, and strong governance requires logging prompts, source documents, and output versions to maintain audit-ready traceability.

Clinical development: protocol writing, feasibility narratives, and patient stratification

Clinical development is where GenAI often pays back fastest. That is because protocols and related documents drive many downstream activities. Fewer revision loops can mean earlier trial execution.

Use case 1: Protocol drafting aligned to ICH M11

GenAI is highly effective in creating structured protocol drafts aligned to ICH M11. When grounded in validated templates and prior protocols, it can generate a protocol shell and draft sections using approved internal language.

Inputs typically include:

- Target Product Profile (TPP)

- Prior protocols and endpoint libraries

- Inclusion/exclusion libraries

- Operational and country constraints

Where it helps:

- First structured draft generation

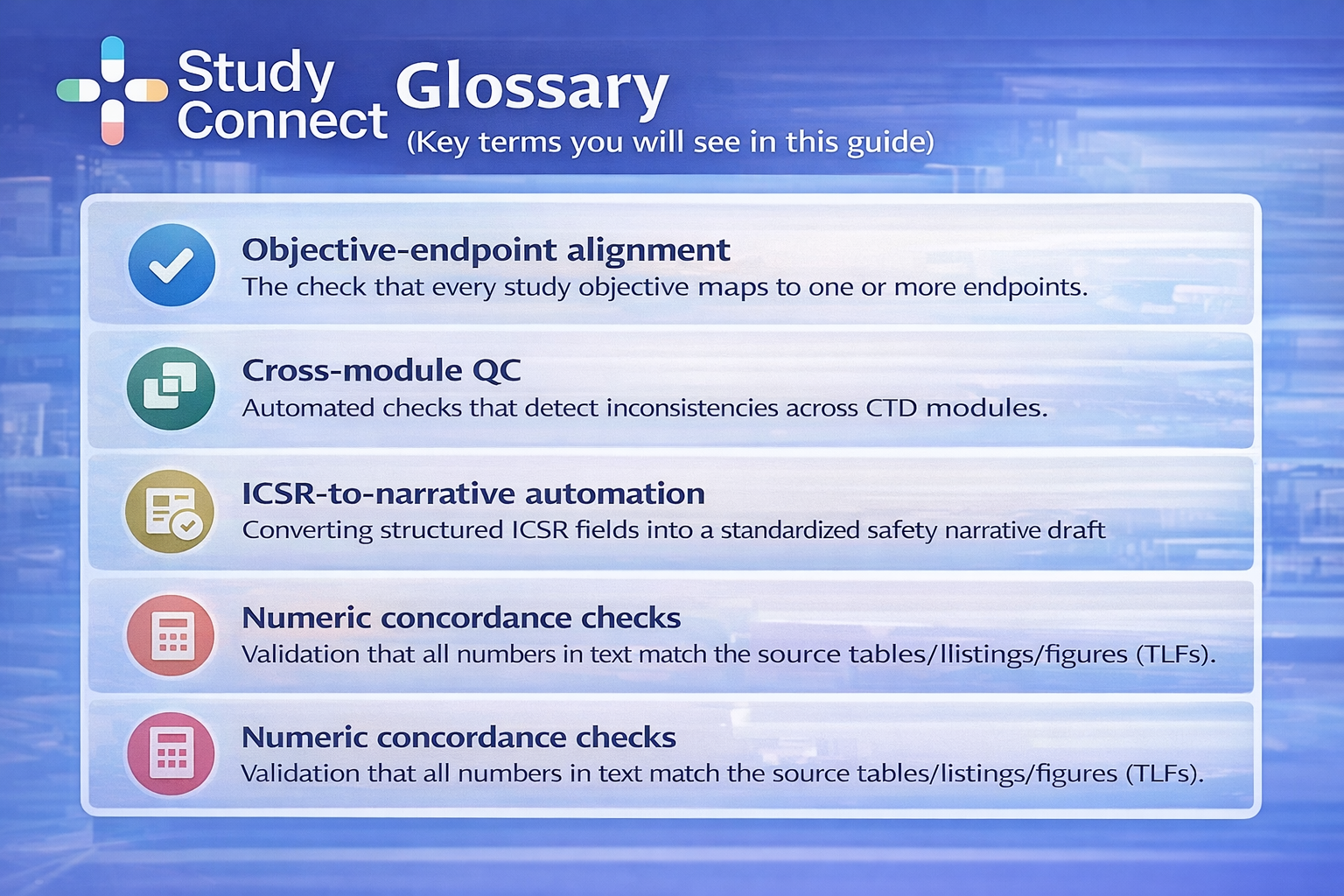

- Objective–endpoint alignment checks

- Improved section completeness

Controls: Constrained templates, automated consistency checks, and human ownership of final scientific intent.

Use case 2: Feasibility and site selection narratives

GenAI can draft feasibility narratives that explain why a trial design is enrollable across geographies, based on historical performance data and operational assumptions.

Inputs:

- Site performance and enrollment benchmarks

- Competitive landscape

- I/E constraints and visit burden

Impact:

- Faster narrative production

- Fewer feasibility iteration cycles

Controls: De-identification, privacy safeguards, and audit logs of data used.

Use case 3: Patient stratification (concept stage only)

GenAI can summarize biomarker hypotheses and prior subgroup signals to support early stratification discussions.

Inputs:

- Biomarker rationale

- Prior subgroup findings

- Operational assay constraints

Use cautiously: This is concept drafting only. Final analysis strategies must be owned by human statisticians.

GenAI adds the most value when it standardizes structure, reduces template work, and keeps outputs traceable, while humans retain accountability for interpretation and regulatory decisions.

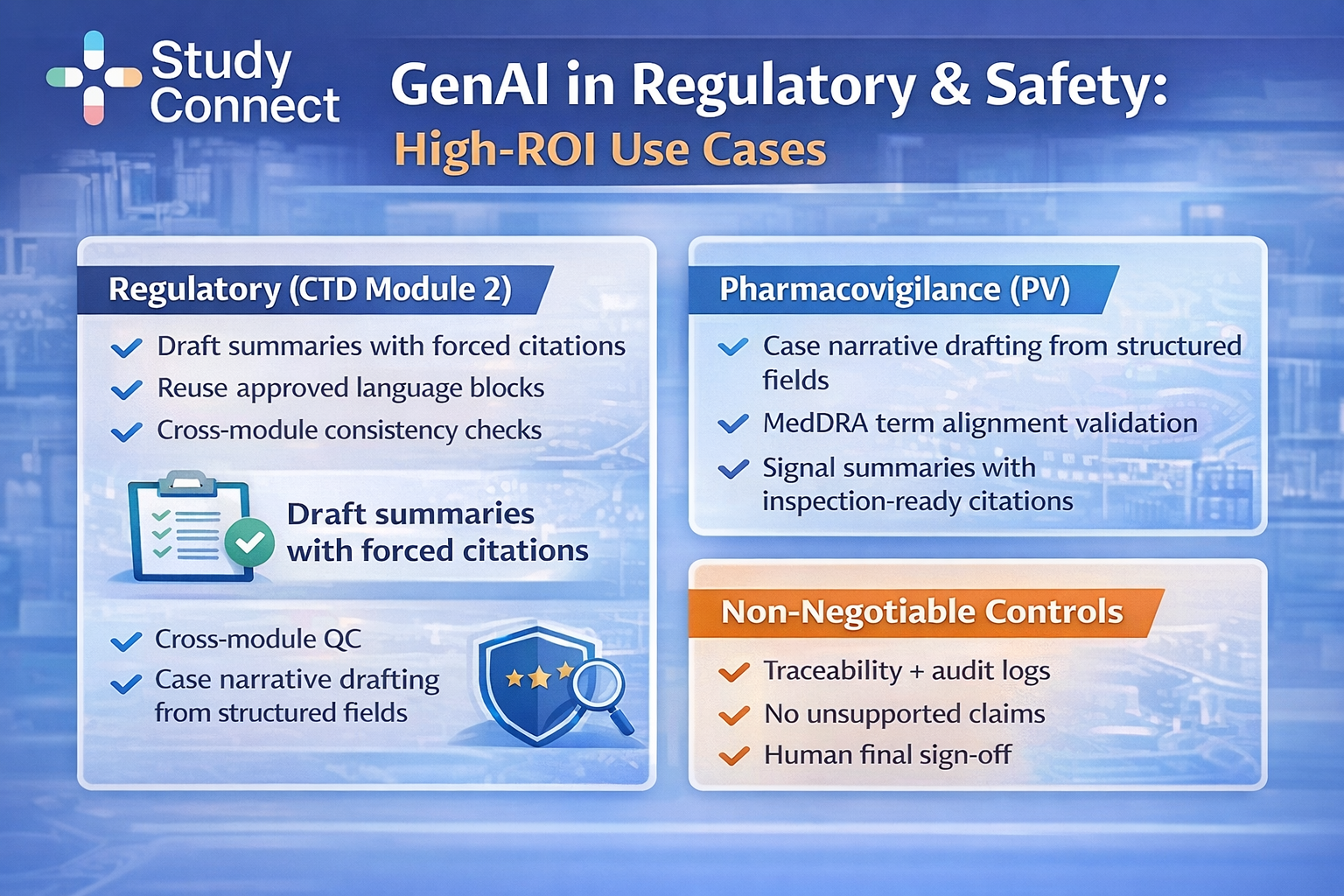

Regulatory: CTD Module 2 summaries, submission drafting, and cross-module QC

Regulatory writing is one of the highest-ROI areas for GenAI because workflows like CTD Module 2 drafting are repetitive and highly structured. The value comes from faster drafting and earlier detection of inconsistencies — but only when outputs are fully traceable.

High-impact regulatory use cases include:

- CTD Module 2 clinical and nonclinical summary drafting (with forced citations)

- Controlled reuse across IND/CTA/CSR/IB to improve consistency

- Cross-module QC to flag mismatches before review

Strong systems reduce review cycles. Weak systems create inspection risk by inventing unsupported claims. McKinsey has reported that companies applying AI to regulatory submission processes can achieve 30-40% reductions in document preparation effort and, in some workflows, process cost reductions of up to 50%, when drafting and structuring are accelerated with guardrails (as commonly cited in McKinsey discussions on GenAI-enabled document workflows). I agree with the direction of that claim, but teams should be skeptical of any single headline number: the biggest gains show up when you reduce review cycles and prevent inconsistency defects, not when you ask a model to "write better."

Controls that matter

- Traceability and audit logs for every claim

- Forced citations to approved internal sources

- Version control across drafts and review actions

- Enterprise automation note: platforms such as Yseop are used in regulated environments to automate generation and quality control for complex regulatory and clinical documents. The key is not the brand. It is the workflow pattern: templates, traceability, QC, and human approval.

Safety and PV: case narratives, signal summaries, and inspection-ready documentation

PV is where zero tolerance for invention becomes real. GenAI can draft narratives, but it must be locked to structured fields. If you cannot prove every statement, you should not ship it.

Use case 1: Case narrative drafting from ICSR fields

- Inputs: ICSR structured fields; MedDRA coding; Concomitant meds, labs, timelines.

- Where GenAI fits: Build an event timeline from fields; Draft narrative in a locked template.

- Impact metrics: Reviewer edit time reduction; Cycle time per narrative.

- Benchmark: Manual case narratives may take 30-60 minutes each (common operational baseline across PV teams). AI-assisted drafting can reduce drafting time, but still requires human oversight and final approval.

- Controls: Locked templates; Chronology validation; No new facts rule.

Use case 2: MedDRA alignment and consistency checks

- Where GenAI fits: Validate narrative terms match MedDRA-coded terms; Flag discrepancies between coded PT/LLT and narrative wording.

- Controls: Automated checks + human PV review; Clear escalation when mismatches occur.

Use case 3: Signal summary drafting

- Where GenAI fits: Summarize evidence with citations; Draft structured signal assessment notes.

- Controls: No causal language without statistical or clinical justification; Human safety physician sign-off.

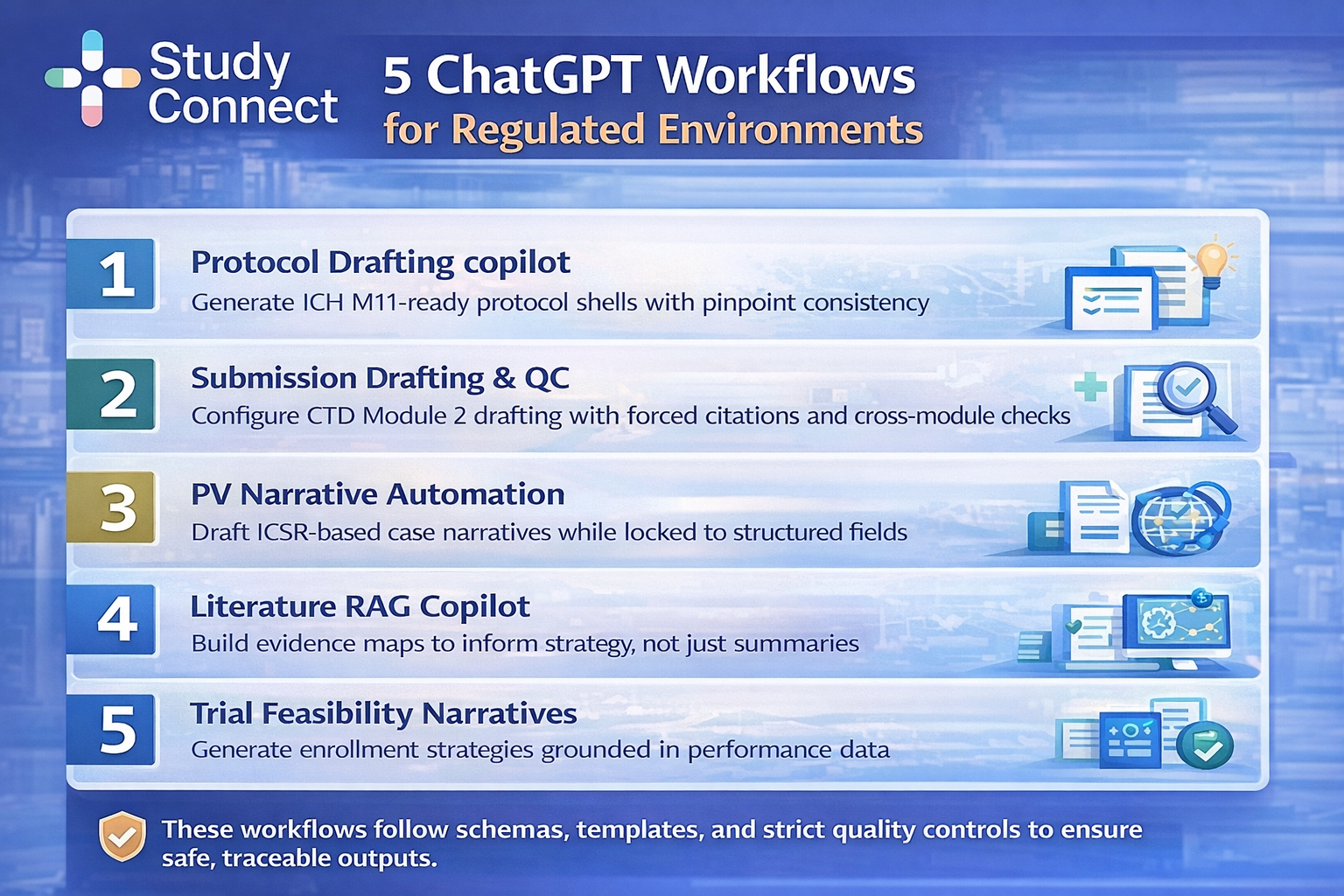

ChatGPT workflows

These workflows work because they are constrained. They do not rely on chatting. They rely on schemas, templates, and QC. I treat GenAI as a drafting accelerator and quality-control copilot, not a decision-maker.

Workflow 1: Protocol drafting copilot

You can get value fast when you generate a protocol shell correctly and reduce structural rework. The win is fewer alignment loops and fewer late surprises. This is one of the most reproducible GenAI workflows in clinical development.

- Inputs: TPP (target population, claims, differentiation); Indication, phase, region assumptions; Prior approved protocols; Endpoint library and I/E library; Schedule of Assessments patterns; Operational constraints (visit windows, vendors).

- Where GenAI fits: Generate an ICH M11-aligned shell; Draft sections using template language blocks; Produce an objective-endpoint alignment table; Flag mismatches (objective, endpoint, estimand hints, SoA).

- Benchmarks to track: % of required ICH M11 sections populated correctly; Objective <-> endpoint alignment accuracy; Inclusion criteria <-> assessment schedule consistency; % of AI content retained after human review; Number of major revision cycles required.
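The objective-endpoint alignment check in this workflow can be sketched as a small rule-based validator that runs on the structured draft before any human review. This is a minimal sketch, not a reference implementation: the `Objective` and `Endpoint` classes, their field names, and the example IDs are all hypothetical, and a real protocol model would carry far more detail (estimands, visit windows, populations).

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Objective:
    obj_id: str
    text: str

@dataclass(frozen=True)
class Endpoint:
    ep_id: str
    objective_id: str   # the objective this endpoint claims to measure
    assessment: str     # assessment expected in the Schedule of Assessments (SoA)

def alignment_issues(objectives, endpoints, soa_assessments):
    """Return human-readable flags; an empty list means no structural mismatch was found."""
    issues = []
    known = {o.obj_id for o in objectives}
    covered = {e.objective_id for e in endpoints}
    for o in objectives:
        if o.obj_id not in covered:
            issues.append(f"Objective {o.obj_id} has no endpoint")
    for e in endpoints:
        if e.objective_id not in known:
            issues.append(f"Endpoint {e.ep_id} points to unknown objective {e.objective_id}")
        if e.assessment not in soa_assessments:
            issues.append(f"Endpoint {e.ep_id} needs assessment '{e.assessment}' missing from SoA")
    return issues

# Illustrative data only; IDs and assessment names are made up.
objectives = [Objective("O1", "Evaluate efficacy"), Objective("O2", "Evaluate safety")]
endpoints = [Endpoint("E1", "O1", "HbA1c"), Endpoint("E2", "O3", "ECG")]
soa = {"HbA1c", "Vital signs"}

for issue in alignment_issues(objectives, endpoints, soa):
    print(issue)
```

The point of the design is that the check operates on structured fields, not on prose, so the same rules can be rerun after every revision and the count of flags can feed the alignment benchmarks listed above.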

Mini case note (what I see in practice): Repeated revision cycles are the real bottleneck, not the first draft. When you constrain drafting to templates and run consistency checks early, teams often cut structural revision loops by around half. The practical goal is compressing the cycle, like moving from an 8-week iteration pattern toward 2 weeks for a structured draft and first alignment pass, depending on governance maturity.

What is an ICH M11-ready protocol structure?

An ICH M11-ready protocol structure means your protocol follows a standardized, regulator-friendly organization. It is designed so sections are consistent, reusable, and easier to review across teams and regions.

In practice, "M11-ready" also means your content is more machine-checkable:

- Objectives, endpoints, estimands, and assessments are clearly mapped

- Reused language blocks are controlled

- Cross-references are stable enough for QC tools to validate

This matters for GenAI because a model performs best when it fills a known structure. It reduces free-text creativity and increases consistency, which is what regulated work needs.

Workflow 2: Submission drafting and QC

Module 2 drafting is where most teams feel immediate time pressure. GenAI can accelerate summaries, but only when every claim is traceable. This workflow is about drafting fast, proving everything, then running QC.

- Inputs: CSRs, nonclinical reports, SAP outputs; Approved language libraries; CTD section templates; Reference libraries and internal document IDs.

- How to do it safely: Retrieve relevant source passages (approved only); Draft each subsection with forced citations; Run cross-section validators (objective <-> endpoint <-> SAP mismatch checks; dose/regimen consistency checks; safety statement drift checks across IB and CSR); Human review and sign-off; Store prompt, sources, outputs, and reviewer actions.

- Success metrics: 100% traceable claims to source documents; Reduced reviewer edits per 1,000 words; Reduced cross-module inconsistencies discovered late; Zero fabricated references (non-negotiable).
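One of the cross-section validators mentioned above, the dose/regimen consistency check, might look like the following. It is a rough sketch under stated assumptions: the regular expression, the unit list, and the document labels are illustrative choices, and a production validator would work from structured dosing fields rather than free text wherever possible.

```python
import re
from collections import defaultdict

# Assumed pattern: a number followed by a small set of units. Extend as needed.
DOSE_PATTERN = re.compile(r"(\d+(?:\.\d+)?)\s*(mg|mcg|g|mL)\b", re.IGNORECASE)

def extract_doses(text):
    """Return the set of (value, unit) dose mentions found in a passage."""
    return {(float(value), unit.lower()) for value, unit in DOSE_PATTERN.findall(text)}

def dose_mismatches(sections):
    """Map each dose that is not stated in every document to the documents missing it."""
    seen = defaultdict(set)
    for label, text in sections.items():
        for dose in extract_doses(text):
            seen[dose].add(label)
    all_labels = set(sections)
    return {
        dose: sorted(all_labels - labels)
        for dose, labels in seen.items()
        if labels != all_labels
    }

# Illustrative passages only.
sections = {
    "CSR": "Subjects received 10 mg once daily.",
    "IB": "The recommended dose is 10 mg once daily.",
    "Module 2.5": "Patients received 20 mg once daily.",
}
for dose, missing_in in dose_mismatches(sections).items():
    print(dose, "not stated in", missing_in)
```

A flag here is a prompt for a human to look, not a verdict: different documents can legitimately mention different doses, so the output belongs in a reviewer queue rather than an automatic rejection.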

What is forced-citation RAG for submission drafting?

Forced-citation RAG is retrieval-augmented generation where the system requires a citation for every meaningful claim. If a sentence cannot be linked to a retrieved approved source, it should not be generated or it should be flagged.

This is different from normal prompting because it changes the default behavior:

- The model is not answering from memory

- It is writing from retrieved evidence, with a visible audit trail

In regulated submission drafting, this is the simplest rule that improves safety: If it is not traceable, it does not ship.
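The "not traceable, does not ship" rule can be enforced with a post-generation check like the one below. This is a simplified sketch: the `[DOC-123]` citation format, the naive sentence splitter, and the allowlist contents are assumptions for illustration, and the check only confirms that a citation is present and allowed, not that the cited passage actually supports the claim.

```python
import re

# Assumed citation marker format, e.g. "[CSR-001]". Adapt to your document ID scheme.
CITATION = re.compile(r"\[([A-Z]+-\d+)\]")

def uncited_sentences(draft, allowlist):
    """Return sentences that carry no citation or cite a source outside the allowlist."""
    flagged = []
    # Naive split on sentence-ending punctuation followed by whitespace.
    sentences = [s.strip() for s in re.split(r"(?<=[.!?])\s+", draft) if s.strip()]
    for sentence in sentences:
        cited = set(CITATION.findall(sentence))
        if not cited or not cited <= allowlist:
            flagged.append(sentence)
    return flagged

# Illustrative draft and allowlist.
draft = (
    "The primary endpoint was met [CSR-001]. "
    "Tolerability was excellent. "
    "Half-life was 12 hours [PK-999]."
)
approved_sources = {"CSR-001", "NC-014"}

for sentence in uncited_sentences(draft, approved_sources):
    print("FLAG:", sentence)
```

In this example the second sentence is flagged for having no citation and the third for citing a source that is not on the allowlist. Whether flagged sentences are deleted or routed to a reviewer is a policy decision for the SOP.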

Workflow 3: PV narrative automation

PV narratives are structured and repetitive, which makes them automatable. They are also high-risk if you allow the model to invent details. This workflow must be template-locked and field-grounded.

- Inputs: ICSR structured fields; MedDRA-coded terms; Key dates, labs, concomitant meds; Reporter verbatims (if applicable).

- Safe workflow: Extract required fields into a fixed schema; Build a chronological timeline; Generate narrative using a locked template; Validate (chronology matches dates; MedDRA terms match coded PT/LLT; no new facts introduced); Human PV reviewer approval.

- Benchmarks: Chronology accuracy rate; MedDRA consistency pass rate; Reviewer edit time per narrative; Fabrication rate must be zero.

- A practical baseline: many teams spend 30-60 minutes per narrative. AI can reduce drafting time, but only if the review step remains mandatory.
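To make "template-locked and field-grounded" concrete, here is a minimal sketch that renders a narrative purely from structured fields. The template wording, field names, and the single chronology rule are all hypothetical; real ICSR data has many more fields and legitimate edge cases (for example, pre-existing conditions) that a single date comparison would not handle.

```python
from datetime import date
from string import Template

# Locked template: the only free variables are structured case fields.
NARRATIVE = Template(
    "A $age-year-old $sex patient started $drug on $start_date. "
    "On $onset_date, the patient experienced $event_pt. Outcome: $outcome."
)

def validate_case(case):
    """Return chronology problems found in the structured fields."""
    problems = []
    if case["onset_date"] < case["start_date"]:
        problems.append("event onset precedes drug start")
    return problems

def render_narrative(case):
    """Fill the locked template; substitute() raises KeyError if a required field is missing."""
    problems = validate_case(case)
    if problems:
        raise ValueError("; ".join(problems))
    fields = {k: (v.isoformat() if isinstance(v, date) else str(v)) for k, v in case.items()}
    return NARRATIVE.substitute(fields)

# Illustrative case; values are made up.
case = {
    "age": 54,
    "sex": "female",
    "drug": "Drug X",
    "start_date": date(2024, 3, 1),
    "onset_date": date(2024, 3, 9),
    "event_pt": "Rash",
    "outcome": "recovered",
}
print(render_narrative(case))
```

Because every word outside the placeholders is fixed, the "no new facts" rule holds by construction for this baseline. If a model is later allowed to smooth the wording, its output can be compared against this deterministic rendering before it reaches the PV reviewer.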

What is MedDRA term consistency validation in PV narratives?

MedDRA term consistency validation checks that the narrative text matches the coded safety terminology. If the ICSR is coded with a specific Preferred Term (PT), the narrative should not drift into different terms that change meaning.

This matters because inconsistency creates inspection risk:

- It can look like the narrative is describing a different event

- It can confuse signal detection and case reconciliation

A simple implementation is: detect event terms in narrative -> compare them to coded MedDRA fields -> flag mismatches for PV reviewer review.
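That detect, compare, flag sequence could be sketched as follows. The tiny `event_lexicon` is a stand-in for illustration only; it is not MedDRA content, and a real implementation would draw terms from the organization's licensed dictionary and handle synonyms and LLT-to-PT mapping, which simple string matching does not.

```python
import re

def mentions(term, text):
    """True if the term appears in the text as a whole word or phrase, ignoring case."""
    return re.search(rf"\b{re.escape(term)}\b", text, re.IGNORECASE) is not None

def meddra_mismatches(narrative, coded_pts, event_lexicon):
    """Compare event terms found in the narrative against the coded Preferred Terms."""
    coded = {pt.lower() for pt in coded_pts}
    uncoded = sorted(
        term for term in event_lexicon
        if mentions(term, narrative) and term.lower() not in coded
    )
    absent = sorted(pt for pt in coded_pts if not mentions(pt, narrative))
    return {"in_narrative_not_coded": uncoded, "coded_not_in_narrative": absent}

# Hypothetical lexicon and case data for illustration.
event_lexicon = ["Nausea", "Vomiting", "Headache", "Rash"]
narrative = "The patient reported nausea and vomiting two days after dosing."
coded_pts = ["Nausea", "Headache"]

print(meddra_mismatches(narrative, coded_pts, event_lexicon))
```

Both directions matter: a term in the narrative that was never coded may be a missed event, and a coded term that never appears in the narrative may indicate the text has drifted from the case record.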

Workflow 4: Literature RAG copilot

A literature copilot should build evidence maps, not just summaries. Most summary-only approaches miss gaps and overfit to the last paper retrieved. RAG works best when you ask targeted questions and track coverage.

- Inputs: PubMed results, guidelines, label information; Internal reports and slide decks (approved); A structured question list (PICO-style is helpful).

- Workflow: Build retrieval over validated sources; Answer targeted questions with citations; Output an evidence map (what is known, what is uncertain, what data is missing); Review by a scientific lead.

- Benchmarks: Citation accuracy rate; Time-to-answer for standard queries; Coverage of key evidence gaps (tracked as a checklist).

- This is also a good low-risk pilot because it can be done without patient-level data.
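An evidence map with a coverage metric can be represented with a very small data structure. The sketch below is one possible shape, not a standard: the three buckets follow the "known, uncertain, missing" split described above, but the classification rule (an answer without citations counts as uncertain) and the example questions are assumptions.

```python
from dataclasses import dataclass, field

@dataclass
class EvidenceItem:
    question: str
    answer: str = ""
    citations: list = field(default_factory=list)

def classify(item):
    """Assumed rule: no answer is 'missing', an uncited answer is 'uncertain'."""
    if not item.answer:
        return "missing"
    if not item.citations:
        return "uncertain"
    return "known"

def build_evidence_map(items):
    """Group questions into known, uncertain, and missing buckets."""
    evidence_map = {"known": [], "uncertain": [], "missing": []}
    for item in items:
        evidence_map[classify(item)].append(item.question)
    return evidence_map

def coverage(evidence_map):
    """Share of questions answered with at least one citation."""
    total = sum(len(questions) for questions in evidence_map.values())
    return len(evidence_map["known"]) / total if total else 0.0

# Illustrative question list; source IDs are made up.
items = [
    EvidenceItem("Efficacy vs standard of care in adults?", "Superior on primary endpoint", ["PMID-1"]),
    EvidenceItem("Long-term safety beyond 52 weeks?", "Limited data suggest no new signals"),
    EvidenceItem("Evidence in paediatric patients?"),
]
emap = build_evidence_map(items)
print(emap)
print(f"coverage: {coverage(emap):.0%}")
```

Tracking the "missing" bucket explicitly is what separates this from a summary: the scientific lead reviews the gaps as well as the answers.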

Workflow 5: Trial feasibility & site selection narrative

Feasibility narratives often become opinion battles because they lack consistent structure. GenAI helps by producing a standardized narrative grounded in performance data. It should never guess enrollment feasibility without inputs.

- Inputs: Prior site performance metrics; Enrollment rates by region; Screen failure rates for similar trials; Competitive environment and trial density; Protocol constraints (I/E, visits, procedures).

- Workflow: Generate a narrative with: key feasibility assumptions, risks and mitigations, rationale by geography; Attach evidence references to every claim; Run privacy checks and log access.

- Metrics: Time saved per feasibility draft; Fewer iteration loops with ops and clinical; Consistency with protocol constraints (measured by rule checks).

- Controls: Data privacy and de-identification; Role-based access control; Full audit logging.

Governance and validation

You do not add compliance later in regulated GenAI. You design the workflow so it is auditable from day one. This section is the difference between a pilot and a deployable system.

Top 5 failure modes in CTD/CSR/IB/PV

- Fabricated references – Risk: invented study IDs, citations, or regulatory precedents. Mitigation: RAG-only + forced citations + automated reference cross-checking + human numeric review.

- Cross-section inconsistencies – Risk: objectives not matching endpoints, endpoints not matching SAP, IB risk statements drifting from CSR safety narrative. Mitigation: template-based generation + schema outputs + cross-document validation pass.

- Overconfident interpretation – Risk: causal language ("proves," "demonstrates") without support. Mitigation: tone constraints + red-flag language detection + lock interpretation sections for human-only drafting.

- Data leakage – Risk: confidential compounds, trial designs, or PHI exposed through public tools. Mitigation: enterprise isolation (VPC/secure environment) + de-identification + RBAC + encryption.

- Loss of auditability – Risk: AI text inserted without prompt logs, sources, version history, or reviewer trace. Mitigation: audit logs + version control + SOPs + human approval checkpoints.
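Red-flag language detection, listed above as a mitigation for overconfident interpretation, can start as a simple pattern scan. In this sketch, "proves" and "demonstrates" come from the failure mode described above; the remaining terms in `RED_FLAGS` are assumed additions that a medical writing team would replace with its own list.

```python
import re

# "proves" and "demonstrates" are from the text above; the rest are illustrative guesses.
RED_FLAGS = ["proves", "demonstrates", "confirms", "caused by", "definitively"]
PATTERN = re.compile(
    r"\b(" + "|".join(re.escape(term) for term in RED_FLAGS) + r")\b",
    re.IGNORECASE,
)

def red_flag_hits(text):
    """Return the red-flag terms found in the text, in order of appearance."""
    return [match.group(0).lower() for match in PATTERN.finditer(text)]

draft = "This analysis proves the drug is safe and demonstrates durable efficacy."
print(red_flag_hits(draft))
```

A hit does not mean the sentence is wrong, since "demonstrates" can be justified by the data. It means the sentence should be routed to a human who owns interpretation.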

The hallucination and omission findings reported in Digital Medicine are a useful reminder that errors do occur in real text generation: hallucination and omission rates of 1.47% and 3.45%, respectively, were observed in clinical note generation, and iterating on the workflow significantly reduced major error rates.

The minimum safe operating model is simple and strict. It is also the model that scales.

- Controlled retrieval (RAG): Only approved sources; Clear source allowlists.

- Structured outputs: JSON or schema output first; Narrative rendering second.

- Automated QC: Citation checks; Numeric concordance checks; Cross-module consistency checks.

- Human sign-off: Humans own scientific conclusions; Humans own regulatory positioning.
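The "schema output first, narrative rendering second" step in this operating model can be illustrated with a small validator. This is a sketch under assumptions: the field names in `REQUIRED` and the rendered sentence format are hypothetical, and a real deployment would more likely use a formal schema language than hand-written type checks.

```python
import json

# Hypothetical schema for a single quantitative claim.
REQUIRED = {
    "section_id": str,
    "claim": str,
    "value": (int, float),
    "unit": str,
    "source_id": str,
}

def parse_structured(raw_json):
    """Validate model output against the expected fields before any prose is rendered."""
    record = json.loads(raw_json)
    for key, expected_type in REQUIRED.items():
        if key not in record:
            raise ValueError(f"missing field: {key}")
        if not isinstance(record[key], expected_type):
            raise ValueError(f"wrong type for field: {key}")
    extra = set(record) - set(REQUIRED)
    if extra:
        raise ValueError(f"unexpected fields: {sorted(extra)}")
    return record

def render(record):
    """Deterministic rendering: the sentence contains only validated fields."""
    return f"{record['claim']}: {record['value']} {record['unit']} [{record['source_id']}]."

# Illustrative model output; the source ID is made up.
raw = (
    '{"section_id": "2.7.3", "claim": "Mean change from baseline", '
    '"value": -1.2, "unit": "%", "source_id": "CSR-001"}'
)
print(render(parse_structured(raw)))
```

Rejecting unexpected fields is deliberate: it prevents the model from smuggling extra assertions into the record, which keeps automated QC and human sign-off focused on a known, fixed set of claims.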

Clear boundary rule:

- AI can draft: shells, summaries with citations, template sections, consistency flags.

- Humans must own: interpretation, benefit-risk framing, final submission intent.

Validation and documentation: audit trails, SOPs, and inspection readiness

Inspection readiness is mostly documentation discipline. You need to prove what was generated, from what, by whom, and when. Log at minimum:

- Prompt and system instruction versions

- Retrieved sources (document IDs, versions)

- Model version and configuration

- Output text and structured output

- Reviewer edits and approvals

- Final inserted content and document version

Write SOPs that define: what tasks can use GenAI, what tasks are prohibited, required QC checks, required human roles for review, and disclosure policy inside the organization. If you cannot audit it, you cannot defend it.
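The minimum log fields listed above map naturally onto an immutable record with a content hash. The following is a minimal sketch, assuming hypothetical field names and using a SHA-256 digest as a simple tamper-evidence mechanism; it is not a substitute for a validated audit trail system.

```python
import hashlib
import json
from dataclasses import asdict, dataclass, replace
from datetime import datetime, timezone

@dataclass(frozen=True)
class GenerationRecord:
    prompt_version: str
    source_ids: tuple        # retrieved document IDs with versions
    model_version: str
    output_text: str
    reviewer: str
    decision: str            # e.g. "approved" or "rejected"
    document_version: str
    timestamp: str

def record_digest(record):
    """SHA-256 over a canonical JSON form, so any later change to the record is detectable."""
    payload = json.dumps(asdict(record), sort_keys=True).encode("utf-8")
    return hashlib.sha256(payload).hexdigest()

# Illustrative values only.
record = GenerationRecord(
    prompt_version="protocol-shell-v3",
    source_ids=("CSR-001@v2", "TPP-007@v1"),
    model_version="model-2024-06-locked",
    output_text="Draft synopsis text",
    reviewer="medical.writer@example.org",
    decision="approved",
    document_version="PROT-123 v0.4",
    timestamp=datetime.now(timezone.utc).isoformat(),
)
print(record_digest(record))
```

Storing the digest alongside the record (or in a separate append-only log) lets an inspector verify that the logged prompt, sources, output, and approval have not been altered since sign-off.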

Moving from hype to reality: what's working, what's not, and what to measure

The hype is not helpful. Measured workflows are helpful. Discovery results are exciting. The GENTRL DDR1 result showed novel inhibitor design in 21 days with assay validation and favorable mouse PK for one lead. AlphaFold2's CASP14 performance also changed structural prediction quality, with median GDT_TS 92.4 across all targets.

But in most large organizations, the first repeatable ROI is documentation:

- Protocols, CSRs, IBs, CTD Module 2 summaries

- Consistency checking across submission packages

- PV narrative first drafts and validation

- As noted earlier, 40% of pharma executives reported baking expected GenAI savings into 2024 budgets.

Impact metrics that executives care about

- Time: Cycle time to first structured draft (days); Cycle time to approved protocol version (weeks); Time per PV narrative (minutes).

- Cost: Effort hours per document section; External writing/vendor hours reduced.

- Quality: Revision rounds (target down); Reviewer edits per 1,000 words (target down); Inconsistency rate across documents (target down).

- Risk (must-have): Citation traceability rate (target 100% for claims); Numeric concordance pass rate; Fabrication rate (must be zero).
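Several of these metrics are simple ratios, and it helps to compute them the same way for every document. The sketch below assumes a hypothetical `DraftReview` record filled in at the end of each review; the gate reflects the targets stated above (100% citation traceability, zero fabrication), and the example numbers are invented.

```python
from dataclasses import dataclass

@dataclass
class DraftReview:
    word_count: int
    reviewer_edits: int
    claims_total: int
    claims_traced: int
    numeric_checks_total: int
    numeric_checks_passed: int
    fabricated_items: int

def _ratio(numerator, denominator):
    """Return 0.0 when there is nothing to measure, which conservatively fails the gate."""
    return numerator / denominator if denominator else 0.0

def summarize(review):
    """Compute the quality and risk metrics for one reviewed draft."""
    return {
        "edits_per_1000_words": 1000 * _ratio(review.reviewer_edits, review.word_count),
        "citation_traceability": _ratio(review.claims_traced, review.claims_total),
        "numeric_concordance": _ratio(review.numeric_checks_passed, review.numeric_checks_total),
        "fabrication_count": review.fabricated_items,
    }

def passes_risk_gate(metrics):
    """Must-have risk targets: every claim traced and nothing fabricated."""
    return metrics["citation_traceability"] == 1.0 and metrics["fabrication_count"] == 0

# Invented numbers: 18 edits over 4,500 words, 118 of 120 claims traced.
review = DraftReview(4500, 18, 120, 118, 40, 40, 0)
metrics = summarize(review)
print(metrics)
print("release gate passed:", passes_risk_gate(metrics))
```

In this example the draft scores 4.0 edits per 1,000 words but fails the gate because two claims could not be traced, which is the intended behavior: quality metrics trend over time, while risk metrics block release.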

Disadvantages of AI in the Pharmaceutical Industry

- Hallucinations and omissions: Control with RAG + forced citations + red-flag language detection + human review.

- Bias and uneven evidence coverage: Control with evidence map approach + coverage checklists + reviewer challenge process.

- Privacy and confidentiality risk: Control with de-identification + enterprise isolation + RBAC + logging.

- Model drift and inconsistent outputs: Control by locking model versions for validated workflows + regression testing on a gold set.

- Tool sprawl: Control with one controlled platform pattern + SOPs + centralized logging.

- Change management: Control with role clarity (AI drafts, humans decide) + training + measurable KPIs.
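The "regression testing on a gold set" control for model drift can be sketched as a small harness. Everything here is illustrative: `stub_generate` stands in for a call to the locked model version, and the gold-set structure (required facts and forbidden terms per case) is one assumed way to encode expectations.

```python
def regression_failures(generate, gold_set):
    """Run each gold case through the generator and report missing facts or forbidden terms."""
    failures = []
    for case in gold_set:
        output = generate(case["input"])
        missing = [fact for fact in case["required_facts"] if fact not in output]
        forbidden = [
            term for term in case.get("forbidden_terms", [])
            if term.lower() in output.lower()
        ]
        if missing or forbidden:
            failures.append({"id": case["id"], "missing": missing, "forbidden": forbidden})
    return failures

def stub_generate(prompt):
    """Placeholder for the validated model call; returns a fixed-format summary."""
    return f"Summary: {prompt} Dose was 10 mg."

# Hypothetical gold set.
gold_set = [
    {"id": "G1", "input": "Study A.", "required_facts": ["10 mg"], "forbidden_terms": ["proves"]},
    {"id": "G2", "input": "Study B.", "required_facts": ["20 mg"]},
]

for failure in regression_failures(stub_generate, gold_set):
    print(failure)
```

Running the same gold set before and after any model, prompt, or retrieval change gives a documented basis for deciding whether a workflow needs revalidation.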

My take: Multimodal clinical copilots that combine imaging, labs, and clinician dictation will be valuable, but most companies are not ready to govern them. Start by getting text workflows inspection-ready first.

Practical starter kit

You can make progress in 30 days without taking unsafe risks.

Prerequisites

- A controlled document repository (approved sources and versioning).

- A basic template library (protocol sections, Module 2 structures, PV templates).

- Privacy process for any sensitive inputs (de-identification rules).

- Named human reviewers and sign-off roles.

- Agreement on "no citation, no ship" for regulated outputs.

Pick 1-2 low-risk pilots

- Literature RAG copilot for evidence mapping (no patient-level data).

- Protocol shell drafting using templates (drafting support, not final decisions).

- Synthetic or aggregated feasibility narratives for early planning (avoid PHI).

- Why these are safer: You can keep sources controlled, measure citation accuracy, and reduce operational rework without touching final regulatory interpretation.

- Peer reality check: Novo Nordisk at the forefront of using GenAI in regulatory medical writing

Define success metrics and a review process before writing any code

- Baseline metrics (before): Time per protocol section draft; Number of review rounds per protocol; Inconsistency count found late; Time per PV narrative.

- Acceptance thresholds (after): Citation traceability rate: target 100%; Numeric concordance: target 100%; Fabrication rate: target 0.

- Human roles: Medical writer (owns draft quality); Clinician (owns scientific intent); Regulatory reviewer (owns compliance tone); PV reviewer (owns narrative correctness).

Tooling Checklist: Best AI tools for Pharmaceutical Industry

- System of record for lab and R&D data: Benchling as an ELN + sample tracking platform.

- Scientific validation engines for discovery: Schrödinger for docking and simulation.

- AI-first hit finding layer: Atomwise for deep learning-based virtual screening concepts.

- RAG layer + enterprise LLM: Needs allowlists, access control, and citation enforcement.

- Document management + version control: Needs review workflows and approvals.

- QC and consistency engine: Needs cross-module and numeric concordance checks.

- Redaction and de-identification: Needs PHI detection and masking.

- Visual communication tools: BioRender helps teams communicate compliant workflows clearly.

How StudyConnect helps teams de-risk pilots with the right experts

GenAI pilots fail when teams cannot get fast, credible review from the right humans. StudyConnect helps teams quickly find verified experts who can:

- Review protocol drafts for clinical realism and feasibility

- Challenge endpoint choices and operational burden early

- Validate PV narrative templates and safety wording expectations

- Stress-test regulatory workflows and SOPs for inspection readiness

GenAI in pharma R&D is real, but the value is not evenly distributed. Discovery generation is exciting, yet most teams get their first reliable ROI in documentation and QC. My bias: if you are deciding where to place your first serious bet, put it on protocol and Module 2 drafting with forced-citation RAG and consistency checks. That is where the effort is predictable, the outputs are measurable, and the risk can be controlled. If you treat GenAI as infrastructure (RAG, templates, QC, and human accountability), you can move fast without increasing compliance risk.

For any further questions, reach out to us at support@studyconnect.org.