Generative AI in Healthcare: Expert-approved Deep Dive

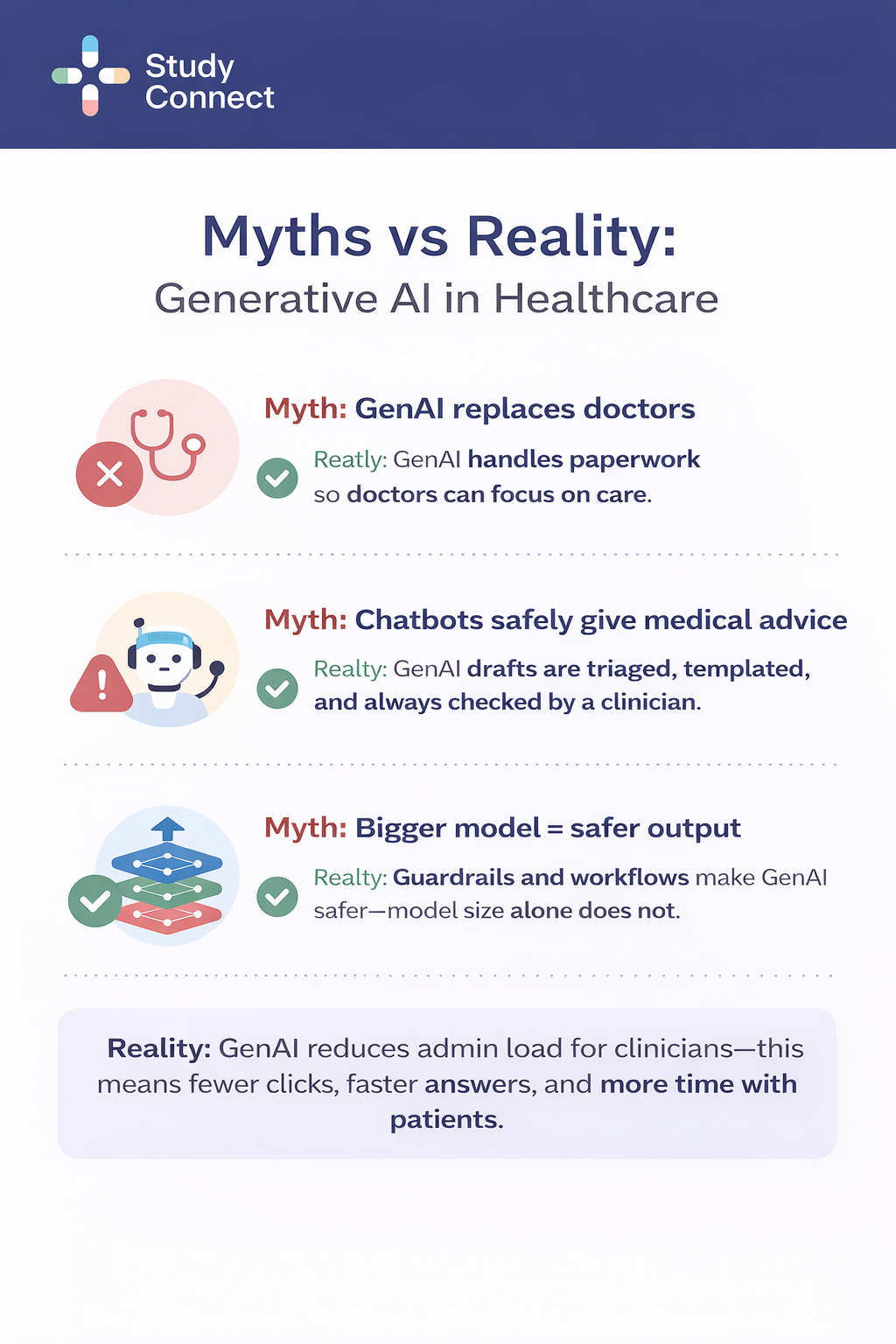

Generative AI is already useful in healthcare, but not in the way most headlines suggest.In real hospitals and clinics, it mostly drafts text and helps staff find information faster. The safest pattern is consistent: the model drafts, a human decides, and nothing is sent or saved without review.

Where generative AI works today in Healthcare

Generative AI works best when the "product" is a draft, not a medical decision. That is why the strongest deployments live inside documentation, summaries, inbox workflows, and admin writing.Most serious deployments treat genAI like a high-speed junior assistant.It prepares a first draft, surfaces relevant context, and suggests structure.

But the clinician or staff member remains the accountable decision-maker:

- A clinician reviews and signs notes before they land in the chart.

- A nurse or clinician verifies meds and follow-ups before discharge instructions go out.

- A billing or PA specialist approves and submits prior auth packets.

- A triage workflow routes risky patient messages before any advice is drafted.

This "draft-first, approval-always" stance is not optional. It reduces hallucination harm, privacy mistakes, and liability.

What generative AI means in Healthcare ?

Generative AI is best understood as a writing and summarizing engine. It helps with language-heavy work that humans must still verify.

What is artificial intelligence in medicine vs generative AI

Artificial intelligence in medicine is a broad term. It includes any system that performs tasks that normally need human intelligence. In practice, healthcare AI often splits into two buckets:

Predictive AI (classify/score):

- Predict readmission risk

- Flag sepsis risk

- Classify a clinical condition from signals

Generative AI (create text):

- Draft a SOAP note from a transcript

- Summarize a chart for pre-op review

- Draft a reply to a portal message

- Generate a prior auth appeal letter using chart evidence

Why language tasks are the best fit in healthcare

Healthcare is full of text work that is Repetitive , High volume , Easy to measure (minutes saved, closure rates, turnaround times) , Safer to constrain (templates, citations, human sign-off). Adoption is already visible in surveys.

For example, in a physician survey reported by the AMA, 21% of physicians said they used AI for documentation of billing codes/medical charts/visit notes, and 20% for discharge instructions/care plans/progress notes (2024 survey responses, AMA article dated Feb 26, 2025: "2 in 3 physicians are using health AI...") (https://www.ama-assn.org/practice-management/digital-health/2-3-physicians-are-using-health-ai-78-2023). These are exactly the language-heavy categories where genAI fits.

Where generative AI is dangerous or overhyped

Unsafe GenAI patterns tend to appear quickly when teams rush into pilots without the right safeguards. Common failure modes include deploying “answer anything” chatbots for patients or clinicians with no grounding, auto-sending patient instructions or portal replies without human review, and making factual claims without citations or source links. Other risks come from failing to monitor for drift, repeated error clusters, or missed red flags, as well as pulling too much context, which can increase privacy exposure and PHI leakage. Teams also often over-trust fluent outputs, where hallucinations and omissions can sound confident, and overlook the need for strong permission controls to prevent cross-patient or restricted-policy retrieval. The key reality check is that even in carefully studied settings, errors still happen—so workflow guardrails matter as much as the model itself.

A 2025 study on transcript-to-note generation used 450 primary-care consult transcript-to-note pairs with 12,999 clinician-annotated note sentences and 49,590 transcript sentences. It found hallucinations in 1.47% of note sentences (191/12,999), and 44% of hallucinations were "major" (could affect diagnosis/management). Omissions occurred in 3.45% of transcript sentences (1712/49,590). Hallucination types included fabricated content (43%), negations (30%), contextual (17%), and causality (10%). (Nature npj Digital Medicine, 2025) (https://www.nature.com/articles/s41746-025-01670-7.pdf). That is why healthcare genAI must be designed to show evidence, constrain output, and require review.

Use cases where generative AI works today (grouped by workflow)

Ambient Documentation / AI Scribes

Ambient scribes convert clinical conversations into structured draft notes (often SOAP format). This is currently the highest-adoption GenAI workflow because the time savings are immediate.GenAI fits well here because visits are structurally repetitive, even if wording varies. Clinicians frequently struggle with the “blank page” problem, and documentation burden is measurable. In one outpatient quality improvement study (baseline April–June 2024, reassessed after 7 weeks of use):

- Time in notes per appointment dropped from ~10.3 → 8.2 minutes (20% reduction)

- Same-day note closure improved from 66.2% → 72.4%

- After-hours documentation decreased from ~50.6 → 35.4 minutes/day (30% reduction)

Adoption is no longer experimental. 42% of medical group leaders report their organization uses an ambient AI solution (MGMA, Summer 2024):

What humans control: editing, signing, confirming plans, correcting inaccuracies.

What makes it safe: transcript-backed evidence snippets, structured templates, mandatory review.

Evidence snippets are especially important. Each drafted line should be supported by a transcript excerpt visible in the UI. If a claim has no snippet, it should be treated as an unverified draft — not chart-ready text.

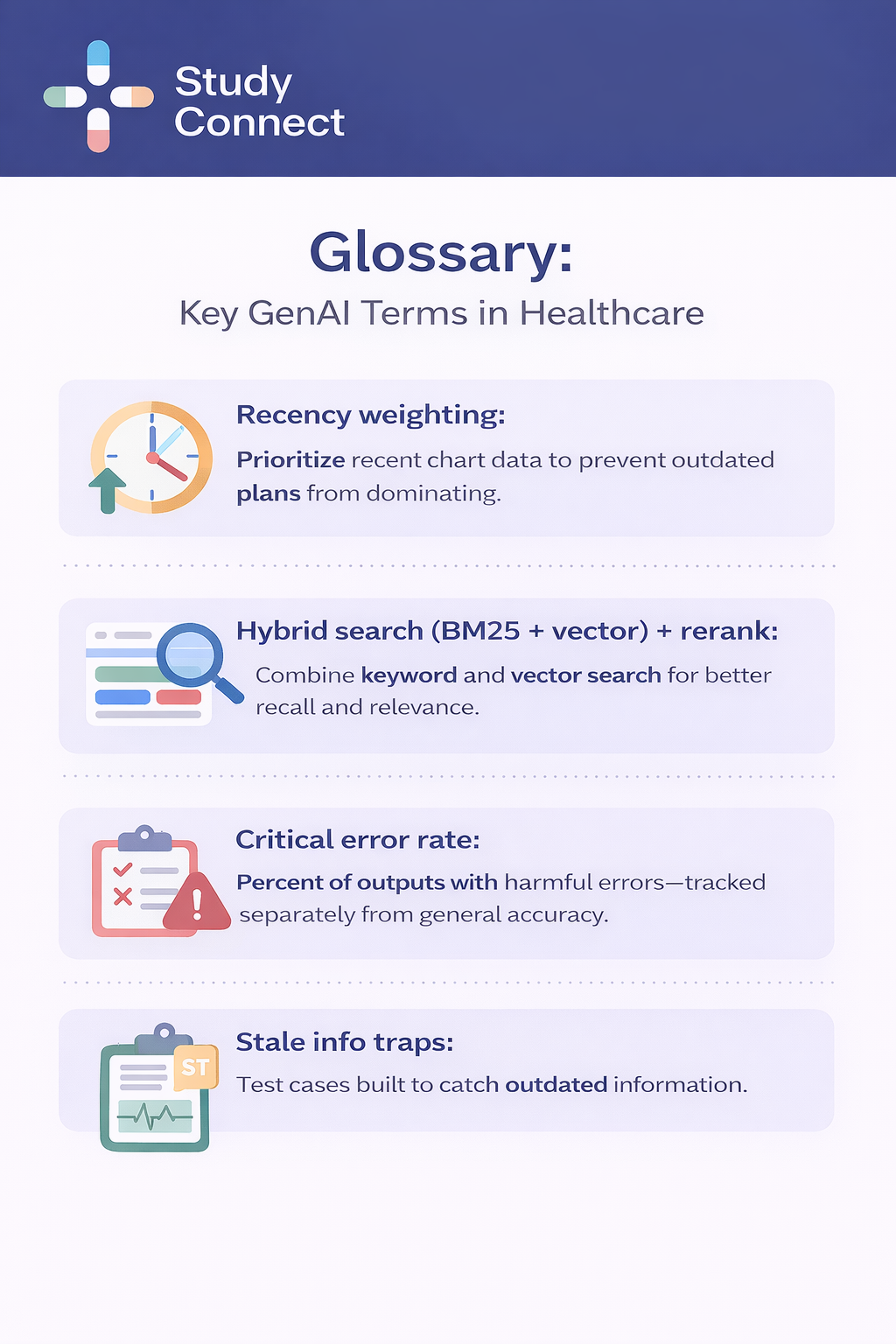

Chart summarization Inside the EHR (review support, not decisions)

EHR summarization helps clinicians orient quickly, especially during care transitions, and should be treated as review support rather than clinical decision support. Its value comes from reducing the time spent searching through long, fragmented charts by providing purpose-built summaries for common needs such as ED handoffs, pre-operative history reviews, discharge planning snapshots, and oncology timelines across encounters. GenAI fits well here because charts are repetitive and dense, and the goal is not to make decisions but to surface relevant context faster. Clinicians still remain fully responsible for verifying information against original sources, deciding what is clinically important, and correcting omissions before acting. Safe EHR summarization depends on strong guardrails, including summary types designed for specific use cases, clear citations with note titles, authors, and timestamps, recency weighting to prioritize newer information, structured must-include blocks like allergies, anticoagulants, critical labs, recent imaging impressions, and the current problem list, and allowing “not found” rather than guessing. Ultimately, a good EHR summary is less about polished prose and more about traceable provenance.

In-basket and Clinician Messaging Drafts

Inbox drafting offers clear efficiency gains, but it is deceptively risky if implemented without strong safeguards. The safest approach is to treat drafting as a second step that only happens after proper triage. In this workflow, the system first categorizes the message type such as symptoms, refill requests, results questions, or administrative issues then suggests routing to the appropriate team before drafting a reply in patient-safe language. GenAI fits well here because inbox volume is high and patterns are repetitive, and even routing alone can reduce clinician workload before drafting begins. However, humans must remain firmly in control: clinicians or staff approve and edit every response, escalate urgent issues, and retain sole authority over medication changes. In practice, safety depends on urgency tiering before drafting, red-flag escalation triggers that disable drafting and force routing, policy-limited macros for high-risk topics, and strict prohibitions against medication changes, dosing advice, or diagnostic statements. Tone guardrails also matter, especially banning absolute reassurance language such as “definitely,” “no need to worry,” or “guarantee.” This is the workflow where confident-but-wrong language can cause the most harm, so escalation logic must be correct otherwise, it should not be shipped.

Discharge Instructions

Discharge instructions are both a high return opportunity and a high harm surface because they sit at the intersection of clinical specificity and patient facing clarity. In this workflow, GenAI converts the clinical plan and discharge orders into clear patient steps, rewrites technical language into simpler phrasing, and standardizes formatting across clinicians and units. The fit is strong because clinicians often document in clinical shorthand, while patients need structured, readable instructions with consistent organization. Templates also help reduce variability across departments. Even so, humans remain fully responsible for RN or clinician sign off, verifying medications, doses, durations, and follow up timelines, and confirming that red flag escalation instructions are accurate. Failures tend to occur when the system hallucinates a dose or duration, contradicts the active medication list, omits important red flag symptoms, or lists the wrong follow up timeframe. Preventing harm requires grounding only to structured sources such as the active medication list, discharge orders, and scheduled follow ups, using slot based templates with fill in the blank constraints, running contradiction checks against medications and orders, relying on an approved red flag library rather than model authored warnings, and never auto sending instructions without verified sign off. If a discharge tool can freely improvise, it is not ready for production use.

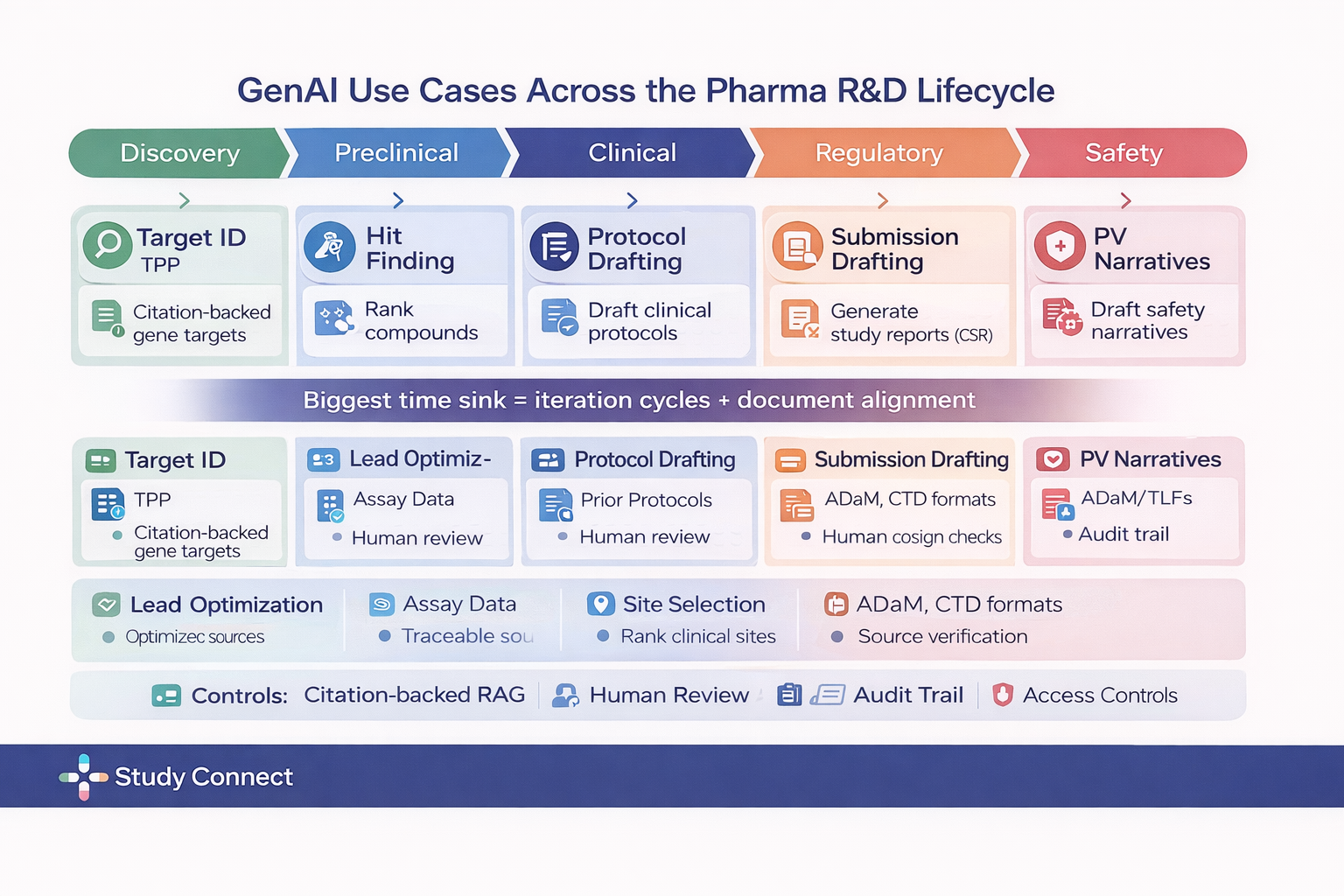

Prior Auth Submissions and Appeal Letters

Prior authorization workflows are repetitive, rules based, and document heavy, which makes them a strong match for constrained GenAI drafting combined with structured checklists. In practice, these systems can draft prior auth submissions and appeal letters, extract supporting chart evidence such as failed step therapy, prior medications, or contraindications, map the content to payer specific requirements, and assemble an evidence pack with traceable facts. GenAI fits well because the volume of similar letters is high and outcomes are easy to measure through turnaround time, denial rates, and resubmission cycles. While the direct clinical harm risk is lower than in patient facing instructions, the financial impact is significant, so accuracy still matters. Humans remain responsible for billing and prior auth team approval, coding confirmation in an assist only capacity, and final submission. Safe implementations depend on guardrails such as evidence packs linked to chart facts including visit dates, labs, and imaging, payer rule checklist gates that block finalization if required fields are missing, strict prohibitions against invented guidelines or fabricated citations, coding validation flags for CPT and ICD discrepancies, and ongoing audits with sampling review.

Adoption data reinforces that this is already a budgeted operational category: 46% of hospitals and health systems use AI in revenue cycle management operations (AI broadly), per an AHA Market Scan citing an AKASA/HFMA Pulse Survey (Jun 4, 2024) . This does not mean all of that is GenAI, but it shows revenue cycle management is already an AI investment line, making GenAI assisted letters a practical next step.

Clinical Trial Documents and Medical Writing

Trial operations are documentation heavy and template driven, which makes them a strong fit for controlled GenAI drafting with strict review processes. In this setting, GenAI can support protocol drafting based on approved templates and prior protocols, assist with amendment drafting and change tracking, generate first drafts of monitoring reports and adverse event narratives, help create patient friendly consent explanations with oversight, and provide structured support for sections of clinical study reports, never in an unsupervised manner. The fit is practical because document bottlenecks often slow trial timelines, teams already rely on standardized sections, and established review layers such as medical writing, quality assurance, and regulatory review are built into the workflow. Humans remain fully responsible for expert sign off, QA checks, maintaining locked templates and controlled source libraries, and submitting final regulatory documents. The main risks include fabricated citations, missing constraints related to endpoints, study populations, or safety reporting rules, and inconsistent terminology across sections. Effective mitigations require retrieval only from approved internal document stores, mandatory citation requirements, and strict review and version control. In regulated environments, standards must be high, and if a vendor cannot demonstrate version control along with traceable source attribution, it should not be permitted to draft regulated trial documents.

Teams use StudyConnect to find clinicians, trial investigators, and domain experts to validate whether a proposed genAI workflow matches real protocol, monitoring, and consent processes before building.

Internal Knowledge Search

Internal knowledge search is often the fastest felt improvement for clinicians, but it must be grounded, permissioned, and testable to be safe in production. In this workflow, GenAI helps clinicians find policy answers quickly such as referral rules or documentation standards, pull patient context without hunting across scattered notes, and summarize retrieved snippets into a cited response. GenAI fits well because clinicians ask questions in natural language, and the system can retrieve and compress relevant passages, saving real time while building adoption through earned trust. Humans still remain responsible for verifying citations, deciding clinical actions, and recognizing when the system should abstain rather than guess. In practice, production ready retrieval augmented generation setups separate corpora between patient EHR data and organizational policies, enforce permissions as the user rather than retrieving as a superuser, chunk documents by note sections while preserving metadata like author, timestamp, and note type, apply time windows and recency weighting, and use hybrid search combining BM25 keyword retrieval with vector semantic search plus reranking. Many teams also enforce a strict no citation, no display policy. Evaluation targets commonly include Retrieval Recall@10 goals of 0.85 to 0.95, grounding rates at or above 0.95 where claims are supported by citations, and critical error rates at or below 1 to 2 percent on high risk test sets. Safety testing often includes PHI leakage prompts, restricted policy access prompts, and stale information traps where older plans conflict with newer updates. These measurable gates are practical because they translate trust into clear production thresholds.

Design patterns that separate serious products from demos (guardrails that prevent harm)

The best healthcare genAI tools are not chat. They are systems.

They constrain output, show sources, and build in refusal and approvals.

How to Roll Out Generative AI in Healthcare Safely

Generative AI in healthcare is not just about model quality. It is about governance, workflow design, and measurable safeguards. Safe deployment requires grounding, constraints, human review, monitoring, and clear escalation paths.

Grounding and Citations: No Citation, No Display

If an output is factual, it must be traceable. In healthcare, that means citation down to the specific note and timestamp. Strong citation design includes the note title, author, and timestamp, encounter ID when relevant, lab name with collection time, imaging impression with date and time, and policy section heading with version or effective date. A strict rule simplifies governance: if there is no citation, do not display the output.This approach reduces hallucinations and eliminates vague summaries that cannot be audited. For teams building governance frameworks around AI risk, helpful references include the NIST AI Risk Management Framework (https://www.nist.gov/ai) and WHO guidance on AI in health (https://www.who.int).

Templates, Constraint Slots, and Contradiction Checks

Templates are not a limitation. They are a safety mechanism. They prevent creative improvisation by forcing the model to fill only allowed fields and leave blanks when evidence is missing. In discharge workflows, this might include structured medication tables, follow-up lists, red flag sections, and activity restrictions. In prior authorization letters, it may include payer checklists, evidence sections, and diagnosis codes. Inbox drafting templates may enforce triage categories, urgency tiers, and approved macro blocks. Contradiction checks compare generated content against structured truth. For example, comparing the medication list against drafted discharge instructions, verifying orders against follow-up statements, checking appointment schedules against generated dates, or validating payer requirements against letter content. Dangerous mismatches such as incorrect doses should block output entirely. Lower risk inconsistencies should trigger human review.

Human-in-the-Loop Gates and Audit Logs

In healthcare, human approval is not optional. It is a required safety control. Each workflow needs defined approval gates. Clinical notes require clinician review and signature before chart save. Discharge instructions require RN or clinician sign off before sending. Prior authorization letters require billing or PA team approval. Inbox drafts must be reviewed before sending, with red flags automatically routed to urgent workflows. Audit logs should capture the prompt or context used, the generated text, human edits, and the final action including who approved it and when. This is how incidents are investigated and how systems improve safely over time.

Abstain and Escalation Behavior

Safe tools do not answer everything. An abstain response occurs when the system cannot safely answer due to insufficient evidence or conflicting information. It may state that support was not found in the record while still showing cited context it retrieved. Abstain triggers include low retrieval confidence, missing required template fields, contradictory sources, or high risk topics such as chest pain or suicidal ideation in messaging workflows. Escalation means routing work appropriately. This may include disabling drafting and routing urgent messages to nurse triage, routing policy questions to the appropriate department, or prompting the user to open cited source material for verification. If a GenAI system always produces an answer, it will eventually produce harm.

Implementation Roadmap: Start Small, Measure Clearly

Safe rollouts begin with one well-defined workflow and clear success metrics. Strong starting points include ambient scribe drafting, purpose-built chart summaries, inbox triage plus drafting, prior authorization appeals, or internal policy search. Define measurable outcomes in advance. These may include minutes saved per appointment, same-day note closure rate, prior authorization turnaround time, denial rate changes, clinician satisfaction, grounding rate of cited claims, and critical error rates on high risk test sets. Pilots without metrics cannot scale responsibly.

Data Readiness and Integration Planning

Generative AI fails when it lives outside the workflow. Integration should be planned first. Ambient scribes require audio or transcripts, note templates, and patient instruction formats. Chart summaries require access to notes, labs, medications, imaging impressions, and encounter metadata. Inbox drafting needs portal messages, routing rules, and approved macro libraries. Discharge workflows require structured medication and order data, follow-up scheduling, and red flag libraries. Prior authorization workflows require payer rules, coding fields, and structured evidence extraction. Knowledge search requires EHR and policy corpora, access control lists, and metadata. Integration goals should focus on minimal extra clicks, clear draft labeling, and one tap access to cited source material. For broader regulatory context, teams sometimes reference the FDA AI/ML-Enabled Medical Devices Database to understand how other AI classes are cleared, even though generative documentation tools are not imaging or diagnostic devices (https://www.fda.gov/medical-devices).

Governance Frameworks That Support Adoption

Technology adoption is not just about model performance. It is about workflow fit and trust. The Technology Acceptance Model emphasizes perceived usefulness and perceived ease of use. Does the system reduce after-hours documentation? Does it reduce search time? Does it reduce message handling time? Does it fit the clinician’s mental model such as SOAP or handoff structure? The NASSS framework helps organizations think about scale and sustainability. It asks whether the tool delivers measurable value, whether the technology is grounded and monitored, whether users are trained and supported, whether governance processes exist, whether regulatory posture is addressed, and whether the system improves over time rather than drifting. These frameworks prevent pilots that work in one clinic but fail at scale.

Evaluation and Monitoring Before and After Launch

Evaluation is ongoing, not a one-time test. Before launch, teams should build offline gold standard datasets for each workflow, conduct red flag prompt testing for messaging, run PHI leakage tests to attempt cross patient retrieval, test stale information traps where older plans conflict with newer plans, measure grounding rates, and perform clinician review sampling using structured error taxonomies. After launch, teams should monitor for drift such as new note types or policy updates, conduct weekly sampling reviews with domain owners, define clear incident escalation paths including the ability to disable features quickly, and maintain feedback loops into prompts, templates, retrieval logic, and user interface design. For enterprise deployment, teams often evaluate platforms designed for privacy controls and auditability, such as enterprise offerings from OpenAI (https://openai.com). Some also track healthcare specific research models such as Google Med-PaLM for medical reasoning research context (https://research.google).

Risks and Limits: A Straight Talk Section

Generative AI can reduce administrative burden, but it introduces new failure modes. Core risks include hallucinations, omissions, privacy exposure, bias, and liability. In daily workflows, hallucinations may appear as invented symptoms or fabricated rationales. Omissions may include missed anticoagulants or allergies. Privacy risks arise when irrelevant PHI is pulled into drafts. Bias may surface through tone or assumptions in patient messaging. Liability risk emerges when incorrect instructions are sent without review. Evidence shows these risks are measurable. A transcript to note study published in Nature npj Digital Medicine reported hallucinations in 1.47 percent of note sentences and omissions in 3.45 percent of transcript sentences, with nearly half of hallucinations rated as major

(https://www.nature.com/articles/s41746-025-01670-7.pdf). The appropriate response is not avoidance. It is structured safeguards mapped to each risk. Grounding with citations and template constraints reduces hallucinations. Must include blocks and checklists reduce omissions. Permissions enforced as the user and patient scoped retrieval reduce privacy exposure. Tone rules and review sampling mitigate bias. Human approval gates and escalation logic reduce liability.

Vendor and Contract Checklist

Before purchasing any solution, ask direct questions and document the answers. Will the vendor sign a Business Associate Agreement? What is their data retention and deletion policy? Is customer data used for model training by default? Where is data stored and how is it encrypted? Do they support permissions as the user? How do they prevent cross patient retrieval? Can sensitive note types or departments be restricted? Do they provide grounding with citations? What is their grounding rate on customer like tasks? How do they measure critical error rate? What is their abstain behavior? Do they provide detailed audit logs including generated text, edits, and final send or save actions? What is their incident response and breach notification process? How do they monitor drift over time? If a vendor cannot answer these questions clearly, the product is not ready for healthcare production.

If you need a compact stats box, these are credible and defensible:

- 85% of 150 US healthcare leaders were exploring or had already adopted genAI capabilities in a Q4 2024 survey, while 15% had not started proof-of-concepts (McKinsey, Mar 26, 2025) (https://www.mckinsey.com/industries/healthcare/our-insights/generative-ai-in-healthcare-current-trends-and-future-outlook).

- 42% of medical group leaders report using an ambient AI solution (MGMA, Summer 2024) (https://www.mgma.com/getkaiasset/b02169d1-f366-4161-b4d6-551f28aad2c9/NextGen-AmbientAI-Whitepaper-2024-final.pdf).

- Physician self-reported usage: 21% use AI for documentation of billing codes/medical charts/visit notes; 20% for discharge instructions/care plans/progress notes (AMA article Feb 26, 2025; based on 2024 responses) (https://www.ama-assn.org/practice-management/digital-health/2-3-physicians-are-using-health-ai-78-2023).

- 46% of hospitals/health systems use AI in revenue cycle management operations (AI broadly), per AHA Market Scan citing an AKASA/HFMA Pulse Survey (Jun 4, 2024) (https://www.aha.org/aha-center-health-innovation-market-scan/2024-06-04-3-ways-ai-can-improve-revenue-cycle-management).

- Real operational pilot metrics (ambient scribe example from the prompt): 10.3 -> 8.2 minutes per appointment, same-day closure 66.2% -> 72.4%, after-hours 50.6 -> 35.4 minutes/day, baseline Apr-Jun 2024, measured after 7 weeks.

Examples of Generative AI in Healthcare Companies

Buyers usually evaluate categories, not brand claims. Here are the main categories doing production work:

- Ambient scribe vendors (documentation drafting)

- EHR-integrated chart summarization tools

- Patient portal / in-basket draft and routing tools

- Prior auth automation and appeal drafting tools

- Clinical trial document drafting and medical writing copilots

- Internal knowledge search platforms (EHR + policies) with grounded answers

If you are building or buying in any of these categories, you may need fast access to clinicians, billing specialists, trial investigators, and compliance experts to validate workflows. StudyConnect is built to help teams find and speak with verified experts early, so you can confirm what is safe, what is realistic, and what will be adopted before you scale a pilot. Reach out to [email protected] for further support.

Where "artificial intelligence in medicine courses" help:

- Clinicians: how to supervise outputs and spot clinical failure modes

- Ops and billing: evidence extraction, compliance checks, audit readiness

- Compliance and risk: governance frameworks (NIST AI RMF concepts, monitoring)

- This is less about becoming an AI engineer and more about learning safe supervision habits.

Generative AI is already working in healthcare when it drafts text inside constrained workflows. It fails when it is asked to be a clinician, or when teams skip grounding, permissions, and review gates. If you are building or buying, the fastest path is still simple:

- Pick one workflow moment

- Define success and safety metrics

- Design citations, constraints, escalation, and audit logs from day one

- Validate the workflow with real experts before scaling

Jessica Mega, MD, MPH, captured the right attitude: "I encourage people to push [AI] for the unknown." I agree with the push, but not with reckless pilots. If a tool cannot show sources, enforce permissions, and prove low critical-error rates on hard test cases, it should not touch patient-facing text.

FAQs

AI-Generated: What are the most practical applications of generative AI in healthcare today (not diagnosis)?

The most reliable applications of generative AI in healthcare today are language-heavy workflows where the output is a draft, not a medical decision. In real clinics and hospitals, this includes ambient documentation (AI scribes that draft SOAP notes from a visit), chart summarization inside the EHR (for example, “ED quick summary” or “pre-op history summary”), and in-basket messaging drafts that help clinicians respond faster. Other high-ROI areas include discharge instruction rewriting into patient-friendly language, prior authorization packets and appeal letters, and clinical trial document support (protocol drafts, amendments, monitoring reports, and narrative summaries). Generative AI is suited here because these tasks are repetitive, text-based, and measurable. The key guardrail is constant: genAI drafts, but humans decide—clinicians, nurses, and revenue cycle teams review, edit, and approve before anything is filed, submitted, or sent to patients.

AI-Generated: How can AI be used in healthcare safely for discharge instructions and patient messaging?

The safest way to use AI in healthcare for discharge instructions and patient messaging is to treat generative AI as a drafting tool with strict controls. For discharge instructions, the biggest risk is “confident wrong specifics” (dose, duration, restrictions, or follow-up timing). Production systems reduce this by grounding content to structured sources only—active med lists, discharge orders, scheduled appointments—and using templates that limit what the model can fill in. Teams also run contradiction checks against the chart and block outputs when there is a mismatch. For in-basket replies, the safe pattern is triage first, draft second: messages are classified for urgency, red flags trigger escalation, and high-risk topics pull from approved macros instead of free-form text. A human reviewer must always approve before anything is sent. This is how can AI be used in healthcare without turning a draft into unverified advice.

AI-Generated: What should healthcare teams look for in generative AI in healthcare companies before adopting a tool?

When evaluating generative AI in healthcare companies, look beyond model demos and focus on product safety patterns and workflow fit. The best tools stay narrow (draft, summarize, route) and avoid acting like an autonomous clinician. Ask whether outputs are grounded with citations back to the EHR, transcript, or approved policies, and whether the tool can say “not found” instead of guessing. Confirm there is a hard human-in-the-loop step before anything reaches the medical record, a payer submission, or a patient message. You also want audit logs (what was generated, edited, and sent), role-based permissions, and monitoring for errors and drift. Integration matters: tools should live inside the EHR or existing portals with minimal extra clicks. If a vendor cannot explain its guardrails for hallucinations, privacy, and escalation, it is likely not ready for real clinical operations—even if the generative ai in healthcare market hype sounds strong.